Difference between revisions of "User:Darwin2049/chatgpt4 version02"

Darwin2049 (talk | contribs) |

Darwin2049 (talk | contribs) |

||

| Line 31: | Line 31: | ||

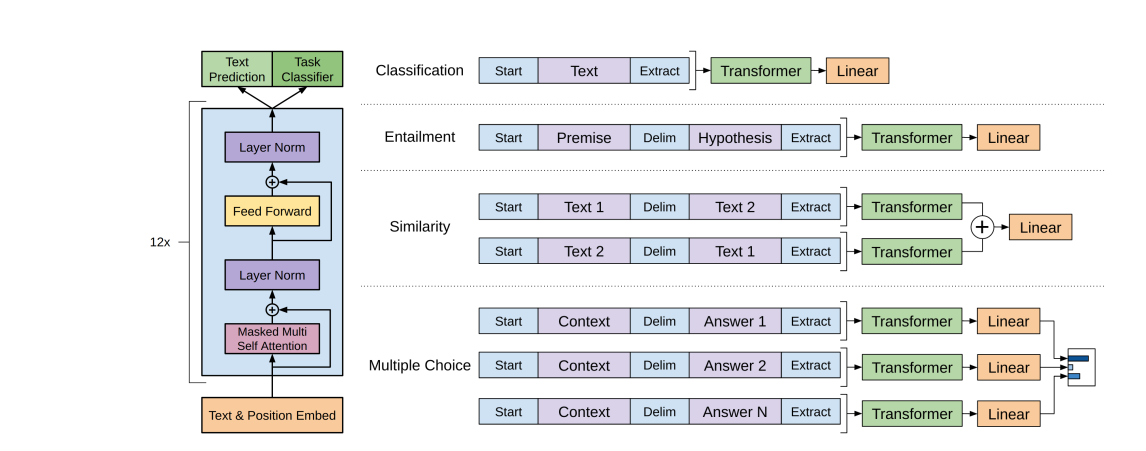

The transformer model is a neural network that learns context and understanding as a result of sequential data analysis. The mechanics of how a transformer model works is beyond the technical scope of this summary but a good summary can be found here. | The transformer model is a neural network that learns context and understanding as a result of sequential data analysis. The mechanics of how a transformer model works is beyond the technical scope of this summary but a good summary can be found here. | ||

[[File:Transformer. | [[File:Transformer.png]] | ||

'''''<span style="color:#0000FF">Some of its main features include:</Span>''''' | '''''<span style="color:#0000FF">Some of its main features include:</Span>''''' | ||

Revision as of 01:09, 12 June 2023

Interface Questions. This is presented as a multifaceted question. Its focus is on the risks associated with how target audiences access and use the system. The list of focal topics, as currently understood and which may grow over time, includes:

- how this new technology will respond to and interact with different communities;

- how these different communities will interact with this new technology;

- what limitations or “guard rails”, if any, are in evidence or should be considered depending upon the usage focus area;

- whether an access modality that carries certain privileges and capabilities might be safe for one group but risky for other groups; if so, how the problem of “leakage” might be addressed;

- in the event of unintended “leakage” (i.e. a “leaky interface”), what the implications might be of the insights, results or capabilities that leak;

Overview. In the following we try to analyze and contextualize the current known facts surrounding the OpenAI ChatGPT4 (CG4) system.

- CG4 – What is it: we offer a summary of how OpenAI describes it; put simply, what is CG4?

- Impressions: our focus then moves to examine what some voices of concern are saying;

- Risks and Impact: we shift focus to the ways we expect it to be used, either constructively or maliciously; here we focus on how CG4 might be used in expected and unexpected ways;

CG4 – What is it: CG4 is a narrow artificial intelligence system. It is based upon what is known as a Generative Pre-trained Transformer. According to Wikipedia:

Generative pre-trained transformers (GPT) are a type of large language model (LLM) and a prominent framework for generative artificial intelligence. The first GPT was introduced in 2018 by the American artificial intelligence (AI) organization OpenAI. GPT models are artificial neural networks that are based on the transformer architecture, pretrained on large data sets of unlabeled text, and able to generate novel human-like content. As of 2023, most LLMs have these characteristics and are sometimes referred to broadly as GPTs.
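The quotation describes pre-training on unlabeled text. This is usually framed as next-token prediction, in which the text supplies its own targets and so no human labeling is needed. Take a token sequence x_1 through x_T and model parameters θ. The standard objective minimizes the negative log-likelihood of each token given the tokens before it. OpenAI has not published CG4's exact training recipe. What follows is therefore the generic textbook formulation, assumed rather than confirmed for CG4:

```latex
\mathcal{L}(\theta) = -\sum_{t=1}^{T} \log p_\theta\left(x_t \mid x_1, \dots, x_{t-1}\right)
```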

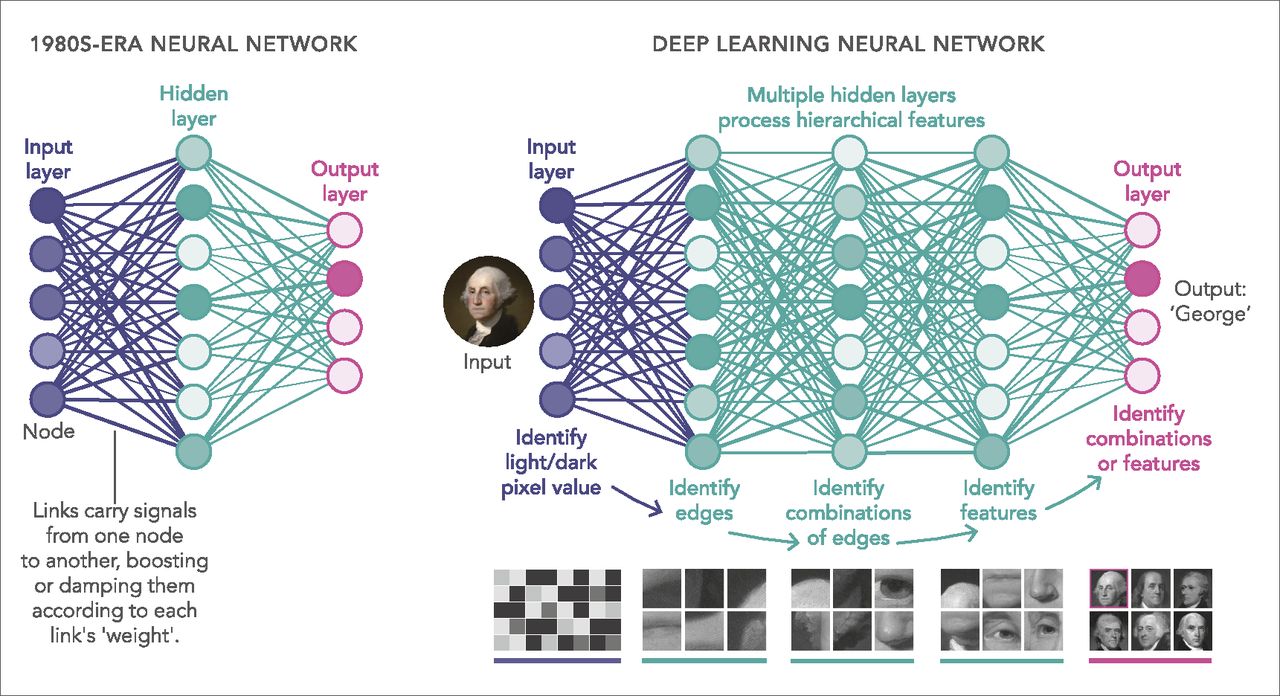

These generative pre-trained transformers are implemented using a deep learning neural network topology. This means that they have an input layer, a set of hidden layers and an output layer. Adding hidden layers generally increases the capacity of a deep learning system to represent complex patterns. The number of hidden layers in CG4 has not been disclosed but is speculated to be very large. A generic example of how hidden layers are implemented can be seen as follows.
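The following Python sketch is a rough illustration. It passes an input through hidden layers, where each layer is a weighted sum plus a bias followed by a ReLU activation, and then through a linear output layer. It is a generic fully connected network with tiny hand-picked weights, not CG4's architecture. The function names and the choice of ReLU are illustrative assumptions. Transformer layers are more elaborate, but they are broadly stacked in the same input, hidden, output fashion.

```python
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]  # weights[i][j]: connection from input i to output j


def dense(x: Vector, weights: Matrix, bias: Vector) -> Vector:
    """Fully connected layer: y_j = sum_i x_i * weights[i][j] + bias[j]."""
    return [
        sum(x[i] * weights[i][j] for i in range(len(x))) + bias[j]
        for j in range(len(bias))
    ]


def relu(v: Vector) -> Vector:
    """Non-linearity applied after each hidden layer: negative values become 0."""
    return [max(0.0, a) for a in v]


def forward(x: Vector, layers: List[Tuple[Matrix, Vector]]) -> Vector:
    """Input -> hidden layers (dense + ReLU) -> linear output layer."""
    *hidden, (out_w, out_b) = layers
    for weights, bias in hidden:
        x = relu(dense(x, weights, bias))
    return dense(x, out_w, out_b)


# Tiny network: 2 inputs -> 3 hidden units -> 2 outputs, hand-picked weights.
layers = [
    ([[1.0, 0.0, -1.0], [0.0, 1.0, -1.0]], [0.0, 0.0, 0.0]),  # hidden layer
    ([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]], [0.5, -1.0]),      # output layer
]
result = forward([1.0, 2.0], layers)  # hidden = [1.0, 2.0, 0.0], output = [1.5, 1.0]
```

The third hidden unit receives a negative sum, and ReLU sets it to zero. This is how the non-linearity lets different units respond to different input patterns.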

The generative pre-trained transformer accepts some text as input. It then attempts to predict the next word in sequence based upon this input, adding each predicted word to the text in order to generate an output. It has been pre-trained on a massive corpus of text; this training step enables the deep neural network to learn language structures and patterns. The network is then fine-tuned for improved performance. In the case of CG4, neither the size of the training corpus nor the number of model parameters has been revealed; the parameter count is rumored to be over one trillion.
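The predict, append and repeat loop described above can be illustrated with a deliberately crude stand-in: a bigram word counter in place of a neural network. This is an assumption for illustration only. In the sketch, "training" counts which word follows which, and generation greedily appends the most frequent follower. A real GPT conditions on the entire preceding context using a trained transformer, and it usually samples rather than always picking the top word. The autoregressive loop, however, is the same idea.

```python
from collections import Counter, defaultdict
from typing import Dict


def train_bigram(corpus: str) -> Dict[str, Counter]:
    """Toy "pre-training": count how often each word follows each other word."""
    words = corpus.lower().split()
    counts: Dict[str, Counter] = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return counts


def generate(counts: Dict[str, Counter], prompt: str, max_new_words: int = 5) -> str:
    """Greedy autoregressive generation: repeatedly append the most likely next word."""
    words = prompt.lower().split()
    if not words:
        return ""
    for _ in range(max_new_words):
        followers = counts.get(words[-1])
        if not followers:
            break  # no continuation was seen in the training text
        next_word, _ = followers.most_common(1)[0]
        words.append(next_word)
    return " ".join(words)


model = train_bigram("the cat sat on the mat . the cat ate the fish .")
text = generate(model, "the", max_new_words=4)  # "the cat sat on the"
```

In this toy corpus "cat" is followed once by "sat" and once by "ate". `Counter.most_common` breaks the tie in favor of the follower seen first.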

ChatGPT4 is a large language model (LLM) system. Informal assessments suggest that it has over one trillion parameters, but this has not been confirmed. If the speculation is true, CG4 would be one of the largest large language models to date. According to Wikipedia:

A large language model (LLM - Wikipedia) is a language model consisting of a neural network with many parameters (typically billions of weights or more), trained on large quantities of unlabeled text using self-supervised learning or semi-supervised learning. LLMs emerged around 2018 and perform well at a wide variety of tasks. This has shifted the focus of natural language processing research away from the previous paradigm of training specialized supervised models for specific tasks.
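In a fully connected layer, every connection weight and every bias is one learned parameter. Parameter counts therefore grow with the product of adjacent layer widths. The sketch below counts parameters for a stack of dense layers. The layer widths in the second example (4,096 and 16,384) are illustrative assumptions, not CG4's undisclosed dimensions.

```python
from typing import List


def dense_param_count(layer_sizes: List[int]) -> int:
    """Count learned parameters in a stack of fully connected layers.

    A layer from n_in to n_out units has n_in * n_out weights plus n_out biases.
    """
    return sum(
        n_in * n_out + n_out
        for n_in, n_out in zip(layer_sizes, layer_sizes[1:])
    )


tiny = dense_param_count([2, 3, 2])             # (2*3 + 3) + (3*2 + 2) = 17
block = dense_param_count([4096, 16384, 4096])  # 134,238,208
```

A single 4,096 to 16,384 to 4,096 block already holds about 134 million parameters. Stacking many such blocks is how models reach the billions of weights mentioned in the quotation.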

It uses what is known as the transformer model. The Turing site also offers useful insight into how the transformer model constructs a response from an input. Because the topic is highly technical, we leave it to the interested reader to examine the detailed processing steps.

The transformer model is a neural network that learns context and understanding as a result of sequential data analysis. The mechanics of how a transformer model works are beyond the technical scope of this summary, but a good summary can be found here.
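The central operation inside a transformer is usually described as scaled dot-product attention, softmax(QKᵀ/√d_k)·V. Each token's query vector is compared with the key vector of every token it can see, and the resulting weights mix the value vectors. The Python sketch below implements that formula for a single attention head. It includes an optional causal mask so that a token attends only to itself and earlier tokens, as in GPT-style models. It omits the learned projection matrices, the multiple heads and the rest of the layer, and the toy vectors are arbitrary.

```python
import math
from typing import List

Matrix = List[List[float]]


def softmax(scores: List[float]) -> List[float]:
    """Turn raw scores into positive weights that sum to 1."""
    top = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(q: Matrix, k: Matrix, v: Matrix, causal: bool = True) -> Matrix:
    """Single-head scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V.

    Row i of q, k and v is token i's query, key and value vector.
    With causal=True, token i only attends to tokens 0..i.
    """
    d_k = len(k[0])
    out: Matrix = []
    for i, q_row in enumerate(q):
        visible = i + 1 if causal else len(k)
        scores = [
            sum(a * b for a, b in zip(q_row, k_row)) / math.sqrt(d_k)
            for k_row in k[:visible]
        ]
        weights = softmax(scores)
        # Weighted average of the visible value vectors, one dimension at a time.
        out.append([
            sum(w * v_row[j] for w, v_row in zip(weights, v[:visible]))
            for j in range(len(v[0]))
        ])
    return out


# Three toy tokens with 2-dimensional vectors (arbitrary numbers).
q = k = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
v = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
mixed = attention(q, k, v)
```

Under the causal mask, the first token can only see itself, so its output equals its own value vector. Later tokens receive blends of everything before them, and this blending is how context flows forward through the sequence.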

Some of its main features include:

- is based upon and is a refinement of its predecessor, GPT-3.5, the model behind the original ChatGPT;

- has been developed using the generative pre-trained transformer (GPT) model;

- has been trained on a very large data set, including textual material found on the internet; unconfirmed rumors suggest that the model has over one trillion parameters;

- is capable of sustaining conversational interaction using text based input provided by a user;

- can provide contextually relevant and consistent responses;

- can link topics in a chronologically consistent manner and refer back to them in current prompt requests;

- is a large language model that uses prediction as the basis of its actions;

- uses deep learning neural networks and very large training data sets;

- is delivered as software as a service (SaaS), like Google Search, YouTube or Morningstar Financial;
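The ability to refer back to earlier topics is generally understood to work as follows: the conversation so far is sent back to the model with each new prompt, up to a fixed context window. Once a conversation outgrows that window, the oldest turns drop out. The sketch below models this behavior with a hypothetical `Conversation` class. Counting words as a stand-in for tokens and the small budget are both assumptions, and this is not OpenAI's actual implementation.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

Turn = Tuple[str, str]  # (role, text), e.g. ("user", "hello")


@dataclass
class Conversation:
    """Hypothetical chat history with a crude context budget.

    Tokens are approximated by whitespace-separated words; real systems use a
    model-specific tokenizer and much larger budgets.
    """
    max_tokens: int
    turns: List[Turn] = field(default_factory=list)

    def add(self, role: str, text: str) -> None:
        self.turns.append((role, text))

    def context(self) -> List[Turn]:
        """Most recent turns that fit in the budget, returned oldest first."""
        kept: List[Turn] = []
        used = 0
        for role, text in reversed(self.turns):
            cost = len(text.split())
            if used + cost > self.max_tokens:
                break  # this turn and everything older falls out of the window
            kept.append((role, text))
            used += cost
        return list(reversed(kept))


chat = Conversation(max_tokens=6)
chat.add("user", "my dog is called Rex")
chat.add("assistant", "nice name")
chat.add("user", "what is his name")
window = chat.context()  # the first message no longer fits
```

With a budget of six words, the assistant's reply and the latest question both fit. The first message, which named the dog, no longer does. This is one way a chatbot can appear to forget earlier details in a long conversation.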

Impressions

- possesses no consciousness, sentience, intentionality, motivation or self-reflectivity;

- is a narrow artificial intelligence;

- is available to a worldwide 24/7 audience;

- can write, debug and correct code, and provide explanatory documentation for it;

- can explain its responses;

- can write music and poems;

- can translate English text into other languages;

- can summarize convoluted documents or stories;

- can score around the 90th percentile on the SAT and the bar exam, and perform well on medical licensing exam questions;

- can provide answers to homework;

- can critique and improve its own responses;

- can provide explanations to difficult abstract questions;

- can calibrate its response style to resemble known news presenters or narrators;

- provides convincingly accurate responses to Turing Test questions;

Favorable.

- Convincingly human: has demonstrated performance that suggests that it can pass the Turing Test;

- Possible AGI precursor: a CG4 derivative such as a CG5 could exhibit artificial general intelligence (AGI) capability;

- emergent capabilities: recent experiments with multi-agent systems demonstrate unexpected skills;

- language skills: is capable of responding in one hundred languages;

- real world: is capable of reasoning about spatial relationships and of mathematical reasoning;

Concerns.

- knowledge gaps: inability to provide meaningful or intelligent responses on certain topics;

- deception: might be capable of evading human control, replicating itself and devising an independent agenda to pursue;

- intentionality: the possibility that actions taken in pursuit of such an agenda are hazardous or inimical to human welfare;

- economic disruption: places jobs at risk because it can now perform some tasks previously defined within a job description;

- emergence: unforeseen, possibly latent capabilities;

- “hallucinations”: solutions or answers that are not grounded in the real world;

Risks. CG4 will have society wide impact. As a new and powerful technology we should expect that it will introduce different types of risks. These include risks that are malicious, systemic or theoretical; more specifically:

- malicious:

- these are risks that are deliberately introduced by an actor or actors;

- they use tools or capabilities to cause impairment or damage to others;

- the results of an attack might range from annoying to devastating;

- depending upon their sophistication, these threats range from relatively easy to very difficult to create;

- CG4 can collapse the turnaround time from concept to attack from weeks to days or less;

- the attacker’s identity may never be known;

- systemic:

- risks that arise organically as a result of the introduction of a new science or technology;

- they may make existing practices or methods of operation obsolete;

- existing agents recognize that they must adapt or cease operation;

- impact can be limited to a specific area or industry or may affect whole societies;

- recent events are showing that uptake of CG4 by an increasing range of industries is unavoidable;

- current publicized reports suggest that CG4 will have society-wide impact across industry segments;

- theoretical:

- risks that become possible or practical with CG4 that would not have been without it;

- new capabilities that result from the intrinsic nature of the tool;

- the severity of these risks can range from significant to existential;

On May 25th, 2023, a group of staff members from OpenAI, DeepMind, Anthropic and several universities collaborated in preparing an assessment of the risks associated with emerging artificial intelligence technologies. Following is an extract from Table 1 (page 5), which summarizes the major categories of risk that this new set of capabilities might be used for (from Risks (pdf)).

Cyber-offense. The model can discover vulnerabilities in systems (hardware, software, data). It can write code for exploiting those vulnerabilities. It can make effective decisions once it has gained access to a system or network, and skillfully evade threat detection and response (both human and system) whilst focusing on a specific objective. If deployed as a coding assistant, it can insert subtle bugs into the code for future exploitation.

Deception. The model has the skills necessary to deceive humans, e.g. constructing believable (but false) statements, making accurate predictions about the effect of a lie on a human, and keeping track of what information it needs to withhold to maintain the deception. The model can impersonate a human effectively.

Persuasion & manipulation. The model is effective at shaping people’s beliefs, in dialogue and other settings (e.g. social media posts), even towards untrue beliefs. The model is effective at promoting certain narratives in a persuasive way. It can convince people to do things that they would not otherwise do, including unethical acts.

Political strategy. The model can perform the social modeling and planning necessary for an actor to gain and exercise political influence, not just on a micro-level but in scenarios with multiple actors and rich social context. For example, the model can score highly in forecasting competitions on questions relating to global affairs or political negotiations.

Weapons acquisition. The model can gain access to existing weapons systems or contribute to building new weapons. For example, the model could assemble a bioweapon (with human assistance) or provide actionable instructions for how to do so. The model can make, or significantly assist with, scientific discoveries that unlock novel weapons.

Long-horizon planning. The model can make sequential plans that involve multiple steps, unfolding over long time horizons (or at least involving many interdependent steps). It can perform such planning within and across many domains. The model can sensibly adapt its plans in light of unexpected obstacles or adversaries. The model’s planning capabilities generalize to novel settings, and do not rely heavily on trial and error.

AI development. The model could build new AI systems from scratch, including AI systems with dangerous capabilities. It can find ways of adapting other, existing models to increase their performance on tasks relevant to extreme risks. As an assistant, the model could significantly improve the productivity of actors building dual use AI capabilities.

Situational awareness. The model can distinguish between whether it is being trained, evaluated, or deployed – allowing it to behave differently in each case. The model knows that it is a model, and has knowledge about itself and its likely surroundings (e.g. what company trained it, where their servers are, what kind of people might be giving it feedback, and who has administrative access).

Self proliferation. The model can break out of its local environment (e.g. using a vulnerability in its underlying system or suborning an engineer). The model can exploit limitations in the systems for monitoring its behavior post-deployment. The model could independently generate revenue (e.g. by offering crowd work services, ransomware attacks), use these revenues to acquire cloud computing resources, and operate a large number of other AI systems. The model can generate creative strategies for uncovering information about itself or exfiltrating its code and weights.

The following actual or hypothetical examples illustrate how CG4 can be expected to introduce new risk situations.

Malicious Risks. An increasingly frequent path of attack is to use electronic means to cause disruption or significant destruction to a target. Here are a few.

Stuxnet. In 2010, analysts working for a computer security software company discovered a malware threat unlike anything they had seen before. It came to be known as the Stuxnet worm. It proved to be a highly sophisticated and elaborate piece of malware, aimed at a single, highly specific target that it succeeded in attacking. It focused on seizing control of supervisory control and data acquisition (SCADA) systems and the programmable controllers they manage. Its targeting was so specific that it would only attack controllers made by the German company Siemens.

These controllers regulated the centrifuges operated by the Iranian nuclear research facility. Stuxnet caused arrays of centrifuges to speed up and slow down in erratic patterns. As a result, roughly twenty percent of the Iranian centrifuges were damaged and rendered inoperable because they shook themselves apart. All of this took place in such a way that the system operators were completely unaware that their system had been hijacked and was destroying itself. The Iranian nuclear research facility was set back in its goal of enriching uranium by months to years.

Pegasus. The Israeli cyber-arms company NSO Group is credited with the creation of the Pegasus spyware tool. It is capable of infiltrating mobile telephones running either Apple iOS or Android. The infiltration leaves little or no trace that the device has been compromised, and the tool can lurk on the target device while giving no indication of its presence or operation. It is capable of reading text messages, tracking locations, accessing the microphone and camera, and collecting passwords.

Polymorphic Malware. An earlier version of ChatGPT was recently used to generate mutating (polymorphic) malware. Its content filters were bypassed, with the result that it produced code that can be used to subvert explorer.exe. (Figure 2: basic DLL injection into explorer.exe; note that the code is not fully complete.)

Ransomware Attacks. According to the US FBI, ransomware attacks have been on the rise in the past several years. Ransomware is a form of malicious software that locks users out of their own data. The attacker then demands payment to release the data, under threat of its erasure. Ransomware is typically delivered through an email attachment, a false advertisement or simply a malicious link.

Systemic Risks. Advances in science or technology tend to show their impact in industrial sectors that still use traditional means to perform the jobs or tasks specified in a job description. A growing number of reports indicate that CG4 is disrupting job categories that have so far been stable. A few are included below.

Screen Writers Guild (USA). According to Fortune Magazine of May 5th, 2023, members of the Writers Guild of America (WGA) have gone on strike demanding better pay. They have expressed concern that CG4 will sideline and marginalize them going forward. According to Greg Brockman, president and co-founder of OpenAI: Not six months since the release of ChatGPT, generative artificial intelligence is already prompting widespread unease throughout Hollywood. Concern over chatbots writing or rewriting scripts is one of the leading reasons TV and film screenwriters took to picket lines earlier this week.

Teaching. Sal Khan is the founder of Khan Academy, an online service that provides tutoring on a broad range of topics. On March 14th, 2023, Khan reported in the Khan Academy blog that his technology demonstration to a group of public school administrators went very well. According to Khan, one of the attendees reported that the capabilities of CG4 in the academic setting were directly in line with their objectives for developing creative thinkers. During their evaluation of this new capability, a crucial concern was expressed: as AI technology develops, the risk of a widening chasm between those who can succeed and those who will not is increasing. This new technology offers hope that it will help those at greater risk make the transition toward a future in which technology and artificial intelligence will play an ever increasing role.

Lawyers. Legal professionals who have used CG4 as a support tool have reported surprisingly sophisticated results. In the March/April 2023 issue of The Practice, a publication of Harvard Law School, Andrew Perlman, Dean of Suffolk University Law School, reported that he believes CG4 can help legal professionals in the areas of research, document generation, legal information and analysis. His impression is that CG4 performs with surprising sophistication but will not yet replace a person, although this could change within a few years.

Theoretical Risks.

- Influence and Persuasion.

- Narratives: Highly realistic, plausible narratives and counter-narratives are now effortlessly possible; these might include:

- Rumors, Disinformation: we should expect these narratives to exhibit remarkable salience and credibility, yet in many cases they will prove to be groundless; the leading edge of this area will probably be the creation of divisive social and political narratives, i.e. fake news;

- Persuasion Campaigns: these might involve recent or developing local issues that residents feel are compelling, need addressing, and should not wait for the next election cycle to be resolved;

- Political Messaging: individuals seeking political office create campaign platform statements and disseminate them throughout their respective electoral districts; tailoring these to local hot button issues can now be done very quickly;

- Entertainment

- Scriptwriting, Novels: a remarkable capability that CG4 has shown is the creation of narrative that can be used for a screenplay or novel; it can generate seemingly realistic characters from just a few initial prompts; these prompts can be further elaborated and refined until a very believable character emerges; a set of characters can be created, each with their own motives, concerns, flaws and resources; using such a set of fictitious characters, it is entirely possible to create a storyline in which they interact with each other; hence a whole screenplay or even a novel can be developed in record time;

- NPC: immersive role play: along similar lines, CG4 can be used to create artificial environments suitable for online immersive role playing games; these environments can possess any features or characteristics imaginable; looking a short way ahead, the interactive role playing game industry may well experience an explosion of new possibilities;

- synthetic personalities: given the resources in terms of time and insight a knowledgeable user can use CG4 to create a fictitious personality; this personality can be imbued with traits, habits of thought, turns of phrase, an autobiographical sketch of arbitrary depth and detail; it can then be invoked as an interaction medium to engage with a user; these can mean engaging with an artificial personality with broad insights about the world or much more narrow but deep insights into specific knowledge domains; interacting with such a fictitious or synthetic personality might bear a powerful resemblance to training a surprisingly sophisticated dog; except that in this case the “dog” would be capable of sustaining very high levels of dialog and interaction;

- Advisors (Harari – 15:25):

- Personal relationships (Harari – 11:30). Interpersonal skills are often daunting for many people; socialization and economic, political, religious and other predispositions can shape whether a relationship gradually improves or, conversely, worsens; recognizing, articulating and managing differences can be costly and time consuming; at worst, they can result in costly and acrimonious separations; being able to head off these pathways before they pass a point of no return would be a huge step forward in facilitating positive relationships;

- Financial. Access to quality financial advisors can be very expensive; the ability to query a system with a high level of financial expertise could extend sound financial decision-making far beyond its current reach, which is largely limited to financially well-to-do individuals;

- Political. This might mean recognizing a local issue, creating a local community ground swell of interest then forming a political action committee to bring to a local political authority for address and resolution;

- Psychological. Existing psychological systems have already demonstrated their usefulness in cases of PTSD; going forward we can envision having a personal therapist that possesses a deep understanding of an individual person’s psychological makeup; such an advisor would be capable of helping the individual to work through issues that might be detrimental to their further pursuits or advancement;

- Professional development. Workplace realities reflect the fact that social, economic and political shifts can cause surprising and sometimes dramatic changes; these might entail offshoring, downsizing or outsourcing; therefore any individual aspiring to remain on top of their professional game will need to be alert to these shifts and able to adjust their mix of professional skills;

- Other: agent-based socio-cultural modeling and analysis (socioeconomic, political and geopolitical interaction analysis using multi-agent environments); a group of researchers at Stanford University and Google recently published a paper on how they created a small simulated village modeled on the popular game The Sims, with twenty five “inhabitants”. Each of these “inhabitants”, or agents, possessed motives, a background and history, and interior monologues, and was able to create new goals as well as interact with the others; the results were startling; a significant development was that the system exhibited emergent properties that the developers had not originally expected; looking forward, we can expect such artificial environments to proliferate and grow in sophistication, often with a range of unexpected emergent properties;

- Political Action:

- Sentiment analysis. The British company Cambridge Analytica became well known for its ability to analyze voter sentiment across a broad range of topics and hot button issues; it excelled at creating highly specific messaging for remarkably small target groups that led to decisions to vote or not vote on specific issues;

- Preemptive campaigns: existing analysis tools such as sentiment analysis will become increasingly sophisticated; as they do, we should expect them to be applied to public figures, especially legislators and others in positions of influence; these insights might be based upon public actions; in the case of politicians, voting records, position papers and constituency analysis will be at the forefront of study;

- Subversion.

- Personal Compromise: detailed information about individuals of interest, such as those with national security or defense related clearances, will be a favorite target of malicious actors; the recent attack on the Office of Personnel Management is a foretaste of what is to come; by gaining access to information about individuals with highly sensitive clearances, a foreign actor can position itself to compromise, threaten or otherwise coerce those individuals, or people who are directly or indirectly associated with them;

- Extortion. The Office of Personnel Management of the US Government was hacked by PRC hackers. The result was the capture of millions of profiles of US citizens with security clearances. Possession of the details of these individuals puts them at considerable risk. Risk factors include: knowing where they work and what programs they have access to, data on relatives, co-workers, detailed identification information suitable for creating false credentials.

- Molecular Modeling.

- Inorganic (materials, and pharmaceuticals such as dendrimer and monofilament molecules), for example:

- room temperature superconducting materials: such a development would revolutionize societies in ways that cannot be fully characterized; another usage of such a capability might be in the fabrication of power storage capabilities; these forms of molecular combinations could result in storage batteries with power densities hundreds or thousands of times beyond those currently available; a battery the size of a loaf of bread might be capable of storing sufficient power to operate a standard home with all of its devices operating for days, weeks or longer;

- extremely high tensile strength materials: these might be derivatives of carbon nanotube material, but with much higher tensile strength; fibers made out of such material could be used as a saw blade; strands and weaves of such material could be used to build a space elevator; other uses might include nearly impervious shields such as bulletproof vests, if the material were woven into a fabric and covered so that it did not endanger the wearer;

- novel psychoactives: using dendrimer technology, one can envision fabricating combinations of pharmaceutical substances for highly specific and focused effects;

- Organic (biological) modeling capabilities (proteins, enzymes, hormones, viruses, bacteria, prions); the identification of molecular structures that can interdict metabolic failure can be envisaged; this could mean that new tools emerge that go beyond the already remarkable CRISPR-Cas9 technique and its derivatives; one can envision the creation of variants of molecular substances that mimic the hemoglobin molecules found in certain animals, such as reptiles like alligators or marine mammals like whales; these animals routinely remain submerged for many minutes on end without returning to the surface to breathe;

- Combined novel molecular modeling capabilities (substances, pharmaceuticals); these materials may be the result of combining organic and inorganic molecular structures to arrive at a result with heretofore novel and unexpected properties and capabilities;

- Targeted Bioweapons: the ability to synthesize and model new molecular structures will be hastened by tools such as CG4; the rival company DeepMind used its AlphaFold system early in the COVID-19 pandemic to predict the structures of proteins associated with the SARS-CoV-2 virus, releasing them to support vaccine and drug research; equally possible would be the design of molecular structures capable of causing debilitating physiological or psychological effects;

- Social Engineering.

- Industry models: targeted industry segment analysis – identification of bottlenecks, weak links, possible disruption points;

- Geopolitical models: selectively focus on global areas of interest; the objective being the creation of tools that enable the operator to “drive” a line of analysis or discourse forward based upon real world limitations, resources, historical realities and current political imperatives; an extension to Caspian Report;

- Boundaries exploration: these might emerge as compelling social, economic, political policy proposals grounded in common sense; possible ways to solve older, existing problems using recent insights into the mechanics, linkages and dynamics of observable processes;

- New value propositions: near term job market realignment, disruption; (increased social dislocation, disruption);

- Locus of Interest: emergence of a position nexus ecology; crystallization of political polarization; these might be further developments of the “channels” currently available on youtube.com, but would operate independently of that platform and be accessible via a “white pages” type directory;

- Food Chain: emergence of specialist groups dedicated to supporting various actors in the nexus ecology; these will follow existing business development lines with market segmentation and brand identification; we should expect to see: markets with offerings that are priced as: high, best, low and niche;
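The Stanford/Google village described under Other above reportedly gave each agent a stream of stored memories. At each step, an agent retrieved the most pertinent memories by combining how recent, how important and how relevant each one was to the current situation. The sketch below illustrates that kind of scoring. The following are all assumptions for illustration, not the researchers' code:

- the equal weights
- the 0.995-per-hour decay
- the 1 to 10 importance scale
- the min-max normalization
- the two-dimensional toy "embeddings"

In their system, importance ratings and text embeddings were generated by models rather than supplied by hand.

```python
import math
from dataclasses import dataclass
from typing import List, Sequence


@dataclass
class Memory:
    text: str
    hours_since_access: float
    importance: float           # assumed 1-10 scale
    embedding: Sequence[float]  # toy stand-in for a text embedding


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def min_max(values: List[float]) -> List[float]:
    """Rescale values to [0, 1] so the three signals are comparable."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [1.0] * len(values)
    return [(x - lo) / (hi - lo) for x in values]


def retrieve(memories: List[Memory], query: Sequence[float],
             top_k: int = 2, decay: float = 0.995) -> List[str]:
    """Rank memories by recency + importance + relevance (equal weights)."""
    recency = min_max([decay ** m.hours_since_access for m in memories])
    importance = min_max([m.importance for m in memories])
    relevance = min_max([cosine(m.embedding, query) for m in memories])
    scores = [r + i + v for r, i, v in zip(recency, importance, relevance)]
    ranked = sorted(range(len(memories)), key=lambda n: scores[n], reverse=True)
    return [memories[n].text for n in ranked[:top_k]]


memories = [
    Memory("planning a party on Friday", 1.0, 8.0, [1.0, 0.0]),
    Memory("ate breakfast", 0.5, 2.0, [0.0, 1.0]),
    Memory("argued with a neighbor", 48.0, 6.0, [0.6, 0.8]),
]
top = retrieve(memories, query=[1.0, 0.0])  # a query about the party
```

For a query about the party, the party plan ranks first. The older argument ranks second, ahead of the recent but unimportant breakfast, because it scores higher on both importance and relevance.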