Difference between revisions of "Darwin2049/chatgpt4 version03"


(2023.08.09: THIS IS NOW A BIT CLOSER... NEED TO GO THROUGH NOW AND )

(EVEN OUT ENTRIES )<br />

(MAKE BULLET POINT HEADINGS WHERE APPROPRIATE )<br />

(BRING LINKS AND REFERENCES IN )<br />

(INCLUDE LINKS - TRANSFER FROM VERSION02 TO VERSION03 )<br />

[[File:OPENAI.png|left|200px]]

'''''<SPAN STYLE="COLOR:BLUE">OpenAI - ChatGPT4. </SPAN>'''''

In what follows we attempt to address several basic questions about the rapid progress of artificial intelligence. There are several competing actors in this space, including OpenAI, DeepMind, Anthropic, and Cohere, and a number of other competitors are active in the artificial intelligence marketplace. For purposes of brevity, and because of the overlap among them, we limit our focus to ChatGPT4 (CG4). Further, we concentrate on several salient questions of safety, risk and prospects.

Specifically, we consider risks that are:

* '''''<SPAN STYLE="COLOR:BLUE">interfacing/access:</SPAN>''''' how will different groups interact with, respond to and be affected by it; might access modalities available to one group have positive or negative implications for other groups;

* '''''<SPAN STYLE="COLOR:BLUE">political/competitive:</SPAN>''''' how might different groups or actors gain or lose relative advantage; also, how might it be used as a tool of control;

* '''''<SPAN STYLE="COLOR:BLUE">evolutionary/stratification:</SPAN>''''' might new classifications of social categories emerge; were phenotypical bifurcations to emerge, how would they manifest themselves;

* '''''<SPAN STYLE="COLOR:BLUE">epistemological/ethical relativism:</SPAN>''''' how to reconcile ethical issues within a society and between societies; more specifically, might it provide solutions or results that are acceptable to one group but unacceptable to another; recent attention has been drawn to evidence that an LLM such as CG4 may begin to exhibit '''''<SPAN STYLE="COLOR:BLUE">[https://www.marktechpost.com/2023/08/13/this-ai-research-from-deepmind-aims-at-reducing-sycophancy-in-large-language-models-llms-using-simple-synthetic-data/ sycophancy]</SPAN>''''' in its interactions with a user, even when the value stance of the user is equivocal;

'''''<SPAN STYLE="COLOR:BLUE">Synthesis.</SPAN>''''' Responding to these questions calls for some baseline information and insights about the issues that this new technology entails. We propose to look at:

* what some of the basic concepts are that underpin this new development;

* what sentiment is being expressed about it by knowledgeable observers;

* what basic technology or paradigmatic theories enable its behavior;

* what risks various communities might face as a result of its introduction;

* how those risks might manifest themselves;

* what insights were used to develop this synthesis;

'''''Terms.'''''

* GPT3.

* GPT3.5.

* GPT4.

* ChatGPT4.

* Deep Learning.

* Large Language Model.

* Generative Pre-trained Models.

* Neural Network.

* Hidden Layer.

* Training Data.

* Parameters.

* Emergence.

* Alignment.

* Autonomous Agent.

* Theory of Mind.

* Consciousness.

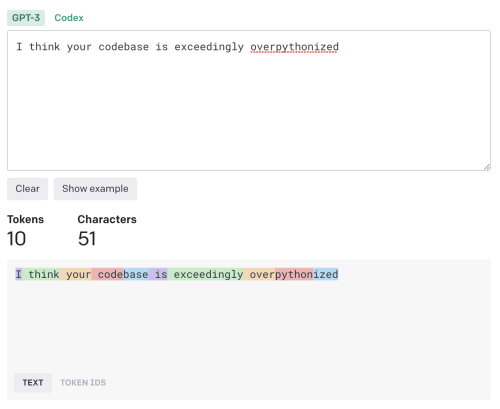

* Tokens.

* Weights.

CG4 has demonstrated capabilities that represent a significant leap forward in overall capability and versatility beyond what has gone before. An attempt to assess prospective risks suggests reviewing these recent impressions again at a later date, as more reporting and insights come to light.

CG4 has already demonstrated that new and unforeseen risks are tangible; in some instances novel and unforeseen capabilities have been reported.

It is with this in mind that we attempt here to offer an initial profile or picture of the risks that we should expect to see with its broader use.

By way of addressing this rapidly expanding topic we offer our summary along the following plan of discourse:

* '''''<SPAN STYLE="COLOR:BLUE"> Overview and Impressions: </SPAN>'''''

** what has emerged so far; some initial impressions are listed;

** next are some caveats that have been derived from these impressions;

* '''''<SPAN STYLE="COLOR:BLUE"> Theory of Operation: </SPAN>''''' for purposes of brevity a thumbnail sketch of how CG4 performs its actions is presented;

** included are some high level diagrams;

** also included are links to several explanatory sources; these sources include articles and video content;

* '''''<SPAN STYLE="COLOR:BLUE"> Risks: </SPAN>''''' our summary then focuses on risks that are viewed as:

** '''''<SPAN STYLE="COLOR:RED"> systemic: </SPAN>''''' these are inherent in the natural process of ongoing technological and sociological advance;

** '''''<SPAN STYLE="COLOR:RED"> malicious: </SPAN>''''' who the known categories of actors are; how they might use this new capability;

** '''''<SPAN STYLE="COLOR:RED"> theoretical: </SPAN>''''' possible new uses that have not heretofore been possible;

* '''''<SPAN STYLE="COLOR:BLUE"> Notes, References:</SPAN>''''' we list a few notable portrayals of qualitative technological or scientific leaps;

'''''<SPAN STYLE="COLOR:BLUE">Overview. </SPAN>''''' Early on, some observers were favorable while voicing moderate caution; others were guarded, or expressed sentiments ranging from caution to serious fear.

'''''<SPAN STYLE="COLOR:BLUE">Several notable voices expressed optimism and interest in seeing how events unfold. </SPAN>'''''

* '''''<SPAN STYLE="COLOR:BLUE"> Dr. Jordan Peterson. </SPAN>''''' During his discussion with [https://www.youtube.com/watch?v=S_E4t7tWHUY&t=1726s&ab_channel=JordanBPeterson Brian Roemmele] many salient topics were covered. Of note were some germane capabilities such as:

** super prompts; Roemmele described how he directs CG4 to focus specifically upon the topic that he wishes to address and to omit the usual disclaimers and preamble information that CG4 is known to issue in response to a query;

** discussions with historical figures; they posited that it is possible to engage with CG4 such that it can be directed to respond to queries as if it were a specific individual; the names of Milton, Dante, Augustine and Nietzsche were mentioned. Peterson also mentions that a recent project of his is to enable a dialog with the King James Bible;

** among the many interesting topics that their discussion touched on was the perception that CG4 is more properly viewed as a 'reasoning engine' rather than just a knowledge base; essentially it is a form of intelligence amplification;

* '''''<SPAN STYLE="COLOR:BLUE"> Dr. Alan Thompson. </SPAN>''''' Thompson focuses his video production on recent artificial intelligence events. His recent videos have featured '''''<SPAN STYLE="COLOR:BLUE">[https://www.youtube.com/watch?v=_coWqL25uHU&ab_channel=DrAlanD.Thompson CG4 capabilities via an avatar that he has named "Leta".] </SPAN>''''' He has been tracking the development of [https://lifearchitect.ai/models/ artificial intelligence and large language models] for several years.

** Leta is an avatar that Dr. Thompson regularly uses to illustrate how far LLMs have progressed. In a recent post his interaction with [https://www.youtube.com/watch?v=J1Gar79POhs&ab_channel=DrAlanD.Thompson Leta] demonstrated the transition in capability from the prior underlying system (GPT3) to the newer one (GPT4).

** Of note are his presentations that characterize where artificial intelligence is at the moment and his predictions of when it might be reasonable to expect to witness the emergence of artificial general intelligence.

** A recent post of his titled [https://www.youtube.com/watch?v=gAeBIc3iQTI&ab_channel=DrAlanD.Thompson In The Olden Days] portrays how many of society's current values, attitudes and solutions will be viewed as surprisingly primitive in coming years.

* '''''<SPAN STYLE="COLOR:BLUE"> Dr. Neil deGrasse Tyson. </SPAN>''''' On a recent episode of [https://www.youtube.com/watch?v=MUOVnIbTZeA&t=631s&ab_channel=Valuetainment Valuetainment] Dr. Tyson joined host Patrick Bet-David to discuss CG4. '''''<SPAN STYLE="COLOR:BLUE"> A lot of people in Silicon Valley will be out of a job. </SPAN>'''''

** A salient observation that was brought forward was that the state of artificial intelligence at this moment, as embodied in the CG4 system, is not something to be feared.

** Rather, his stance was that it has not thus far demonstrated the ability to reason about aspects of reality in ways that no human has yet done.

** He cites a hypothetical example in which he takes a vacation. While on the vacation he experiences an engaging meeting with someone who inspires him. The result is that he comes up with totally new insights and ideas. He then posits that CG4 would remain frozen, able to offer only insights comparable to those that he was able to offer prior to his vacation. So CG4 would have to "play catch up". By extension it will always be behind what humans are capable of.

'''''<SPAN STYLE="COLOR:BLUE"> Caution and concern were expressed by: </SPAN>'''''

* '''''<SPAN STYLE="COLOR:BLUE"> Dr. Bret Weinstein. </SPAN>''''' Dr. Weinstein has been a professor of evolutionary biology. His most recent teaching post was at The Evergreen State College in Washington.

** In a recent interview on Valuetainment, Patrick Bet-David and Weinstein discussed how quickly the area of artificial intelligence has been progressing. Bet-David asked Weinstein to comment on where the field appeared to be at that moment and where it might be going. In response Weinstein's summary was that [https://www.youtube.com/watch?v=ITAG4H3heZg&ab_channel=Valuetainment "we are not ready for this"].

** Their discussion touched on the fact that '''''<SPAN STYLE="COLOR:BLUE">CG4 had shown its ability to pass the Wharton School of Business MBA final exam as well as the United States Medical Licensing Exam.</SPAN>'''''

** He makes the case that the current level of sophistication that CG4 has demonstrated is already very problematic.

** By way of looking forward he articulates the prospective risk of how we as humans might grapple with a derivative of CG4 that has in fact become sentient and conscious.

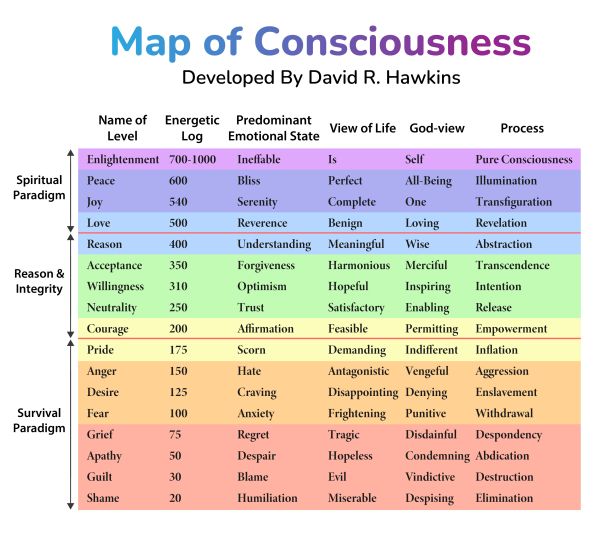

[[File:Consciousnessmap.jpg|left|600px]]

* '''''<SPAN STYLE="COLOR:BLUE"> Dr. Joscha Bach. </SPAN>''''' Bach is a researcher on the topic of artificial consciousness. During an interview with Lex Fridman he focuses the discussion on [https://www.youtube.com/watch?v=YDkvE9cW8rw&ab_channel=LexClips whether or not a derivative of CG4 could become conscious.] '''''<SPAN STYLE="COLOR:BLUE">Bach makes the case that CG4 is not conscious. </SPAN>'''''

** He states the position that a large language model would not be an effective way to arrive at an entity that possessed self consciousness, sentience, motivation and intentionality.

** Regarding the possibility that CG4 or a derivative might become conscious, his position was that the very basis of the Large Language Model would not be an appropriate approach to creating any of the processes that appear to underpin human consciousness. His premises include that large language models are more like the mythical golem of seventeenth-century Eastern European literature. Crucially, LLMs have no self-reflexivity.

** They simply execute tasks until a goal is met, which again points to the position that "the lights are on but the doors and windows are open and there is nobody home". It would be better to start with self-organizing systems rather than predictive LLMs. In the discussion he points out that the system would have to discover some form of self-model and recognize that it was modeling itself.

** Bach suggests that, instead of using an LLM to attempt to arrive at a real-time self-reflective model of consciousness comparable to human consciousness, we should start from scratch. Interestingly, however, even given a willingness to pursue this path he advocates caution.

** He adds that in order to pursue such a path it would be necessary to proceed from an operating environment based upon some form of representation of ground truth. Otherwise a situation could develop in which no one really knows who is talking to whom. Existing LLMs offer no comparable world-model within which an artificial consciousness can embed itself.

* '''''<SPAN STYLE="COLOR:BLUE"> Dr. Alex Karp (CEO - Palantir). </SPAN>''''' Dr Karp is the CEO of a data mining company. Palantir has focused on [https://www.cnbc.com/2019/01/23/palantir-ceo-rips-silicon-valley-peers-for-sowing-seeds-of-division.html providing analysis services to a range of national security and national defense organizations]. The company has developed its relationship with these agencies over two decades. It espouses the crucial role that advanced technology plays in the safety and security of the nation as well as in providing global stability in several different venues.

** He has put '''''<SPAN STYLE="COLOR:BLUE"> a spotlight on the fact that many Silicon Valley technology companies shy away from supporting US national security.</SPAN>''''' This despite the fact that their successes stem directly from the fact that the US has provided the economic, political, educational, legal and military infrastructure that has enabled them to incorporate, grow and develop.

** Given Palantir's close relationships with national security and national defense organizations, it has a far greater sense of the realities and risks of not forging ahead with all possible speed to equip the nation's defenses and to support those charged with seeing to the nation's security.

'''''<SPAN STYLE="COLOR:BLUE">Other notable voices have expressed sentiments ranging from caution to alarm. </SPAN>'''''

* '''''<SPAN STYLE="COLOR:BLUE"> Eliezer Yudkowsky. </SPAN>''''' Yudkowsky is a polymath who has been following the development of LLMs for several years. Since the release of CG4 he has frequently spoken out publicly about the risk that humanity may face extinction should an artificial general intelligence emerge from current research.

** During an interview with Lex Fridman he expresses fear that the field of artificial intelligence is progressing so rapidly that humans could find themselves facing [https://www.youtube.com/watch?v=jW2ihBRzLxc&ab_channel=LexClips the prospect of an extinction event]. His concern is that it could evolve with such rapidity that we as humans would be unable to assess its actual capacities and as such be unable to make reliable determinations as to whether it was presenting its authentic self or a dissembling version of itself. '''''<SPAN STYLE="COLOR:BLUE"> His position is that artificial intelligence will kill us all. </SPAN>'''''

** He has made several [https://www.youtube.com/watch?v=Yd0yQ9yxSYY&ab_channel=TED presentations regarding the potential downsides and risks] that artificial intelligence might pose. His specific concerns are that a form of intelligence could crystallize as a result of normal operation in one or another of the current popular systems. These generally are considered to be the large language models such as ChatGPT4.

** The DeepMind system is believed to be comparably sophisticated. The Meta variant known as Llama is also of sufficient sophistication that an unexpected shift in capability, arising from some unforeseen emergent behavior, could escape the control of its human operators.

** A recent development at the Meta artificial intelligence lab resulted in the pausing of an experiment in which an emergent capability appeared. Two instances of the AI seemed to be exchanging messages using a private language that they had just developed.

* '''''<SPAN STYLE="COLOR:BLUE"> Elon Musk. </SPAN>''''' Musk was an early investor in OpenAI when it was still a private startup.

** He has tracked development of artificial intelligence both in the US and at DeepMind in the UK. He has recently enlisted 1100 other prominent personalities and researchers to sign a petition to be presented to the US Congress regarding the risks and dangers of artificial intelligence.

** His observation is that government regulation has always followed a major adverse or tragic development. In the case of artificial intelligence his position is that the pace of development is such that a singularity moment is quickly approaching.

** It is at that moment that humanity may discover that it has '''''<SPAN STYLE="COLOR:BLUE"> unleashed a capability that it is unable to control.</SPAN>''''' He regards '''''<SPAN STYLE="COLOR:RED">[https://www.youtube.com/watch?v=a2ZBEC16yH4&ab_channel=FoxNews unwittingly unleashing an AGI as a very serious risk]. </SPAN>'''''

* '''''<SPAN STYLE="COLOR:BLUE"> Gordon Chang. </SPAN>''''' Chang says that the US must forge ahead with all possible speed and effort to maintain its lead against the PRC in the area of artificial intelligence. '''''<SPAN STYLE="COLOR:BLUE"> Chang asserts that the [https://www.youtube.com/watch?v=_bUwiqQFRxc&ab_channel=FoxBusiness PRC culture, values and objectives are adversarial] </SPAN>''''' and that it will use whatever means necessary to achieve a commanding edge and dominance with this capability in all of its geopolitical endeavors. His earlier public statements have been very explicit that '''''<SPAN STYLE="COLOR:BLUE">the West faces an existential crisis from the CCP and that it will use any and all means, including artificial intelligence, to gain primacy. </SPAN>'''''

'''''<SPAN STYLE="COLOR:BLUE">Indirect alarm has been expressed by several informed observers. </SPAN>'''''

In several cases highly knowledgeable individuals have expressed concern about how the PRC has geared up to become the world leader in artificial intelligence. Their backgrounds range across journalism, diplomacy, the military and Big Tech.

Some observers have explicitly suggested that the role of artificial intelligence will be crucial going forward. Their pronouncements, however, preceded the release of such powerful tools as GPT3 and GPT4. Following are several individuals who have been raising attention or sounding the alarm about the risk that external geopolitical actors pose. We should note in reviewing them that, for those who watch the PRC and CCP, the risks are clear, imminent and grave.

* '''''<SPAN STYLE="COLOR:BLUE"> [https://www.wikiwand.com/en/Kai-Fu_Lee Kai-Fu Lee.] </SPAN>''''' He is a well known and respected expert in the area of artificial intelligence, and is known to be leading the charge to make the PRC a world-class leader in AI, an AI superpower.

** He leads [https://www.wikiwand.com/en/Sinovation_Ventures Sinovation Ventures], a venture capital firm with the charter to launch five successful startups per year.

** Recent pronouncements have suggested that advances in artificial intelligence can be co-operative rather than adversarial and confrontational. Events in the diplomatic sphere suggest otherwise.

** His efforts provide the gestation environment needed to launch potential billion-dollar concerns.

* '''''<SPAN STYLE="COLOR:BLUE"> Bill Gertz. </SPAN>''''' Bill Gertz is an author who has published several books that focus on technology and national security. A recent book titled "Deceiving the Sky" warns of a possible preemptive action on the part of the PRC. '''''<SPAN STYLE="COLOR:BLUE">His work details how the CCP has launched a detailed, highly focused set of actions that will enable it to marginalize and neutralize the current "hegemon", i.e. the USA. </SPAN>'''''

** He posits that the US could find itself at a serious disadvantage in a contest with the PLA and the PLAN.

** By way of clarification he points out that the leadership of the CCP has been building out an increasingly sophisticated military which it intends to use to modify, by force if necessary, the global geopolitical order.

** Additionally, other statements, such as that made explicitly by [https://www.youtube.com/watch?v=c8q6P_HfIBE&ab_channel=GlobalNews President Vladimir Putin] of the Russian Federation, state clearly that control of artificial intelligence will be a make or break development for whatever nation-state reaches the high ground first.

* '''''<SPAN STYLE="COLOR:BLUE"> Dr. Graham Allison. </SPAN>''''' Dr Allison is a professor at Harvard University. His 2017 book is titled Destined for War. Dr Allison places front and center the reality that only a minority of power transitions have been peaceful. '''''<SPAN STYLE="COLOR:BLUE">Of sixteen power transitions twelve have been resolved through war.</SPAN>'''''

** In this volume he develops the position that there have been sixteen historical instances where a reigning hegemonic power was challenged by a newcomer power for the role of dominant hegemonic power.

** Of those sixteen only four were resolved in a peaceful manner. The rest ended in armed conflict.

** In several chapters Dr. Allison details how the leadership of the CCP has focused the economic development of the PRC. He presents detailed charts and graphs that clarify the importance of not only national GDP numbers but also the crucial importance of purchasing power parity (PPP).

** His thesis takes the direction that the PRC has an innate advantage due to its far larger population base.

** He shows how a pivot point will be reached at which the PPP of the PRC will exceed that of the USA. When this happens, the economy of the PRC will a) be larger than that of the USA and b) be able to wield greater political and geopolitical influence worldwide. It is during this period of rebalancing that the time of maximum risk will crystallize.

* '''''<SPAN STYLE="COLOR:BLUE"> Amb. (ret.) Dr. Michael Pillsbury. </SPAN>''''' Offers deep, lucid insight into CCP and PRC doctrine, philosophy and world view (he is fluent in Mandarin).

** Dr. Pillsbury details how '''''<SPAN STYLE="COLOR:BLUE">the CCP has mobilized the entire population of the PRC to prepare to seize the high ground in science and technology - through any means necessary. </SPAN>'''''

** His publication The Hundred-Year Marathon goes into great detail on how a succession of CCP leaders has been gradually repositioning the PRC to be a major global competitor. He makes very clear that the PRC is a command economy comparable to how the former USSR functioned.

** He clarifies how the CCP leadership has focused its thinking to position itself to displace what it views as the "reigning hegemon - the USA". A study of this work makes very clear that the CCP leadership takes very seriously its charter to become the world's global leader in all things.

** A list of crucial science and technology fields is presented, showing that the CCP plans to own and control anything that matters. That the PRC is an authoritarian society is made very clear as well.

** Dr. Pillsbury provides background and context into the values and mindset of the current CCP leader, Xi Jinping. Once there is fuller recognition of just how implacably focused this leader is, there might be a sea change in how the US and the West refocus their efforts to meet this growing challenge.

* '''''<SPAN STYLE="COLOR:BLUE"> Brig. Gen (ret.) Robert Spalding III. </SPAN>''''' Provides a catalog of insightful military risks and concerns.

** Stealth War: details how the CCP has directed its various agencies to identify and target US vulnerabilities; he clarifies that the CCP will use '''''<SPAN STYLE="COLOR:BLUE">unrestricted warfare, i.e. warfare through any means necessary, to accomplish its goals. </SPAN>'''''

** The CCP is the main, existential threat: in chapter after chapter he details how the CCP uses intellectual property theft to obtain the actual know-how and detailed design information needed to replicate a useful new high-tech or scientific breakthrough, thus saving itself years and billions of dollars;

*** it uses American financial markets to raise money to develop its own military base;

*** it uses espionage to identify, target and acquire American know-how, technology and science for its own advantage;

*** debt trap diplomacy: several countries belatedly came to recognize the dangers of the Belt and Road initiative; the PRC approach meant appearing to offer a great financial or infrastructure deal to a third world country in exchange for access to that country's infrastructure; when the country found itself struggling to meet the terms of the agreement it discovered that the CCP would unilaterally commandeer some part of the country's infrastructure as a form of repayment;

*** unfair trade practices: such as subsidized shipping for any and all products made in the PRC, while trading partners are faced with import tariffs;

*** trading partner surrender: it also demands that the Western partner share with it any and all practices and lessons learned by the Western partner;

*** espionage: this is commonly used against trade partners to identify, target and acquire new or promising technologies or scientific advancements;

*** infiltration of American society with subversive CCP institutions such as Confucius Institutes, which are front organizations that serve as collection points for espionage activities;

* '''''<SPAN STYLE="COLOR:BLUE"> Dr. Alina Chan & Matt Ridley published [https://www.wikiwand.com/en/Viral:_The_Search_for_the_Origin_of_COVID-19 a book titled VIRAL in 2021] </SPAN>'''''.

Though subsequent [https://www.youtube.com/watch?v=FEh5JyZC218&ab_channel=JordanBPeterson investigative reporting] did not directly address the question of how AI tools like DeepMind or CG4 might be used to fabricate a novel pathogen, it has made clear that these tools can definitely be employed to drive research on the creation of extremely dangerous pathogens.

** They provided a deeply insightful chronological account of the genesis and progression of the COVID-19 pandemic.

** Dr Chan and Mr Ridley provide a detailed and extremely well documented case that draws attention directly to the influence that the CCP has had with the World Health Organization (WHO). It then used that influence to deflect attention away from its own role by claiming that the COVID-19 outbreak happened as a result of zoonotic transmission.

** The CCP leadership attempted to build the case that the outbreak happened as a result of mishandling of infected animals at a "wet market". A "wet market" is an open air facility that specializes in the sale of live exotic animals. These animals are typically offered for personal consumption. Some are presented because of claims of their beneficial health effects.

** They go on to describe how the CCP oversaw misinformation regarding the virus's pathogenicity and infectivity. Their account shows that they had a number of extremely insightful contributors working with them.

** Through their efforts the team was able to show how the Wuhan Institute of Virology (WIV) attempted to obscure crucial insight about the COVID-19 virus.

** Crucial insights that are offered include the fact that genetically modified mice were used in a series of experiments. These genetically modified or chimeric mice had DNA coding for human airway tissue spliced into their genome.

** The following additional notes are included as a means to draw attention to the reality of achieving advances in biotechnology at a rate outpacing what has already happened with the SARS-CoV-2 virus.

*** '''''<SPAN STYLE="COLOR:RED">Passaging: </SPAN>''''' The technique called "passaging" is a means of increasing the infectivity of a particular pathogen through targeted rapid evolution. It is used to introduce a pathogen, in this case a virus, into a novel environment. This means that the virus has no "experience" of successfully lodging itself into the new organism.

*** '''''<SPAN STYLE="COLOR:RED">Chimeric organisms are used: </SPAN>''''' Initially a target set of organisms, in the case of SARS-CoV-2 chimeric mice, is exposed to the intended pathogen.

*** '''''<SPAN STYLE="COLOR:RED">Chimeric offspring: </SPAN>''''' several generations of modified mice were used to incorporate genetic information using human DNA; specifically DNA coding for human airway passage tissue;

*** '''''<SPAN STYLE="COLOR:RED">Initial Infection: </SPAN>''''' These mice were then inoculated with the SARS-CoV-2 virus; initially they showed little reaction to the pathogen.

*** '''''<SPAN STYLE="COLOR:RED">Iterative Infections were performed: </SPAN>''''' These mice produced offspring which were subsequently inoculated with the same pathogen;

*** '''''<SPAN STYLE="COLOR:RED">Directed Evolution via passaging: </SPAN>''''' Iterations of this process resulted in a SARS-CoV-2 virus that had undergone several natural mutation steps and was now so dangerous that its handling required special personal protection equipment and very highly secure containment work areas;

*** '''''<SPAN STYLE="COLOR:RED">Increasing infectivity: </SPAN>''''' Iterative passaging increased the infectivity and pathogenicity of the virus as expected;

*** '''''<SPAN STYLE="COLOR:RED">Highly contagious and dangerous: </SPAN>''''' Ultimately the infectivity and pathogenicity of the mutated virus reached a stage where it was considered highly infective and extremely dangerous;

*** '''''<SPAN STYLE="COLOR:RED">Accidental handling: </SPAN>''''' Some kind of release occurred such that the extremely dangerous virus could successfully spread via aerosol delivery;

*** '''''<SPAN STYLE="COLOR:RED">Only the WIV could do this: </SPAN>''''' Ongoing reporting and investigative work has conclusively demonstrated that the WIV was the source of the COVID-19 pandemic;

*** '''''<SPAN STYLE="COLOR:RED">National Lockdown: </SPAN>''''' Of note is the extremely curious fact that the CCP imposed a nationwide lockdown that went into effect 48 hours after being announced.

*** '''''<SPAN STYLE="COLOR:RED">With "leaks": </SPAN>''''' The CCP imposed lockdown measures which in some cases proved to be extreme.

*** '''''<SPAN STYLE="COLOR:RED">International spreading was allowed: </SPAN>''''' Further puzzling behavior on the part of the CCP is the fact that international air travel out of the PRC continued for one month after the lockdown.

* '''''<SPAN STYLE="COLOR:BLUE"> Dr Eric Li. </SPAN>''''' [https://www.ted.com/talks/eric_x_li_a_tale_of_two_political_systems?language=en CCP Values Ambassador.] Dr Li is a Chinese national. He was educated in the US at the University of California at Berkeley and later at Stanford University. His PhD is from Fudan University in Shanghai, China. He has become a venture capitalist and has focused his resources '''''<SPAN STYLE="COLOR:BLUE"> on supporting the growth and development of [https://www.wikiwand.com/en/Eric_X._Li#Business_ventures PRC high technology companies.] </SPAN>'''''

** During a TED Talk presentation Dr Li made the case that there are notable value differences between the Chinese world view and that typically found in the West.

** In his summary of value comparisons he builds the position that the Chinese model works in ways that Western observers claim that it does not. He champions the Chinese "meritocratic system" as a means of managing public affairs.

'''''<SPAN STYLE="COLOR: | '''''<SPAN STYLE="COLOR:BLUE">-CAVEATS-</SPAN>'''''<br /> | ||

The above impressions by notable and knowledgeable observers in the field of artificial intelligence and geopolitics are a very small, unscientific sampling of positions. | |||

From the few observations listed above we reach the tentative conclusion that several factors should condition our synthesis going forward. These include the premises that CG4: | |||

[[File:Exa_scale_el_capitain.jpg|left|450px]] | |||

* '''''<SPAN STYLE="COLOR:BLUE">is inherently dual use technology.</SPAN>''''' This should always be kept clearly in mind. The prospect that totally unforeseen and unexpected uses will emerge must be accepted as the new reality. Like any new capability it will be applied toward positive and benign outcomes, but we should not be surprised when adverse and negative uses emerge. Hopes of only positive outcomes are hopelessly unrealistic. | |||

* '''''<SPAN STYLE="COLOR:BLUE">can be viewed as a cognitive prosthetic (CP). </SPAN>''''' It possesses no sense of self, sentience, intentionality or emotion. Yet it has demonstrated itself to be a powerful tool for expression or problem solving. It is possibly the next step forward beyond the invention of symbolic representation through writing. | |||

* '''''<SPAN STYLE="COLOR:BLUE">has shown novel and unexpected emergent capabilities. </SPAN>''''' These instances of emergent behavior typically were not foreseen by CG4's designers. However, although they take note of this development, they do not support the position that sentience, intentionality, consciousness or self reference is present. The lights certainly are on but the doors and windows are still open and there is still nobody home. | |||

* '''''<SPAN STYLE="COLOR:BLUE">operates from within a von Neumann hosting. </SPAN>''''' As currently accessed, it has been developed and is hosted on classical computing equipment. Despite this fact it has shown a stunning ability to provide context sensitive and meaningful responses to a broad range of queries. However, its fundamental modeling approach has shown itself to be intrinsically prone to actions that can intercept or subvert its intended agreeable functionality. To what extent efforts to solve this "alignment" problem will succeed remains to be seen. As training data sets grew in size, processing power demands grew dramatically. Consequently the more sophisticated LLMs such as CG4 and its peers have required environments that are massively parallel and operate at state of the art speeds. In order to keep training times within acceptable limits, some of the most powerful environments have been brought into play. Moving to still larger training data sets while keeping training times acceptable may call for hosting on exa-scale computing environments such as the El Capitan system or one of its near peer processing ensembles. | |||

* '''''<SPAN STYLE="COLOR:BLUE">CGX-Quantum will completely eclipse current incarnations of CG4. </SPAN>''''' Quantum computing devices have reportedly demonstrated the ability to solve certain specialized problems in seconds or minutes that classical von Neumann computing machines would need decades, centuries or even millennia to solve. | |||

[[File:IBMQ2.jpg|right|500px]] | |||

* '''''<SPAN STYLE="COLOR:BLUE">Phase Shift.</SPAN>''''' Classical physics describes how states of matter possess different properties depending upon their energy state or environment. Thus on the surface of the earth we can experience water vapor in the atmosphere, the wetness of liquid water and the solidity of an iceberg. | |||

These all consist of the same compound, H2O. Yet properties found in one state, or phase, bear little or no resemblance to those in the subsequent state. We should expect to see an evolution comparable to what happens when a gas condenses to a liquid state, and then when that liquid solidifies into an object that can be wielded in one's hand. When systems such as DeepMind or CG4 and its derivatives are re-embodied in a quantum computing environment heretofore unimaginable capabilities will become the norm.<br /> | |||

'''''<SPAN STYLE="COLOR:BLUE">-RISKS-</SPAN>'''''<br /> | |||

The following collects these reactions, which partition into questions of promise and risk. The main risk categories are: systemic, malicious and theoretical. | |||

* '''''<SPAN STYLE="COLOR:BLUE">Systemic. </SPAN>'''''These risks arise innately from the emergence and adoption of new technology or scientific insights. | |||

During the early years of private automobile usage the risk of traffic accidents was very low because very few automobiles were in private hands. As they began to proliferate, traffic accident risks escalated. Ultimately civil authorities were obliged to act to regulate their use and ownership. | |||

As some observers have pointed out, regulation usually occurs after there has been an unfortunate or tragic event. The pattern can be seen in civil aviation and later in control and usage of heavy transportation or construction equipment. In each case training became formalized and licensing became obligatory for airplane ownership and usage, trucking or heavy construction equipment. | |||

* '''''<SPAN STYLE="COLOR:BLUE">Malicious.</SPAN>''''' History is littered with examples of how a new scientific or technological advance was applied in ways not intended by the inventor. The Montgolfier hot air balloons were considered an entertaining novelty. The use of balloons during World War One as surveillance and attack platforms cast a new and totally different light on their capabilities. We should expect the same lines of development with CG4; its peers and derivatives should be considered no different. | |||

* '''''<SPAN STYLE="COLOR:BLUE">Theoretical. </SPAN>'''''CG4 has shown itself to be a powerful cognitive appliance or augmentation tool. Given that it is capable of going right to the core of what makes humans the apex predator we should take very seriously the kinds of unintended and unexpected ways that it can be applied. This suggests considerable caution. | |||

* '''''<SPAN STYLE="COLOR:BLUE">Recent Reactions. </SPAN>'''''Since the most recent artificial intelligence systems swept over the public consciousness, sentiment has begun to crystallize into three general types: voices of enthusiastic encouragement, cautious action and urgent preemption. | |||

* '''''<SPAN STYLE="COLOR:BLUE">Enthusiastic Encouragement.</SPAN>''''' Several industry watchers have expressed positive reactions to the availability of CG4 and its siblings. Their position has been that these are powerful tools for good and that they should be viewed as means that illuminate the pathway forward to higher standards of living and human potential. | |||

* '''''<SPAN STYLE="COLOR:BLUE">Cautious Action. </SPAN>'''''Elon Musk and eleven hundred knowledgeable industry observers or participants signed an open letter urging caution and a pause in the development of the most powerful artificial intelligence systems. They voiced concern that the potential usage of these new capabilities can have severe consequences. They also expressed concern that the rapid pace of adoption of artificial intelligence tools can very quickly lead to job losses across a broad cross section of currently employed individuals. This topic is showing itself to be dynamic and fast changing. Therefore it merits regular review for the most recent insights and developments. In recent weeks and months other sources have signaled that the use of this new technology will make a substantial beneficial impact on their activities and outcomes. They therefore wish to see it advance as quickly as possible. Not doing so would place the advances made in the West and the US at risk, which could mean foreclosing on having the most capable system possible to use when dealing with external threats such as those that can be posed by the CCP. | |||

* '''''<SPAN STYLE="COLOR:BLUE">Urgent Preemption. </SPAN>'''''Those familiar with geopolitics and national security hold the position that any form of pause would be suicide for the US because known competitors and adversaries will race ahead to advance their artificial intelligence capabilities at warp speed, in the process obviating anything that the leading companies in the West might accomplish. They argue that any kind of pause cannot even be contemplated. Some voices go so far as to point out that deep learning systems require large data sets to be trained on. They note that the PRC has a population of 1.37 billion people and that, according to a recent report, there were 827 million WeChat users in the PRC in 2023. Further, the PRC makes use of data collection systems such as WeChat and TenCent. In each case these are systems that capture the messaging information from hundreds of millions of PRC residents on any given day. They also capture a comparably large amount of financial and related information each and every day. Topping the list of enterprises that gather user data we find: | |||

** '''''<SPAN STYLE="COLOR:BLUE">WeChat. </SPAN>'''''According to [https://www.bankmycell.com/blog/number-of-wechat-users/#:~:text=WeChat%20Number%20of%20Users%3A%20WeChat,more%20WeChat%20users%2C%20by%202025. BankMyCell.COM] the '''''<SPAN STYLE="COLOR:BLUE">PRC boasts of 1.671 billion users. </SPAN>''''' | |||

** '''''<SPAN STYLE="COLOR:BLUE">TenCent. </SPAN>'''''According to recent reporting by [https://webtribunal.net/blog/tencent-stats/ WebTribunal], TenCent has '''''<SPAN STYLE="COLOR:BLUE">over one billion users. </SPAN>''''' | |||

** '''''<SPAN STYLE="COLOR:BLUE">Surveillance. </SPAN>'''''Reporting indicates that '''''<SPAN STYLE="COLOR:BLUE">the PRC has [http://chinascope.org/archives/30749 over 600,000,000 surveillance cameras] </SPAN>'''''within a country of 1.37 billion people. | |||

The result is that the People's Republic of China (PRC) has an almost bottomless sea of data with which to train its deep learning systems. Furthermore, privacy legislation there places few practical limits on data collection by the state. A disadvantage for the PRC AI efforts is that their entire data sets are limited to the Chinese people. | |||

The relevant agencies charged with developing these AI capabilities benefit from a totally unrestricted volume of current training data. | |||

This positions the government of the PRC to chart a pathway forward, in very short time frames, in its efforts to develop the most advanced and sophisticated artificial intelligence systems on the planet. If viewed from the national security perspective then it is clear that an adversary with the capability to advance the breadth of capability and depth of sophistication of an artificial intelligence tool such as ChatGPT4 or DeepMind will have an overarching advantage over all other powers. | |||

This must be viewed in the context of the Western democracies, which in all cases are bound by public opinion and fundamental legal restrictions or roadblocks. A studied scrutiny of the available reports suggests that there is a very clear awareness of how quantum computing will be used in the area of artificial intelligence. | |||

* ''''' 2023.08.11 INSERT ENDS RIGHT HERE: MERGE INSERT WITH ANYTHING BELOW THAT LOOKS USEFUL ''''' | |||

* ''''' 2023.08.14: RESUME HERE - COMB THESE OUT... EVEN THEM UP; MERGE - PURGE''''' | |||

In what follows we pose several questions related to '''''<SPAN STYLE="COLOR:RED">Risk.</SPAN>''''' Because of the inherent novelty of what a quantum computing environment might make possible the following discussion limits itself to what is currently known. | |||

Risks fall into three main categories: '''''<SPAN STYLE="COLOR:RED">systemic, malicious</SPAN>''''' and '''''<SPAN STYLE="COLOR:RED">theoretical</SPAN>'''''. | |||

Systemic risks are risks that arise innately as a result of the use or adoption of a new technology. A case in point might be the risk of traffic accidents when automobiles began to proliferate. Prior to their presence there was no systematized and government sanctioned method of traffic management and control. | |||

One had to face the risk of dealing with what were often very chaotic traffic conditions. Only after the dangers of unregulated traffic behavior became recognized did various civil authorities impose controls on how automobile operators could operate. | |||

As private ownership of automobiles increased even further, vehicle identification and registration became a common practice. Further still, automobile operators became obliged to meet basic standards of operating competence and to pass exams that verified that competence. | |||

The impetus to regulate how a new technology is used recurs in most cases where that technology can be used positively or negatively. Operating an aircraft requires considerable academic and practical, hands-on training. | |||

After completing the minimum training that the civil authorities demand, a prospective pilot can apply for a pilot's license. We can see the same thing in the case of operators of heavy equipment such as long haul trucks, road repair vehicles and comparable specialized equipment. | |||

Anyone familiar with recent events in both the US and in various European countries will be aware that private vehicles have been used with malicious intent resulting in severe injury and death to innocent bystanders or pedestrians. We further recognize the fact that even though powered vehicles such as cars or trucks require licensing and usage restrictions they have still been repurposed to be used as weapons. | |||

'''''<SPAN STYLE="COLOR:#0000FF">Recent Reactions. </SPAN>''''' | |||

Since the most recent artificial intelligence systems swept over the public consciousness, sentiment has begun to crystallize into five general types: voices of encouragement, caution, action, preemption and urgency. | |||

[[File:Columbus00.jpg|left|500px]] | |||

'''''<SPAN STYLE="COLOR:BLUE">Caution. </SPAN>''''' | |||

Elon Musk and eleven hundred knowledgeable industry observers or participants signed an open letter urging caution and a pause in the development of the most powerful artificial intelligence systems. They voiced concern that the risks were very high for the creation and dissemination of false or otherwise misleading information that can incite various elements of society to action. | |||

They also expressed concern that the rapid pace of adoption of artificial intelligence tools can very quickly lead to job losses across a broad cross section of currently employed individuals. This topic is showing itself to be dynamic and fast changing. Therefore it merits regular review for the most recent insights and developments. | |||

'''''<SPAN STYLE="COLOR:RED">Action. </SPAN>''''' | |||

In recent weeks and months there have been sources signaling that the use of this new technology will make substantial beneficial impact on their activities and outcomes. Therefore they wish to see it advance as quickly as possible. Not doing so would place the advances made in the West and the US at risk. | |||

This could mean foreclosing on having the most capable system possible to use when dealing with external threats such as those that can be posed by the CCP. | |||

'''''<SPAN STYLE="COLOR:RED">Preemption. </SPAN>''''' | |||

Those familiar with geopolitics and national security hold the position that any form of pause would be suicide for the US because known competitors and adversaries will race ahead to advance their artificial intelligence capabilities at warp speed, in the process obviating anything that the leading companies in the West might accomplish. They argue that any kind of pause cannot even be contemplated. | |||

[[File:Ford00.jpg|left|500px]] | |||

Some voices go so far as to point out that deep learning systems require large data sets to be trained on. They posit the reality that the PRC has a population of 1.37 billion people. A recent report indicates that in 2023 there were 827 million WeChat users in the PRC. | |||

Further, the PRC makes use of data collection systems such as WeChat and TenCent. In each case these are systems that capture the messaging information from hundreds of millions of PRC residents on any given day. They also capture a comparably large amount of financial and related information each and every day. | |||

'''''<SPAN STYLE="COLOR:RED">WeChat. </SPAN>''''' | |||

According to [https://www.oberlo.com/statistics/number-of-wechat-users#:~:text=Number%20of%20WeChat%20users%20in%20China&text=The%20latest%20statistics%20show%20that,at%20least%20once%20a%20month. OBERLO.COM] the PRC boasts '''''<SPAN STYLE="COLOR:BLUE">827 million users.</SPAN>''''' | |||

'''''<SPAN STYLE="COLOR:RED">TenCent. </SPAN>''''' | |||

According to recent reporting by [https://webtribunal.net/blog/tencent-stats/ WebTribunal], TenCent has '''''<SPAN STYLE="COLOR:BLUE">over one billion users.</SPAN>''''' | |||

'''''<SPAN STYLE="COLOR:RED">Surveillance. </SPAN>''''' | |||

According to reporting from [https://www.comparitech.com/vpn-privacy/the-worlds-most-surveilled-cities/ Comparitech], the PRC has '''''<SPAN STYLE="COLOR:BLUE">over 600,000,000 surveillance cameras </SPAN>'''''within a country of 1.37 billion people. | |||

The result is that the People's Republic of China (PRC) has an almost bottomless sea of data with which to train its deep learning systems. Furthermore, privacy legislation there places few practical limits on data collection by the state. | |||

The relevant agencies charged with developing these artificial intelligence capabilities benefit from a totally unrestricted volume of current training data. | |||

This positions the government of the PRC to chart a pathway forward, in very short time frames, in its efforts to develop the most advanced and sophisticated artificial intelligence systems on the planet. | |||

If viewed from the national security perspective then it is clear that an adversary with the capability to advance the breadth of capability and depth of sophistication of an artificial intelligence tool such as ChatGPT4 or DeepMind will have an overarching advantage over all other powers. | |||

This must be viewed in the context of the Western democracies, which in all cases are bound by public opinion and fundamental legal restrictions or roadblocks. | |||

'''''<SPAN STYLE="COLOR:RED">Urgency. </SPAN>''''' | |||

A studied scrutiny of the available reports suggests that there is a very clear awareness of how quantum computing will be used in the area of artificial intelligence. | |||

Simply put, very little attention seems to be focused on the advent of quantum computing and how it will impact artificial intelligence progress and capabilities. | |||

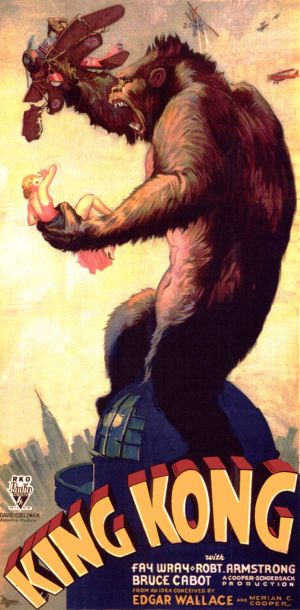

[[File:Kingkong00.jpg|right|300px]] | |||

What has already been made very clear is that even with the very limited quantum computing capabilities currently available, these systems prove themselves to be orders of magnitude faster in solving difficult problems than even the most powerful classical supercomputer ensembles. | |||

If we take a short step into the near term future then we might be obliged to attempt to assimilate and rationalize developments happening on a daily basis. Any or even all of which can have transformative implications. | |||

The upshot is that as quantum computing becomes more prevalent the field of deep learning will take another forward "quantum leap", quite literally, which will put those who possess it at an incalculable advantage. For everyone else it will be like trying to go after King Kong with a Spad S. armed only with BB pellets. | |||

The central problem in these most recent developments arises because of the well recognized human inability to process changes that happen in a nonlinear fashion. | |||

If change is introduced in a relatively linear fashion at a slow to moderate pace then most people adapt to and accommodate the change. However, if change happens geometrically, as we see in the areas of deep learning, then it is much more difficult to adapt. | |||
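The contrast between linear and geometric change can be made concrete with a small arithmetic sketch. The starting value, step size and doubling ratio below are arbitrary assumptions chosen only for illustration; they are not measurements of any real capability.

```python
def linear_growth(start, step, periods):
    """Values that increase by a fixed amount each period."""
    return [start + step * t for t in range(periods + 1)]


def geometric_growth(start, ratio, periods):
    """Values that are multiplied by a fixed ratio each period."""
    return [start * ratio ** t for t in range(periods + 1)]


# Hypothetical example: ten periods, adding 1 per period versus doubling per period.
linear = linear_growth(1, 1, 10)
geometric = geometric_growth(1, 2, 10)

for t, (lin, geo) in enumerate(zip(linear, geometric)):
    print(f"period {t:2d}: linear = {lin:3d}   geometric = {geo:5d}")
```

After ten periods the linear series has reached 11 while the doubling series has reached 1024, which is the kind of gap that makes geometric change hard to anticipate intuitively.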

* '''''<SPAN STYLE="COLOR:BLUE">-Theory of Operations-</SPAN>''''' | |||

'''''<SPAN STYLE="COLOR:#0000FF">CG4 – Theory of Operation: </SPAN>'''''CG4 is a narrow artificial intelligence system based upon what is known as a Generative Pre-trained Transformer. According to Wikipedia: Generative pre-trained transformers (GPT) are a type of Large Language Model (LLM) and a prominent framework for generative artificial intelligence. | |||

The first GPT was introduced in 2018 by the American artificial intelligence (AI) organization OpenAI. | |||

GPT models are artificial neural networks that are based on the transformer architecture, pretrained on large data sets of unlabeled text, and able to generate novel human-like content. As of 2023, most LLMs have these characteristics and are sometimes referred to broadly as GPTs. | |||

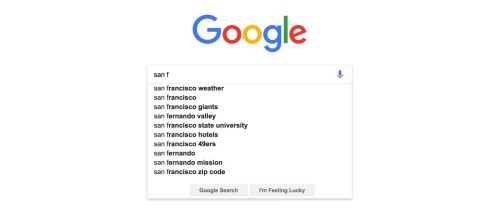

Generative Pre-Trained Language models are fundamentally prediction algorithms. They attempt to predict the next token or element of an input from the previous element or elements. An illustrative video describes how the prediction process works. Google Search similarly attempts to predict what a person is about to type. | |||

Generative Pre-Trained language models are attempting to do the same thing. But they require a very large corpus of language to work with in order to arrive at a high probability that they have made the right prediction. | |||
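The prediction idea can be sketched with a deliberately tiny model. The code below is a minimal illustration, not how CG4 works internally: it simply counts which word follows which in a three sentence toy corpus (an assumption made up for this example) and predicts the most frequent follower. Real GPT models replace the counting with a transformer neural network trained on vastly more text.

```python
from collections import Counter, defaultdict


def train_bigram_counts(corpus):
    """Count, for each word, which words follow it in the corpus."""
    follows = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current, nxt in zip(words, words[1:]):
            follows[current][nxt] += 1
    return follows


def predict_next(follows, word):
    """Return (most likely next word, estimated probability), or None if the word was never seen."""
    counts = follows.get(word.lower())
    if not counts:
        return None
    best, n = counts.most_common(1)[0]
    return best, n / sum(counts.values())


# Hypothetical toy corpus; a real model is trained on a very large body of text.
toy_corpus = [
    "the cat sat on the mat",
    "the cat ate the fish",
    "the dog sat on the rug",
]
model = train_bigram_counts(toy_corpus)

print(predict_next(model, "sat"))    # ('on', 1.0)
print(predict_next(model, "zebra"))  # None
```

With so little text the model can only repeat what it has seen, which illustrates why a very large corpus is needed before the predictions become reliable.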

'''''<SPAN STYLE="COLOR:#0000FF">Fundamentals. </SPAN>'''''Starting with the basics, the following videos explain how a neural network learns. | |||

'''''<SPAN STYLE="COLOR:BLUE">From 3Blue1Brown: </SPAN>''''' | |||

'''''<SPAN STYLE="COLOR:BLUE">[https://www.youtube.com/watch?v=aircAruvnKk&ab_channel=3Blue1Brown Neural_Network_Basics]</SPAN>''''' | |||

'''''<SPAN STYLE="COLOR:BLUE">[https://www.youtube.com/watch?v=IHZwWFHWa-w&ab_channel=3Blue1Brown Gradient Descent]</SPAN>''''' | |||

'''''<SPAN STYLE="COLOR:BLUE">[https://www.youtube.com/watch?v=Ilg3gGewQ5U&ab_channel=3Blue1Brown Back Propagation], </SPAN>''''' intuitively, what is going on? | |||

'''''<SPAN STYLE="COLOR:BLUE">[https://www.youtube.com/watch?v=tIeHLnjs5U8&ab_channel=3Blue1Brown Back Propagation Theory]</SPAN>''''' | |||
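For readers who prefer code to video, gradient descent can be sketched in a few lines. This is a minimal illustration under stated assumptions: a single weight, a made-up data set generated by y = 2x, and a hand-derived gradient. A real neural network has a great many weights and obtains the gradients by back propagation, but the update rule is the same in spirit.

```python
def fit_weight(xs, ys, learning_rate=0.01, steps=500):
    """Fit y ~ w * x by gradient descent on the mean squared error."""
    w = 0.0
    n = len(xs)
    for _ in range(steps):
        # For L = mean((w*x - y)^2), the derivative dL/dw is mean(2 * (w*x - y) * x).
        grad = sum(2.0 * (w * x - y) * x for x, y in zip(xs, ys)) / n
        # Step against the gradient to reduce the error.
        w -= learning_rate * grad
    return w


# Hypothetical training data generated by y = 2x.
xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.0, 4.0, 6.0, 8.0]
w = fit_weight(xs, ys)
print(round(w, 4))
```

The learned weight settles very close to 2, the value used to generate the data. The learning rate and step count are arbitrary choices that happen to converge for this data set.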


'''''<SPAN STYLE="COLOR:#0000FF">CG4 – What is it: </SPAN>''''' | |||

[[File:GooglePredict.jpg|left|500px]] | |||

Large Language Models attempt to predict the next token, or word fragment, from an input text. In part one the narrator describes how an input is transformed using a neural network to predict an output. In the case of language models the prediction process attempts to predict what should come next based upon the word or token that has just been processed. However, in order to generate accurate predictions, very large bodies of text are required to pre-train the model. | |||

'''''<SPAN STYLE="COLOR:BLUE">Part One. </SPAN>'''''In this video the narrator describes how words are used to predict subsequent words in an input text. | |||

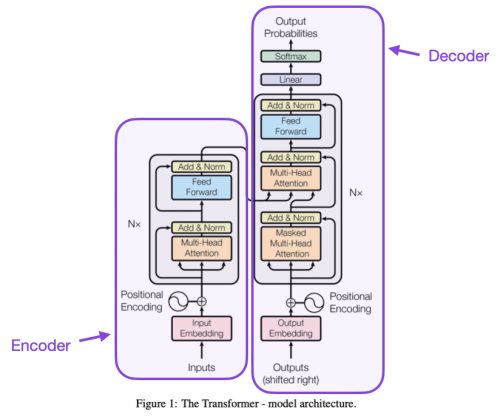

'''''<SPAN STYLE="COLOR:BLUE">Part Two. </SPAN>'''''Here, the narrator expands on how the transformer network is constructed by combining the next word network with the attention network to create context vectors that use various weightings to attempt to arrive at a meaningful result. | |||

'''''<SPAN STYLE="COLOR:BLUE">Note: </SPAN>'''''this is a more detailed explanation of how a transformer is constructed. It details how each term in an input text is encoded using a context vector; the narrator then explains how the set of context vectors associated with each word or token is passed by the attention network to the next word prediction network, which attempts to match the input with the closest matching output text. | |||
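The way attention blends token vectors into context vectors can be sketched as follows. This is a simplified illustration of scaled dot-product attention, assuming made-up two dimensional embeddings and using the embeddings directly as queries, keys and values. A real transformer first multiplies the embeddings by learned projection matrices and runs many attention heads in parallel; none of the numbers here come from CG4.

```python
import math


def softmax(scores):
    """Turn arbitrary scores into positive weights that sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention: returns one context vector per query."""
    d = len(keys[0])
    contexts = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt of the vector size.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        # The context vector is the weighted average of the value vectors.
        context = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        contexts.append(context)
    return contexts


# Hypothetical 2-dimensional embeddings for three tokens.
tokens = ["the", "cat", "sat"]
embeddings = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
contexts = attention(embeddings, embeddings, embeddings)

for token, context in zip(tokens, contexts):
    print(token, [round(c, 3) for c in context])
```

Each printed context vector is a weighted mixture of all three token vectors, with more weight given to the tokens most similar to the one being processed.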

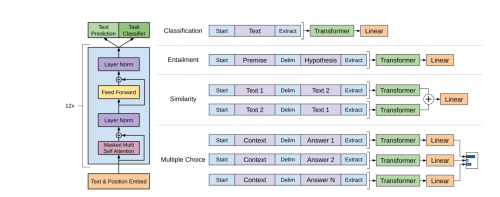

[[File:Transformer.png|left|500px]] | |||

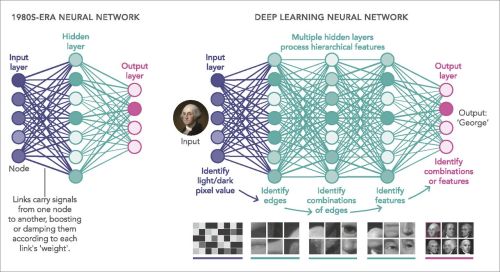

Generative pre-trained transformers are implemented using a deep learning neural network topology. This means that they have an input layer, a set of hidden layers and an output layer. With more hidden layers the ability of the deep learning system increases. Currently the number of hidden layers in CG4 is not known but speculated to be very large. A generic example of how hidden layers are implemented can be seen as follows. | |||
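As a rough illustration of the layered structure described above, the following minimal sketch passes an input through two hidden layers and an output layer. The weights are hand-picked, hypothetical values and the ReLU activation is an assumption made for the example; nothing here is taken from CG4, whose architecture details have not been published.

```python
def dense(inputs, weights, biases):
    """One fully connected layer: weights[j] holds the input weights of neuron j."""
    return [
        sum(w * x for w, x in zip(row, inputs)) + b
        for row, b in zip(weights, biases)
    ]


def relu(values):
    """Simple non-linearity: negative values become zero."""
    return [max(0.0, v) for v in values]


def forward(inputs, layers):
    """Pass inputs through every layer; ReLU on hidden layers, none on the output layer."""
    activations = inputs
    for i, (weights, biases) in enumerate(layers):
        activations = dense(activations, weights, biases)
        if i < len(layers) - 1:
            activations = relu(activations)
    return activations


# Hypothetical network: 2 inputs -> 3 hidden neurons -> 2 hidden neurons -> 1 output.
layers = [
    ([[1.0, -1.0], [0.5, 0.5], [-1.0, 1.0]], [0.0, 0.0, 0.0]),
    ([[1.0, 1.0, 0.0], [0.0, 1.0, 1.0]], [0.0, 0.0]),
    ([[1.0, -1.0]], [0.5]),
]
output = forward([2.0, 1.0], layers)
print(output)  # [1.5]
```

Adding more entries to the `layers` list adds more hidden layers; in a trained network the weights would be learned from data rather than written by hand.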

The Generative Pre-trained Transformer accepts some text as input. It then attempts to predict the next word based upon this input in order to generate an output. It has been trained on a massive corpus of text on which it bases its predictions. The basics of how tokenization is done can be found here. | |||

Tokenization is the process of splitting the input text into words or word fragments (tokens), each of which is mapped to an entry in a fixed vocabulary along with its position in the input text. The training step enables a deep neural network to learn language structures and patterns. The neural network will then be fine tuned for improved performance. In the case of CG4 the size of the corpus of text that was used for training has not been revealed, and the model itself is rumored to have over one trillion parameters. | |||
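A toy tokenizer makes the mapping concrete. The sketch below is a simplification under stated assumptions: it splits on whole words and punctuation and builds its vocabulary from a single made-up sentence. Production GPT tokenizers instead use subword schemes such as byte pair encoding, so that unfamiliar words can be broken into known fragments rather than mapped to an unknown marker.

```python
import re


def split_tokens(text):
    """Lower-case the text and split it into words and punctuation marks."""
    return re.findall(r"\w+|[^\w\s]", text.lower())


def build_vocabulary(texts):
    """Assign an integer id to every distinct token; id 0 is reserved for unknown tokens."""
    vocab = {"<unk>": 0}
    for text in texts:
        for token in split_tokens(text):
            vocab.setdefault(token, len(vocab))
    return vocab


def encode(text, vocab):
    """Return (position, token, id) triples for the input text."""
    return [
        (pos, tok, vocab.get(tok, 0))
        for pos, tok in enumerate(split_tokens(text))
    ]


# Hypothetical one-sentence training text used to build the vocabulary.
vocab = build_vocabulary(["The cat sat on the mat."])
encoded = encode("The dog sat.", vocab)
print(encoded)
```

The word "dog" does not appear in the toy vocabulary, so it receives id 0, the unknown marker. The integer ids and positions, not the raw characters, are what the neural network actually receives.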

[[File:Tokens00.png|left|500px]] | |||

Large language models perform their magic by accepting text as input and assigning several parameters to each token that is created. A token can be a whole word or part of a word. The position of the word or word fragment is also recorded. The Graphics in Five Minutes channel provides a very concise description of how words are converted to tokens and then how tokens are used to make predictions. | |||

'''''<SPAN STYLE="COLOR:BLUE">* Transformers </SPAN>'''''(basics, BERT, GPT)[1] This is a lengthy and very detailed explanation of the BERT and GPT transformer models for those interested in specific details. | |||

'''''<SPAN STYLE="COLOR:BLUE">* Words and Tokens </SPAN>'''''This video provides a general and basic explanation of how words or tokens are predicted using the large language model. | |||

'''''<SPAN STYLE="COLOR:BLUE">* Context Vectors, Prediction and Attention. </SPAN>'''''In this video the narrator expands upon how words and tokens are mapped into input text positions and is an excellent description of how words are assigned probabilities; based upon the probability of word frequency an expectation can be computed that predicts what the next word will be. | |||

[[File:DeepLearning.jpg|left|500px]] | |||

image source: IBM. Hidden Layers | |||

'''''<SPAN STYLE="COLOR:BLUE">ChatGPT4 is a Large Language Model system. </SPAN>'''''Informal assessments suggest that it has over one trillion parameters. But these suspicions have not been confirmed. If this speculation is true then CG4 will be the largest large language model to date. | |||

'''''<SPAN STYLE="COLOR:BLUE">According to Wikipedia: A Large Language Model</SPAN>''''' (LLM - Wikipedia) is a Language Model consisting of a Neural Network with many parameters (typically billions of weights or more), trained on large quantities of unlabeled text using Self-Supervised Learning or Semi-Supervised Learning. LLMs emerged around 2018 and perform well at a wide variety of tasks. | |||

This has shifted the focus of Natural Language Processing research away from the previous paradigm of training specialized supervised models for specific tasks. | |||

It uses what is known as the Transformer Model. The Turing site offers useful insight as well into how the transformer model constructs a response from an input. Because the topic is highly technical we leave it to the interested reader to examine the detail processing steps. | |||

The transformer model is a neural network that learns context and understanding as a result of sequential data analysis. The mechanics of how a transformer model works are beyond the technical scope of this summary but a good summary can be found here. | |||

If we use the associated diagram as a reference model then we can see that when we migrate to a deep learning model with a large number of hidden layers then the ability of the deep learning neural network escalates. If we examine closely the facial images at the bottom of the diagram then we can see that there are a number of faces. | |||

Included in the diagram is a blow up of a selected feature from one of the faces. In this case it comes from the image of George Washington. If we are using a deep learning system with billions to hundreds of billions of parameters then we should expect the deep learning model to possess an exquisite ability to discern extremely fine detail in recognition tasks, which is in fact exactly what happens. | |||

We can see in this diagram the main processing steps that take place in the transformer. The two main processing cycles include encoding processing and decoding processing. As this is a fairly technical discussion we will defer examination of the internal processing actions for a later iteration. | |||

[[File:Transformer00.png|left|500px]] | |||

The following four references offer an overview of what basic steps are taken to train and fine tune a GPT system. | |||

'''''<SPAN STYLE="COLOR:BLUE">"Attention is all you need" Transformer model: </SPAN>''''' processing | |||

'''''<SPAN STYLE="COLOR:BLUE">Training and Inferencing a Neural Network</SPAN>''''' | |||

'''''<SPAN STYLE="COLOR:BLUE">Fine Tuning GPT</SPAN>''''' | |||

'''''<SPAN STYLE="COLOR:BLUE">General Fine Tuning</SPAN>''''' | |||

$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$ RESUME RIGHT HERE $$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$ | |||

CG4 is a narrow artificial intelligence system, it is based upon what is known as a Generative Pre-trained Transformer. According to Wikipedia: Generative pre-trained transformers (GPT) are a type of Large Language Model (LLM) and a prominent framework for generative artificial intelligence. The first GPT was introduced in 2018 by the American artificial intelligence (AI) organization OpenAI. | |||

GPT models are artificial neural networks that are based on the transformer architecture, pretrained on large data sets of unlabeled text, and able to generate novel human-like content. As of 2023, most LLMs have these characteristics and are sometimes referred to broadly as GPTs. Generative Pre-Trained Language models are fundamentally prediction algorithms. They attempt to predict a next token or element from an input from the previous or some prior element. Illustrative video describing how the prediction process works. Google Search is attempting to predict what a person is about to type. Generative Pre-Trained language models are attempting to do the same thing. But they require a very large corpus of langue to work with in order to arrive at a high probability that they have made the right prediction. | GPT models are artificial neural networks that are based on the transformer architecture, pretrained on large data sets of unlabeled text, and able to generate novel human-like content. As of 2023, most LLMs have these characteristics and are sometimes referred to broadly as GPTs. Generative Pre-Trained Language models are fundamentally prediction algorithms. They attempt to predict a next token or element from an input from the previous or some prior element. Illustrative video describing how the prediction process works. Google Search is attempting to predict what a person is about to type. Generative Pre-Trained language models are attempting to do the same thing. But they require a very large corpus of langue to work with in order to arrive at a high probability that they have made the right prediction. | ||

Large Language Models

Large Language Models attempt to predict the next token, or word fragment, from an input text. In part one the narrator describes how an input is transformed using a neural network to predict an output. In the case of language models the prediction process attempts to predict what should come next based upon the word or token that has just been processed. However, in order to generate accurate predictions, very large bodies of text are required to pre-train the model.

* Part One. In this video the narrator describes how words are used to predict subsequent words in an input text.


* Part Two. Here, the narrator expands on how the transformer network is constructed by combining the next word network with the attention network to create context vectors that use various weightings to attempt to arrive at a meaningful result.

* Note: this is a more detailed explanation of how a transformer is constructed; it details how each term in an input text is encoded using a context vector. The narrator then explains how the set of context vectors associated with each word or token is passed from the attention network to the next word prediction network, which attempts to match the input with the closest matching output text.

* Generative pre-trained transformers are implemented using a deep learning neural network topology. This means that they have an input layer, a set of hidden layers and an output layer. With more hidden layers the capability of the deep learning system increases. Currently the number of hidden layers in CG4 is not known but is speculated to be very large. A generic example of how hidden layers are implemented can be seen in the accompanying diagram.
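The layered topology just described (an input layer, a set of hidden layers and an output layer) can also be sketched in a few lines of code. This is a minimal illustration only: the layer sizes, the random weights and the tanh activation are assumptions chosen for brevity and say nothing about the actual structure of CG4.

```python
import math
import random

def make_layer(n_inputs, n_outputs, rng):
    """Create one fully connected layer as (weights, biases) with small random weights."""
    weights = [[rng.uniform(-0.5, 0.5) for _ in range(n_inputs)] for _ in range(n_outputs)]
    biases = [0.0] * n_outputs
    return weights, biases

def forward(layers, inputs):
    """Pass the inputs through every layer in turn, applying tanh after each one."""
    activations = inputs
    for weights, biases in layers:
        activations = [
            math.tanh(sum(w * a for w, a in zip(row, activations)) + b)
            for row, b in zip(weights, biases)
        ]
    return activations

rng = random.Random(0)
layer_sizes = [4, 8, 8, 3]  # input layer, two hidden layers, output layer (illustrative sizes)
network = [make_layer(n_in, n_out, rng) for n_in, n_out in zip(layer_sizes, layer_sizes[1:])]
output = forward(network, [1.0, 0.5, -0.5, 0.0])
print(len(output))  # 3
```

Adding more entries to layer_sizes adds more hidden layers; the weights here are untrained, so the output values themselves carry no meaning.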

The Generative Pre-trained Transformer accepts some text as input. It then attempts to predict the next word based upon this input in order to generate an output. It has been trained on a massive corpus of text on which it bases its predictions. The basics of how tokenization is done can be found here.

Tokenization is the process of splitting the input text into words or word fragments (tokens) and recording the position of each in the input text. The training step enables a deep neural network to learn language structures and patterns. The neural network is then fine tuned for improved performance. In the case of CG4 neither the size of the training corpus nor the size of the model has been revealed, but the model is rumored to have over one trillion parameters.
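The mapping of words to tokens and positions can be sketched as follows. This is a deliberately simplified illustration that splits on whitespace and treats each whole word as a token; production systems such as the GPT family use subword schemes, so the functions build_vocabulary and tokenize below are hypothetical helpers and not part of any real tokenizer.

```python
def build_vocabulary(texts):
    """Assign an integer ID to every distinct lowercase word, in order of first appearance."""
    vocabulary = {}
    for text in texts:
        for word in text.lower().split():
            if word not in vocabulary:
                vocabulary[word] = len(vocabulary)
    return vocabulary

def tokenize(text, vocabulary, unknown_id=-1):
    """Return (position, token_id) pairs for each word in the text."""
    return [
        (position, vocabulary.get(word, unknown_id))
        for position, word in enumerate(text.lower().split())
    ]

vocabulary = build_vocabulary(["the cat sat on the mat"])
tokens = tokenize("the mat sat", vocabulary)
print(tokens)  # [(0, 0), (1, 4), (2, 2)]
```

Each pair records where the token sits in the input and which vocabulary entry it corresponds to; words that were never seen receive the placeholder ID -1 in this sketch.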

They perform their magic by accepting text as input and assigning several parameters to each token that is created. A token can be a whole word or part of a word, and one of these parameters is the position of the word or word fragment in the input. The Graphics in Five Minutes channel provides a very concise description of how words are converted to tokens and then how tokens are used to make predictions.

* Transformers (basics, BERT, GPT)[1] This is a lengthy and very detailed explanation of the BERT and GPT transformer models for those interested in specific details.

* Words and Tokens. This video provides a general and basic explanation of how words or tokens are predicted using the large language model.

* Context Vectors, Prediction and Attention. In this video the narrator expands upon how words and tokens are mapped into input text positions and gives an excellent description of how words are assigned probabilities; based upon the probability of word frequency an expectation can be computed that predicts what the next word will be.
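The notions of context vectors, attention weights and probabilities mentioned in these videos can be illustrated with a small sketch of scaled dot-product attention, the weighting scheme introduced in the "Attention is all you need" paper. The two-dimensional token vectors below are made up for the example, and the function names softmax and attend are assumptions; a real transformer learns its vectors during training and uses many attention heads and layers.

```python
import math

def softmax(scores):
    """Turn arbitrary scores into probabilities that sum to 1."""
    largest = max(scores)  # subtracting the maximum keeps exp() numerically stable
    exps = [math.exp(s - largest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attend(query, keys, values):
    """Weight each value vector by how well its key matches the query."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    dimension = len(values[0])
    context = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dimension)]
    return weights, context

# Made-up two-dimensional vectors for three tokens
token_vectors = {"the": [1.0, 0.0], "cat": [0.0, 1.0], "sat": [1.0, 1.0]}
keys = list(token_vectors.values())
weights, context = attend(token_vectors["sat"], keys, keys)
print([round(w, 3) for w in weights])
print([round(c, 3) for c in context])
```

The weights form a probability distribution over the tokens, and the context vector is the correspondingly weighted blend of their vectors.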

image source: IBM. Hidden Layers

Chat GPT4 is a Large Language Model system. Informal assessments suggest that it has over one trillion parameters, but these suspicions have not been confirmed. If this speculation is true then CG4 would be one of the largest large language models to date. According to Wikipedia: A Large Language Model (LLM - Wikipedia) is a Language Model consisting of a Neural Network with many parameters (typically billions of weights or more), trained on large quantities of unlabeled text using Self-Supervised Learning or Semi-Supervised Learning. LLMs emerged around 2018 and perform well at a wide variety of tasks. This has shifted the focus of Natural Language Processing research away from the previous paradigm of training specialized supervised models for specific tasks.
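Because "parameters" refers to the weights of the network rather than to the amount of training text, it may help to see how a parameter count arises. The sketch below counts the weights and biases of a plain fully connected network; the function count_parameters and the layer sizes are assumptions for illustration, and transformer models contain additional kinds of parameters that are not modeled here.

```python
def count_parameters(layer_sizes):
    """Count weights and biases in a fully connected network with the given layer sizes."""
    total = 0
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        total += n_in * n_out  # one weight per connection between adjacent layers
        total += n_out         # one bias per unit in the receiving layer
    return total

print(count_parameters([4, 8, 8, 3]))              # 139
print(count_parameters([1000, 4000, 4000, 1000]))  # 24009000
```

The count grows roughly with the product of adjacent layer widths, which is one reason that wide and deep models quickly reach billions of parameters.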

It uses what is known as the Transformer Model. The Turing site also offers useful insight into how the transformer model constructs a response from an input. Because the topic is highly technical we leave it to the interested reader to examine the detailed processing steps.

The transformer model is a neural network that learns context and understanding as a result of sequential data analysis. The mechanics of how a transformer model works are beyond the technical scope of this summary but a good summary can be found here.

If we use the associated diagram as a reference model then we can see that when we migrate to a deep learning model with a large number of hidden layers the ability of the deep learning neural network escalates. If we examine closely the facial images at the bottom of the diagram then we can see that there are a number of faces. Included in the diagram is a blow up of a selected feature from one of the faces. In this case it comes from the image of George Washington. If we are using a deep learning system with billions to hundreds of billions of parameters then we should expect the deep learning model to possess the most exquisite ability to discern extremely fine detail in recognition tasks, which is in fact exactly what happens.

We can see in this diagram the main processing steps that take place in the transformer. The two main processing cycles are encoding and decoding. As this is a fairly technical discussion we will defer examination of the internal processing actions for a later iteration.

The following four references offer an overview of the basic steps taken to train and fine tune a GPT system.

'''''<SPAN STYLE="COLOR:BLUE">* "Attention is all you need" Transformer model: processing</SPAN>'''''

'''''<SPAN STYLE="COLOR:BLUE">* Training and Inferencing a Neural Network</SPAN>'''''

'''''<SPAN STYLE="COLOR:BLUE">* Fine Tuning GPT</SPAN>'''''

'''''<SPAN STYLE="COLOR:BLUE">* General Fine Tuning</SPAN>'''''

'''''<SPAN STYLE="COLOR:BLUE">An Overview. </SPAN>''''' If we step back for a moment and summarize what some observers have had to say about this new capability then we might tentatively start with the observation that it:

* is based upon and is a refinement of its predecessor, the Chat GPT 3.5 system;

* has been developed using the generative pre-trained transformer (GPT) model;

* has been trained on a very large data set including textual material that can be found on the internet; unconfirmed rumors suggest that it has 1 trillion parameters;

* is capable of sustaining conversational interaction using text based input provided by a user;

* can provide contextually relevant and consistent responses;

* can link topics in a chronologically consistent manner and refer back to them in current prompt requests;

* is a Large Language Model that uses prediction as the basis of its actions;

* uses deep learning neural networks and very large training data sets;

* is delivered using a software-as-a-service (SaaS) model, like Google Search, YouTube or Morningstar Financial;

'''''<SPAN STYLE="COLOR:BLUE">Interim Observations and Conclusions.</SPAN>'''''

* this technology will continue to introduce novel, unpredictable and disruptive risks;

* a range of dazzling possibilities will emerge that will be beneficial to broad swathes of society;