Difference between revisions of "Darwin2049/ChatGPT4/PhaseShift"


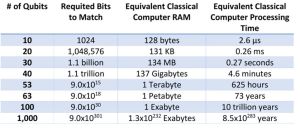


Revision as of 01:12, 16 December 2023

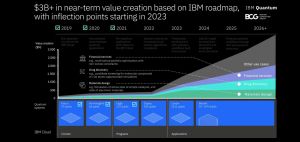

Quantum Computing. Quantum computing is currently making its way into the mainstream computing world. Industrial giants such as IBM and Google, along with smaller entrants such as D-Wave, are moving briskly to usher this new technology into the light of day.

IBM recently (December 2023) announced its IBM Quantum System Two, which is built around three 133-qubit Heron processors; its earlier Osprey processor has 433 qubits. Google has published a timeline of progress stipulating increasing performance milestones through the 2020s. Some salient points to keep in mind regarding this topic are that:

- Before/After. Quantum computing will be transformative in all areas of human endeavor. Impacts will emerge that are comparable to the taming of fire, animal husbandry, agriculture and writing.

- Cognitive Prosthetic. Increasingly mainstream quantum computing systems will enable dramatic advances in science and technology. Associated advances will emerge in other areas of human endeavor such as politics, sociology, psychology, economics and geopolitics. A tentative model might be a person with a cognitive expansion capability. This might resemble something like an actively directable form of subconsciousness.

- Composite Systems. Typical high end systems today consist of rows of rack mounted processing systems that are interfaced together for enhanced performance. IBM has publicized that its Quantum System Two will function as an element in a hybrid ensemble. An environment with several dedicated systems will offer novel and unexpected capabilities to users.

- Conformal Cognitive Interfaces. Combining a deep learning based system with optimized user interface systems that excel at voice and video presentation will result in systems that give users the experience of interacting with the most knowledgeable people on the planet. These avatar based presentations will be indistinguishable from real people. As their behavioral traits are calibrated to make their presentations more human-like, a revision of the Turing Test will become necessary. Early fictional portrayals as well as recent scientific and engineering developments suggest this will become the common reality. Why might this be so?

- HAL9000. In the 1968 movie 2001: A Space Odyssey, a computing system known as HAL9000 exhibited behavior that might convince an informed observer that it was conscious. Developments during the course of the movie made clear that it had intentionality and goal seeking behavior. Viewed from today's climate of caution about alignment risks, HAL9000 clearly demonstrated that alignment had been missing from its development, with lethal results. That being so, the movie made groundbreaking predictions insofar as HAL9000 was capable of interacting with humans via speech. It possessed acute visual and spatial abilities. Moreover, and crucially, it clearly demonstrated theory of mind. This became evident when it formulated and executed a plan to mislead the crew into believing that a crucial component failure had actually happened.

- Sphere. This Michael Crichton novel (which later became a movie) powerfully presented the concept of a cognitive prosthetic capable of materializing objects based upon the wishes or desires of a user. A key element in the novel was the supposition that an advanced extraterrestrial civilization might create technology that can materialize whatever a user could envision. Ultimately the sphere could be considered a cognitive echo chamber, but one with the ability to materialize the user's thoughts or wishes. Like HAL9000 it exhibited no signs of alignment. Rather it was presented as just another tool, much as hammers or screwdrivers are merely tools with no intrinsic means of enforcing moral or ethical values upon their use. It exhibited none of the traits associated with consciousness such as sentience, goal oriented behavior or intentionality.

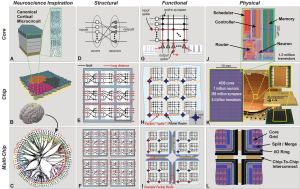

- CG4/Watson/TrueNorth (IBM). The IBM corporation has a technology imperative of creating electronic versions of massive neural networks. The current state of play can be seen with the IBM TrueNorth processor. This is a neuromorphic device that mimics the neurological activity of significant swathes of the mammalian cortex. Incorporating this technology into an ensemble of processing elements means that the new systems that will emerge will possess capabilities that rival or even dwarf those of the best humans, animals or combinations thereof.

- Geostrategic Imperatives. The national security community has forcefully sounded the alarm about the existential importance of seizing the scientific and technological high ground in quantum computing and artificial intelligence. Going forward the race between the US and the PRC will escalate. Calls to slow or pause advances in deep learning systems will be dismissed as suicidally naïve and shortsighted.

Phase Shift: Gas, Liquid, Solid. Classical physics describes how states of matter possess different properties depending upon their energy state and environment.

- It is undetectable. At sea level we experience the atmosphere as an odorless, colorless, tasteless gas. We are unaware of the water vapor that we inhale and exhale along with it.

- No, it is wet. Cool the air below its dew point and this vapor, which is difficult if not impossible to detect, condenses into a clear liquid with properties that are absent in the gaseous state.

- No, it is solid. At sea level the freezing point of water is 32°F. Lower the temperature below that point and the liquid becomes a solid. Neither the gaseous nor the liquid state of water prepares us to see solid objects made from this same material. This illustrates that a prior state of knowledge may offer no insight when encountering a subsequent state.

- Dual Use. This means that there will be little or no time for reflection as to whether a given capability should be pursued.

- Unintended Consequences. This implies that we will enable possibilities that were not envisioned. Little or no time will be required to identify promising pathways forward when trying to solve difficult problems. Meanwhile, the pace is increasing.

- Warp Speed. Informed observers of OpenAI have reported that ChatGPT4 required roughly ninety to one hundred days of training time using 25,000 high end Nvidia A100 GPU devices. As of this writing (December 2023) faster H100 GPU devices are expected to halve the number of devices needed and the time required to perform the same training.

- Instant Results. Quantum systems may collapse this time to minutes or less. This is because a new concept, process or idea could be validated almost instantly. What recently required weeks or months of 24/7 run time could then be performed in seconds. New functionality and capability that might have been regarded as “possible but over the horizon” might become available almost immediately.

- Risks. Going directly from conceptualizing a solution approach to seeing that problem’s solution instantly available leaves no margin of time to assess risk.

- Therefore. ChatGPT4 allows the user to perform fine tuning for their specific purposes. This suggests that we may see an entirely new ecology arise in very short periods of time. It may be like transitioning from submitting batch jobs on decks of punched cards to ultra high resolution virtual reality in one step. The upshot will be that problems that no one has yet even imagined will become soluble in breathtakingly short periods of time. By way of imagining just a few:

- Cambrian Explosion. The genie is already out of the bottle. Attempts to rein it back in will prove to be futile. An explosion is likely to result that will defy the best efforts to control or regulate it. Therefore we might expect to see a proliferation comparable to that of earlier eras in the development of life on earth.

- Ecology. This technology is essentially unregulated. The US Administration has proposed actions. However these may or may not get implemented any time soon. Widespread availability of deep learning and related technology absent meaningful regulation practically ensures that a new ecological environment will emerge. At one end will be government sponsored research facilities and contractors. At the other end of the spectrum we may see the emergence of "one man and his dog" outfits that provide highly specialized niche products or services. An entire new ecology may gradually crystallize as a result. The electronic nature and the instant nature of worldwide connectedness may show a reiteration of evolutionary pressures that will morph at speeds far beyond the evolution of software.

- The Emerging Clerisy. Two opposing schools of thought have emerged centering around the dangers that an artificial intelligence system might exhibit. A December 7th, 1941 type event against the US by a rival or adversary would instantly tip the balance in favor of no-holds-barred efforts to advance all possible variations of artificial intelligence systems.

- Orthodoxy. For purposes of discussion we describe the first group’s stance as the Orthodoxy. Its stance is that ongoing developments can unwittingly introduce unacceptable risk.

- Heterodoxy. This group’s position is that possession of advanced artificial intelligence capabilities by a rival or hostile adversary represents an existential threat and must be avoided at all costs.

- Why. Because a number of quantum algorithms promise to upend existing processes or safeguards.

For purposes of being concrete consider just a few algorithms.

- Deutsch's Algorithm.

- Shor's Algorithm. This is an algorithm that can be used to crack certain encryption schemes. It does this by efficiently factoring large composite integers, such as the product of two large primes. Quantum computers can collapse the time and effort required for this factoring, and with that ability it becomes possible to crack RSA encryption. Shor's Algorithm does not itself solve the Traveling Salesman problem, but that problem shows why other quantum approaches to optimization are attracting interest. The goal is to determine the most efficient path even with large numbers of nodes and links, where the nodes represent branch points and the links the arcs that join them. As the number of cities to be visited and the number of possible pathways between them increase, the number of possible solutions explodes, and this optimization problem can very quickly overwhelm classical computing architectures. A quantum computer does not simply explore all pathways simultaneously and present the best one; known quantum search methods offer at most a quadratic speed up for this kind of problem, and no efficient quantum algorithm for it is known. Machine learning is a similar case. Whether quantum algorithms can deliver dramatic speed ups when training extremely large deep learning models remains an open research question.

- Grover’s Algorithm. This is an algorithm developed in 1996 by Lov Grover. It leverages quantum computing to reduce the time needed to search N unordered elements from roughly N evaluations to roughly √N. Note that a crucial factor is that, in searching for a specific element that meets a set of desired criteria, evaluating any one element yields no information about which other element of the N might be the desired one.

- Grover's Algorithm - Explanation. A short video that explains Grover's Algorithm. Note that this is a sophisticated presentation that presumes some understanding of the underlying quantum mechanics.

- Grover's Algorithm - Uses. As quantum computing is applied to real world problems, it may become possible to model very complex chemical problems. This would mean that new materials and pharmaceuticals could be discovered in record time.
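The search behavior described for Grover's Algorithm can be illustrated with a small classical simulation. The sketch below is a minimal illustration under stated assumptions, not a quantum implementation: it tracks all N amplitudes directly in a Python list, and the names `grover_search`, `n_items` and `marked` are hypothetical choices made for this example. Each round applies the two standard steps, an oracle that flips the sign of the marked element's amplitude and a diffusion step that reflects every amplitude about the mean.

```python
import math


def grover_search(n_items, marked):
    """Classically simulate Grover's algorithm on n_items elements.

    Returns (probabilities, rounds): the probability of measuring each
    element after the final round, and the number of rounds that were run.
    """
    # Start in the uniform superposition: every element equally likely.
    amplitudes = [1.0 / math.sqrt(n_items)] * n_items

    # Roughly (pi / 4) * sqrt(N) rounds bring the marked amplitude near 1.
    rounds = math.floor(math.pi / 4 * math.sqrt(n_items))

    for _ in range(rounds):
        # Oracle: flip the sign of the marked element's amplitude.
        amplitudes[marked] = -amplitudes[marked]

        # Diffusion: reflect every amplitude about the current mean.
        mean = sum(amplitudes) / n_items
        amplitudes = [2.0 * mean - a for a in amplitudes]

    # Measurement probabilities are the squared amplitudes.
    probabilities = [a * a for a in amplitudes]
    return probabilities, rounds


if __name__ == "__main__":
    probs, rounds = grover_search(64, marked=5)
    print("rounds:", rounds)
    print("probability of the marked element:", round(probs[5], 4))
```

For 64 elements the loop runs 6 rounds, compared with an average of about 32 checks for a classical scan of an unordered list, and the marked element is then by far the most likely measurement result. Note that the simulation itself still does work proportional to N in every round; only real quantum hardware would obtain the √N behavior.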

Quantum Mechanics. The items that follow are deliberately kept brief because a discussion of the mathematics and physics underpinning quantum computing is beyond the scope of this discussion. The topic requires a deep understanding of, and conversance with, very advanced mathematics and physics.

Quantum computing mechanisms, due to their inherent nature, are expected to solve certain problems that are beyond any current or foreseeable classical computing architecture.

There are various reasons why this is the case. Quantum computing as the name suggests is grounded in the quantum world. Understanding quantum physics requires the most advanced grounding in physics and mathematics. Therefore we provide only a cursory introduction to some of the more basic elements. Additional definitions can be found via the link at the end of this page.

Advanced mathematics is advised for those wishing to further understand the crucial elements of entanglement, coherence, vector spaces or quantum algorithms.

What can be said currently however is that this new computational environment will make currently intractable problems soluble within acceptable time frames. The ability to do so will invariably carry with it great promise but also great risk.

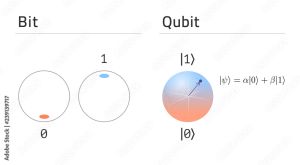

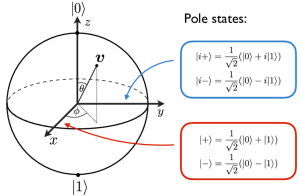

- Superposition. Quantum computers are capable of performing certain calculations that are beyond the scope of classical computing devices. This arises from the quantum reality of superposition. Until it is measured, a quantum system can exist in a combination of all of its definable states at once; a qubit, for example, can be in a blend of 0 and 1. The act of attempting a measurement results in the collapse of this superposition state and returns a specific value. A related limit applies to pairs of properties such as position and momentum: the more precisely one is measured, the less precisely the other can be known. This arises due to Heisenberg's Uncertainty Principle.

- Entanglement. Two electrons can have an entangled state. This means that if one electron of the pair is found in one state, such as spin up, then the other electron will be found in the spin down state. The two electrons can be removed to an arbitrary distance from each other. However examination of one of the pair will automatically reveal the state of the other. [This short explanation](https://www.youtube.com/watch?v=fkAAbXPEAtU) captures the basics of entanglement.

- Coherence/Decoherence. A crucial factor that conditions the utility of a device operating at the quantum level is noise. Any kind of noise from heat, vibration or cosmic rays can disrupt the extremely delicate processes at the quantum level. A strategy for dealing with this problem has been to use large numbers of qubits as an error correcting means. As a result, published qubit counts often do not distinguish the qubits that perform useful computation from those that do not. When a quantum device is said to consist of over a thousand qubits, it might in fact have to use 90% of them just for maintaining quantum coherence and entanglement. In order to get meaningful results these quantum states must be maintained for the duration of the calculation. The advantage does not come from raw electronic or even photonic speed; rather, superposition allows many possibilities to be evaluated in parallel. The result has been the dramatic speed up numbers that have recently been reported by various research labs and corporations. A quantum computer with one hundred or more coherent qubits could therefore outperform classical computers by very wide margins on suitable problems.

- Wave-Particle Duality. Quantum physics reveals that at subatomic levels matter and light exhibit behavior that is wave-like as well as particle-like. Despite this being intuitively contradictory, innumerable experiments have conclusively shown that the dual wave-particle nature of matter and light is a reality. This is an abstruse topic and its full explication is beyond the scope of this discourse. See [this page for more insight](https://www.wikiwand.com/en/Wave%E2%80%93particle_duality). A schematic representation of the [particle-wave duality of light can be studied here](https://arguably.io/Darwin2049/ChatGPT4/PhaseShift).

- [Heisenberg's Uncertainty Principle](https://www.wikiwand.com/en/Uncertainty_principle). Werner Heisenberg formulated the now famous [Heisenberg Uncertainty Principle](https://www.youtube.com/watch?v=m7gXgHgQGhw) in 1927 and was awarded the 1932 Nobel Prize in Physics for the creation of quantum mechanics. His principle states that at the micro level of reality (i.e. atomic or subatomic) the position and the momentum of a particle cannot both be known with arbitrary precision: the product of the two uncertainties can never fall below a fixed minimum, Δx · Δp ≥ ħ/2. This follows from the particle/wave duality of all matter. Should we attempt to pinpoint the location of a particle ever more precisely, its momentum becomes correspondingly less certain. Conversely, the more precisely we measure its momentum, the less we can know about its position. The reality of the world at the quantum level is inherently counterintuitive. However innumerable physics experiments have demonstrated beyond doubt that this is the reality in which all material objects exist.
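The correlation described in the Entanglement item can be made concrete with a small sampling sketch. It is a classical simulation under stated assumptions, not a model of real hardware: the pair is assumed to be in the spin singlet state, both electrons are measured along the same axis, and the names `SINGLET`, `measure_pair` and `run_trials` are hypothetical choices made for this example. Each outcome is drawn with probability equal to the squared amplitude of the corresponding basis state.

```python
import math
import random

# Amplitudes over the joint basis states |00>, |01>, |10>, |11>,
# where 0 means spin up and 1 means spin down (an assumed encoding).
# The singlet state has weight only on the two opposite-spin outcomes.
SINGLET = [0.0, 1 / math.sqrt(2), -1 / math.sqrt(2), 0.0]


def measure_pair(state, rng):
    """Sample one joint measurement and return (first_spin, second_spin)."""
    # Born rule: the probability of an outcome is its squared amplitude.
    weights = [abs(amplitude) ** 2 for amplitude in state]
    outcome = rng.choices(range(4), weights=weights, k=1)[0]
    # The high bit is the first electron, the low bit is the second.
    return outcome >> 1, outcome & 1


def run_trials(n_trials, seed=0):
    """Measure n_trials independently prepared singlet pairs."""
    rng = random.Random(seed)
    return [measure_pair(SINGLET, rng) for _ in range(n_trials)]


if __name__ == "__main__":
    results = run_trials(10)
    for first, second in results:
        print("first:", first, "second:", second)
```

Each individual result for the first electron is random, yet the second electron always shows the opposite spin. This sketch only reproduces the statistics for same-axis measurements; it does not capture the behavior at other measurement angles, which is where entanglement departs from any classical explanation.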

The interested observer can find a number of useful references addressing the topics of quantum bits, entanglement and superposition.

In some cases they will prove to be fairly technical.