User:Darwin2049/chatgpt4 caveats

CAVEATS. The impressions above, from notable observers in the fields of artificial intelligence and geopolitics, are a small, unscientific sampling of positions. However, each of these individuals is well respected and well known in their field. From these we propose a few caveats and observations as a way forward.

We tentatively conclude that several factors should condition our synthesis going forward. These include the following premises about CG4:

- Inherently Dual Use Technology: it should be kept clearly in mind that further use of CG4 entails significant risks. The prospect that wholly unforeseen and unexpected uses will emerge must be accepted as the new reality. Like any new capability, it will be applied toward positive and benign outcomes, but we should not be surprised when adverse and negative uses emerge. Hopes for only positive outcomes are hopelessly unrealistic.

- Cognitive Prosthetic (CP): The kinds of tasks that CG4 can perform place it well beyond the category of an appliance; it is closer to a heavy industrial tool. Because it can adapt its functionality to user-defined tasks, it might more accurately be described as something akin to a prosthetic, but one that supports and extends human reasoning ability.

  CG4 possesses no sense of self, sentience, intentionality or emotion. Yet it continues to prove itself a very powerful tool for expression or problem solving.

  Given its power to offer cognitive amplification, we further suggest that it can form the basis of a more advanced form of symbolic representation and communication.

- Emergence: CG4 has shown novel and unexpected capabilities that its designers had not anticipated and that appear to have arisen spontaneously. However, no one so far is proposing that sentience, intentionality, consciousness or self-reference might be present: the lights are clearly on, but the doors and windows are still open and there is still nobody home.

- Moving Target: reporting on artificial intelligence, deep learning and large language models has bloomed, and the pace at which new topics appear has accelerated in recent weeks and months. As a result, it has become difficult to prioritize which topics to include in this discourse. Should a later version of this discussion emerge from this original attempt, it might do well to focus on specific subtopics.

- Hosting: Von Neumann Architecture: to date, CG4 runs on an ensemble of von Neumann machines; as currently accessed, it has been developed on and is hosted by classical computing equipment. Despite this, it has shown a stunning ability to provide context-sensitive and meaningful responses to a broad range of queries. However, its fundamental modeling approach has shown itself prone to actions that can intercept or subvert its intended, agreeable functionality. How successfully this "alignment" problem will be solved remains to be seen. As training data sets grew in size, processing power demands grew dramatically. Consequently, the more sophisticated LLMs such as CG4 and its peers have required environments that are massively parallel and operate at state-of-the-art speeds, which has meant bringing some of the most powerful computing environments into play to keep training times within acceptable limits. Moving to still larger training data sets while keeping training times manageable may call for hosting on exa-scale computing environments such as the El Capitan system or one of its near-peer processing ensembles.
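The link between training data size, processing power and training time can be sketched as simple arithmetic. The Python below is a minimal illustration, assuming the commonly cited rule of thumb that training a dense transformer costs roughly 6 × parameters × tokens floating point operations, and assuming a constant sustained throughput with no efficiency losses. The model size and token count are hypothetical, not published figures for CG4, and the function names are illustrative only.

```python
SECONDS_PER_DAY = 86_400


def training_flops(parameters: float, tokens: float) -> float:
    """Approximate training compute with the ~6 * N * D rule of thumb (an assumption)."""
    return 6.0 * parameters * tokens


def training_days(total_flops: float, sustained_flops_per_second: float) -> float:
    """Wall-clock days to finish, assuming a constant sustained throughput."""
    return total_flops / sustained_flops_per_second / SECONDS_PER_DAY


if __name__ == "__main__":
    # Hypothetical sizes chosen only for illustration, not CG4's actual figures.
    params = 1e11  # 100 billion parameters
    tokens = 1e12  # 1 trillion training tokens
    budget = training_flops(params, tokens)

    for label, rate in [("peta-scale, 1e15 FLOP/s", 1e15),
                        ("exa-scale, 1e18 FLOP/s", 1e18)]:
        print(f"{label}: {training_days(budget, rate):,.1f} days")

    # Doubling the data doubles the compute, and so doubles the time at a fixed rate.
    doubled = training_flops(params, 2 * tokens)
    print(f"2x data at 1e18 FLOP/s: {training_days(doubled, 1e18):,.1f} days")
```

Under these assumptions the same job that takes years at peta-scale rates finishes in days at exa-scale rates, and any growth in the data set translates directly into longer training unless throughput grows with it.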

The current high ground of computing is exa-scale supercomputing. At this scale, throughput is measured in exaflops (EF): quintillions (10^18) of floating point operations per second. The cost of building and operating these machines is typically so high that only national governments can afford it, and they are typically used for the most advanced scientific, engineering and national defense work. Most are built from processing elements called blades, each of which combines a complete processor with its memory and communications hardware on a single board. In the highest-throughput machines, communication between blades is carried over fiber optic links to achieve the highest possible data transfer rates.
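To make the difference in scale concrete, here is a minimal Python sketch that converts floating point throughput between SI prefixes. The dictionary and function names are illustrative; the only facts it encodes are the standard SI multipliers (giga = 10^9, tera = 10^12, peta = 10^15, exa = 10^18).

```python
# Standard SI multipliers used when quoting floating point operations per second.
SI_PREFIXES = {"giga": 1e9, "tera": 1e12, "peta": 1e15, "exa": 1e18}


def convert_flops(value: float, from_prefix: str, to_prefix: str) -> float:
    """Re-express a FLOP/s figure under a different SI prefix."""
    return value * SI_PREFIXES[from_prefix] / SI_PREFIXES[to_prefix]


if __name__ == "__main__":
    print(convert_flops(1, "exa", "tera"))  # prints 1000000.0: one exaflop is a million teraflops
    print(convert_flops(1, "exa", "peta"))  # prints 1000.0
```

A machine rated in teraflops and one rated in exaflops therefore differ by a factor of a million, which is why the unit matters when describing exa-scale systems.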

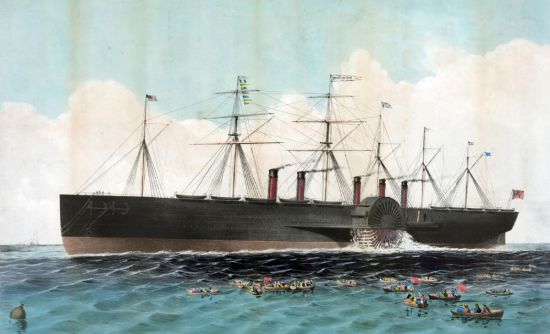

Yet although these machines represent the peak of current computing capability, they will shortly be superseded by devices that render them museum pieces. A close analogy is the inexorable improvement in sailing technology during the eighteenth and nineteenth centuries.

At the height of nautical technological advancement, the clipper ship represented the high end of sailing technology. These ships carried many more sails than earlier sailing ships, were long and narrow, and carried relatively little cargo. However, they were very fast compared with earlier designs.

Likewise, exa-scale computing machines will be eclipsed by the coming advent of quantum computing devices. Initially, they may serve as simple "front end" or communications devices for the machines that follow.

Exa-Scale Computing. The El Capitan supercomputer is scheduled to begin operation at Lawrence Livermore National Laboratory in California sometime in 2024. Performance estimates suggest that it will be capable of operating at 2 exaflops (2 EF). It is expected to move data between its blades at a rate of 12.8 terabits per second. Though this machine may be the fastest computing ensemble on the planet when it becomes operational, it will still use the von Neumann architecture model. The upcoming transition to quantum computing will make it an example of the height of classical computing architecture, in much the same way that clipper ships represented the height of sail-based technology just before the advent of the steamship.
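The quoted interconnect rate is easier to interpret once it is converted to bytes and applied to a payload. The Python below is a minimal, idealized calculation: it assumes the 12.8 terabits per second figure applies to a single link, ignores latency, congestion and protocol overhead, and uses a hypothetical 1 terabyte payload. The constant and function names are illustrative.

```python
BITS_PER_BYTE = 8
LINK_RATE_BPS = 12.8e12  # 12.8 terabits per second, the per-blade figure quoted in the text


def transfer_seconds(payload_bytes: float, link_bits_per_second: float) -> float:
    """Idealized time to move a payload over one link (no latency or protocol overhead)."""
    return payload_bytes * BITS_PER_BYTE / link_bits_per_second


if __name__ == "__main__":
    # The same rate expressed in terabytes per second.
    print(LINK_RATE_BPS / BITS_PER_BYTE / 1e12)  # prints 1.6
    # A hypothetical 1 terabyte payload (1e12 bytes).
    print(transfer_seconds(1e12, LINK_RATE_BPS))  # prints 0.625
```

In practice, effective throughput is lower than the nominal link rate, so real transfers take longer than this idealized figure.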