Chatgpt4 version04

OpenAI - ChatGPT4.

In what follows we attempt to address several basic questions about the onrushing progress of artificial intelligence, which is the current focus of attention. There are several competing actors in this space. These include OpenAI, DeepMind, Anthropic, and Cohere. A number of other competitors are active in the artificial intelligence marketplace. But for purposes of brevity and because of the overlap we will limit our focus to ChatGPT4 (CG4). Further, we focus on several salient questions that raise issues of safety, risk and prospects.

Specifically, risks that involve or are:

- Interfacing/Access: how will different groups interact with, respond to and be affected by it; might access modalities available to one group have positive or negative implications for other groups;

- Political/Competitive: how might different groups or actors gain or lose relative advantage; also, how might it be used as a tool of control;

- Evolutionary/Stratification: might new classifications of social categories emerge; were phenotypical bifurcations to emerge, how would they manifest themselves;

- Epistemological/Ethical relativism: how to reconcile ethical issues within a society, between societies; more specifically, might it provide solutions or results that are acceptable to the one group but unacceptable to the other group; recent attention has been drawn to the evidence that an LLM such as CG4 may begin to exhibit sycophancy in its interactions with a user, even if the value stance of the user can be considered as an equivocation;

Synthesis. Responding to these questions calls for some baseline information and insights about the issues that this new technology entails. We propose to look at the following:

- Terms are included to help clarify crucial elements and contextualize CG4;

- Sentiment is being expressed about it by knowledgeable observers;

- Theory of Operation of the technology paradigm used to produce its results;

- Risks: our approach has been to present a few commonly occurring risks, whether inherent or malicious, as well as some theoretical risks that might emerge;

- Insights are offered to serve as takeoff points for subsequent discussion;

Terms and Basic Concepts.

CG4 has demonstrated capabilities that represent a significant leap forward in overall capability and versatility beyond what has gone before. Any attempt to assess prospective risks should be revisited at a later date as more reporting and insights come to light. CG4 has already demonstrated that new and unforeseen risks are tangible; in some instances novel and unforeseen capabilities have been reported. It is with this in mind that we attempt here to offer an initial profile or picture of the risks that we should expect to see with its broader use. By way of addressing this rapidly expanding topic we offer our summary along the following plan of discourse:

Overview and Impressions:

- what has emerged so far; some initial impressions are listed;

- next are some caveats that have been derived from these impressions;

Theory of Operation: for purposes of brevity a thumbnail sketch of how CG4 performs its actions is presented;

- included are some high level diagrams

- also included are links to several explanatory sources; these sources include articles and video content;

Our thesis identifies three primary types of risks; these include:

- systemic: these are inherent as a natural process of ongoing technological, sociological advance;

- malicious: who the known categories of actors are; how might they use this new capability;

- theoretical: possible new uses that might not heretofore have been possible;

Notes, References: We list a few notable portrayals of qualitative technological or scientific leaps;

Sentiment and Impressions, An Overview. Early on, some observers were favorable while voicing moderate caution; others were guarded or expressed anything from caution to serious fear.

Optimism and Interest.

- Dr. Jordan Peterson. During his discussion with Brian Roemmele many salient topics were covered. Of note were some germane capabilities such as:

- super prompts; Roemmele revealed that he was responsible for directing CG4 to focus specifically upon the topic that he wished to address and omit the usual disclaimers and preamble information that CG4 is known to issue in response to a query.

- discussions with historical figures; they posited that it is possible to engage with CG4 such that it can be directed to respond to queries as if it were a specific individual; the names of Milton, Dante, Augustine and Nietzsche were mentioned. Peterson also mentions that a recent project of his is to enable a dialog with the King James Bible.

- among the many interesting topics that their discussion touched on was the perception that CG4 is more properly viewed as a 'reasoning engine' rather than just a knowledge base; essentially it is a form of intelligence amplification;

- Dr. Alan Thompson. Thompson focuses his video production on recent artificial intelligence events. His recent videos have featured CG4 capabilities via an avatar that he has named "Leta". He has been tracking the development of artificial intelligence and large language models for several years.

- Leta is an avatar that Dr. Thompson regularly uses to illustrate how far LLMs have progressed. In a recent post his interaction with Leta demonstrated the transition in capability from the prior underlying system (GPT3) to the newer one (GPT4).

- Of note are his presentations that characterize where artificial intelligence is at the moment and his predictions of when it might be reasonable to expect to witness the emergence of artificial general intelligence.

- A recent post of his titled In The Olden Days portrays how many of society's current values, attitudes and solutions will be viewed as surprisingly primitive in coming years.

- Dr. Neil deGrasse Tyson. In a recent episode of Valuetainment, Patrick Bet-David discussed CG4 with Dr. Tyson. A lot of people in Silicon Valley will be out of a job.

- A salient observation that was brought forward was that the state of artificial intelligence at this moment, as embodied in the CG4 system, is not something to be feared.

- Rather his stance was that it has not thus far demonstrated the ability to reason about aspects of reality in ways that no human has yet done.

- He cites a hypothetical example of himself whereby he takes a vacation. While on the vacation he experiences an engaging meeting with someone who inspires him. The result is that he comes up with totally new insights and ideas. He then posits that CG4 will have been frozen in its ability to offer insights comparable to those that he was able to offer prior to his vacation. So the CG4 capability would have to "play catch up". By extension it will always be behind what humans are capable of.

Caution and Concern:

- Dr. Bret Weinstein. Dr. Weinstein has been a professor of evolutionary biology. His most recent teaching post was at The Evergreen State College in Washington.

- In a recent interview with Joe Rogan the two discussed how quickly the area of artificial intelligence has been progressing. Rogan asked Weinstein to comment on where the field appeared to be at that moment and where it might be going. In response Weinstein's summary was that "we are not ready for this".

- Their discussion touched on the fact that CG4 had shown its ability to pass the Wharton School of Business MBA final exam as well as the United States Medical Licensing Exam.

- He makes the case that the current level of sophistication that CG4 has demonstrated is already very problematic.

- By way of attempting to look forward he articulates the prospective risk of how we as humans might grapple with a derivative of CG4 that has in fact become sentient and conscious.

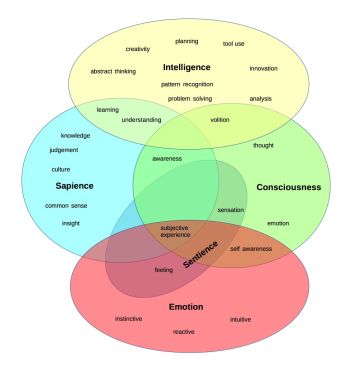

- Dr. Joscha Bach. Bach is a researcher on the topic of artificial consciousness. During an interview, Lex Fridman focuses the discussion on whether or not a derivative of CG4 could become conscious. Bach makes the case that CG4 is not conscious and possesses no sentience, intentionality or emotional capability. Therefore expectations that it might spontaneously decide to "best" humans are unfounded.

- He states the position that a large language model would not be an effective way to arrive at an entity that possessed self consciousness, sentience, motivation and intentionality.

- Asked about the possibility that CG4 or a derivative might become conscious, his position was that the very basis of the large language model would not be an appropriate approach to creating any of the processes that appear to underpin human consciousness. His premises include that large language models are more like the mythical golem of seventeenth-century Eastern European literature. Crucially, LLMs have no self reflexivity.

- LLMs are simply executing tasks until a goal is met. This again points to the position that "the lights are on but the doors and windows are open and there is nobody home". It would be better to start with self organizing systems rather than predictive LLMs. In the discussion he points out that some form of self-model would have to be discovered by the system, which would also have to recognize that it was modeling itself.

- Bach suggests that instead of using an LLM to attempt to arrive at a real time self reflective model of consciousness comparable to human consciousness we should start from scratch. Interestingly, even given the willingness to pursue this path, he advocates caution.

- He adds the interesting point that in order to pursue such a path it would be necessary to start from an operating environment that was based upon some form of representation of ground truth. Otherwise a situation could develop in which no one really knows who is talking to whom. Existing LLMs offer no comparable world-model that an artificial consciousness can embed itself within.

- Dr. Alex Karp (CEO - Palantir). Dr Karp is the CEO of a data mining company. Palantir has focused on providing analysis services to a range of national security and national defense organizations. The company has developed its relationship with these agencies over two decades. It espouses the crucial role that advanced technology provides to the safety and security of the nation as well as how it provides global stability in several different venues.

- He has put a spotlight on the fact that many Silicon Valley technology companies shy away from supporting US national security. This despite the fact that their successes stem directly from the fact that the US has provided the economic, political, educational, legal and military infrastructure that has enabled them to incorporate, grow and develop.

- Given Palantir's close relationships with national security and national defense organizations it has a far greater sense of the realities and risks of not forging ahead with all possible speed to equip the nation's defenses and provide for those charged with seeing to the nation's security.

Caution to Alarm.

- Eliezer Yudkowsky. Yudkowsky is a polymath who has been following the development of LLMs for several years. Since the release of CG4 he has frequently spoken out publicly about the risk that humanity may face extinction should an artificial general intelligence emerge from current research.

- During an interview with Lex Fridman he expressed fear that the field of artificial intelligence is progressing so rapidly that humans could find themselves facing the prospect of an extinction event. His concern is that it could evolve with such rapidity that we as humans would be unable to assess its actual capacities and as such be unable to make reliable determinations as to whether it was presenting its authentic self or a dissembling version of itself. His position is that artificial intelligence will kill us all.

- He has made several presentations regarding the potential downsides and risks that artificial intelligence might pose. His specific concerns are that a form of intelligence could crystallize as a result of normal operation in one or another of the current popular systems. These generally are considered to be the large language models such as ChatGPT4.

- The DeepMind system is one that is believed to be comparably sophisticated. The Meta variant known as Llama is also of sufficient sophistication that an unexpected shift in capability as a result of some unforeseen emergent capability could escape the control of its human operators.

- A recent development at the Meta artificial intelligence lab resulted in pausing the activities of an emergent capability. This capability seemed to suggest that two instances of the AI were exchanging messages using a private language that they had just developed.

- Elon Musk. Musk was an early investor in OpenAI when it was still a private startup.

- He has tracked development of artificial intelligence both in the US and at DeepMind in the UK. He has recently enlisted 1100 other prominent personalities and researchers to sign a petition to be presented to the US Congress regarding the risks and dangers of artificial intelligence.

- His observation is that regulation has always happened by government after there has been a major adverse or tragic development. In the case of artificial intelligence his position is that the pace of development is such that a moment in time is quickly approaching when a singularity moment arrives and passes.

- Humanity unwittingly unleashing an AGI is a very serious risk. It is at that moment that humanity may discover that it has unleashed a capability that it is unable to control.

- Gordon Chang. Chang says that the US must forge ahead with all possible speed and effort to maintain its lead against the PRC in the area of artificial intelligence. He asserts that the PRC's culture, values and objectives are adversarial and that it will use whatever means necessary to achieve a commanding edge and dominance with this capability in all of its geopolitical endeavors. His earlier public statements have been very explicit that the West faces an existential crisis from the CCP and that it will use any and all means, including artificial intelligence, to gain primacy.

Indirect Alarm.

In several cases highly knowledgeable individuals have expressed concern about how the PRC has been positioning itself to become the world leader in artificial intelligence. Their backgrounds span journalism, diplomacy, the military and Big Tech.

Some observers have explicitly suggested that the role of artificial intelligence will be crucial going forward. In a few cases their pronouncements preceded the release of such powerful tools as GPT3 and GPT4.

Following are several individuals who have been raising attention or sounding the alarm about the risk that external geopolitical actors pose. We should note in reviewing them that in the case of those PRC and CCP watchers the risks are clear, imminent and grave.

- Kai-Fu Lee. He is a well known and respected expert in the area of artificial intelligence. He is known to be leading the charge to make the PRC into a world class leader in AI, an AI Superpower.

- He leads Sinovation Ventures, which is a venture capital firm with the charter to start up five successful startups per year.

- Recent pronouncements have suggested that advances in artificial intelligence can be co-operative rather than adversarial and confrontational. Events in the diplomatic sphere suggest otherwise.

- His efforts provide the gestation environment needed to launch potential billion dollar major concerns.

- Bill Gertz. Bill Gertz is an author who has published several books that focus on technology and national security. A recent book titled "Deceiving the Sky" warns of a possible preemptive action on the part of the PRC. His work details how the CCP has launched a detailed, highly focused set of actions that will enable it to marginalize and neutralize the current "hegemon", i.e. the USA.

- He posits that the US could find itself at a serious disadvantage in a contest with the PLA and the PLAN.

- By way of clarification he points out that the leadership of the CCP has been building out an increasingly sophisticated military which it intends to use to modify, by force if necessary, the global geopolitical order.

- Additionally, other sources, such as explicit statements by President Vladimir Putin of the Russian Federation, state clearly that control of artificial intelligence will be a make or break development for whatever nation-state reaches the high ground first.

- Dr. Graham Allison. Dr. Allison is a professor at Harvard University. A recent work of his, published in 2017, is titled Destined for War. Dr. Allison places front and center the reality that only a minority of power transitions have been peaceful. Of sixteen power transitions twelve have been resolved through war.

- In this volume he develops the position that there have been sixteen historical instances where a newcomer power challenged the reigning hegemonic power with the intent of displacing it.

- Of those sixteen only four were transitioned in a peaceful manner. The rest were accomplished by armed conflict.

- In several chapters Dr. Allison details how the leadership of the CCP has focused the economic development of the PRC. He presents detailed charts and graphs that clarify the importance of not only national GDP numbers but also the crucial importance of purchasing power parity (PPP).

- His thesis takes the direction that the PRC has an innate advantage due to its far larger population base. Further, the leadership of the CCP possesses authoritarian powers to control the population and the narrative that informs the population.

- He shows how a pivot point will be reached at which the GDP of the PRC, measured at purchasing power parity (PPP), will exceed that of the USA. When this happens:

- a) the economy of the PRC will be larger than that of the USA;

- b) the PRC will be able to wield greater political and geopolitical influence worldwide;

- c) during this period of time the risks of armed conflict will reach their maximum.

- Amb. (ret.) Dr. Michael Pillsbury. Deep, lucid insight into CCP, PRC doctrine, philosophy, world view (Mandarin fluent).

- Dr. Pillsbury details how the CCP has mobilized the entire population of the PRC to prepare to seize the high ground in science and technology - through any means necessary.

- His publication The Hundred-Year Marathon goes into great detail about how a succession of CCP leaders has been gradually repositioning the PRC to be a major global competitor. He makes very clear that the PRC is a command economy comparable to how the former USSR functioned.

- He clarifies how the CCP leadership has focused its thinking to position itself to displace what it views as the "reigning hegemon - the USA". A study of this work makes very clear that the CCP leadership takes very seriously its charter to become the world's global leader in all things.

- A list of crucial sciences and technologies is presented showing that the CCP plans to own and control anything that matters. That the PRC is an authoritarian society is made very clear as well.

- Dr. Pillsbury provides background and context into the values and mindset of the current CCP leader, Xi Jinping. When there is a fuller recognition of just how implacably focused this leader is, there might be a sea change in how the US and the West refocus their efforts to meet this growing challenge.

- Brig. Gen (ret.) Robert Spalding III. Provides a catalog of insightful military risks, concerns.

- Stealth War: details how the CCP has directed its various agencies to identify and target US vulnerabilities; he clarifies that the CCP will use unrestricted warfare, i.e. warfare through any means necessary, to accomplish its goals.

- The CCP is the main, existential threat: In chapter after chapter he details how the CCP uses intellectual property theft - which is the actual know-how and detailed design information needed to replicate a useful new high tech or scientific breakthrough; thus saving themselves years and billions of dollars;

- financial leverage: it uses American financial markets to raise money to develop its own military base; yet its companies are not subjected to the same accounting and reporting standards as are US companies;

- espionage: it uses spies to identify, target and acquire American know how, technology and science for its own advantage which it then perfects at minimum cost and uses against its adversaries;

- debt trap diplomacy: several countries belatedly came to recognize the dangers of the Belt and Road initiative; the PRC approach meant appearing to offer a great financial or infrastructure deal to a third world country for access to that country's infrastructure; when the country found itself struggling with meeting the terms of the agreement it discovered that the CCP would unilaterally commandeer some part of the country's infrastructure as a form of repayment;

- unfair trade practices: such as subsidized shipping for any and all products made in the PRC; trading partners are faced with import tariffs;

- trading partner surrender: it also demands that the Western partner share with it any and all practices and lessons learned by the Western partner;

- infiltration of American society with subversive CCP institutions such as Confucius Institutes on college campuses, which are front organizations that serve as collection points for espionage activities;

- Dr. A. Chan & Lord Ridley published a book titled Viral in 2021. Though subsequent investigative reporting did not directly address the questions of how AI tools like DeepMind or CG4 might be used to fabricate a novel pathogen, that reporting has made clear that these tools can definitely be employed to drive research on the creation of extremely dangerous pathogens.

- Their work provides a deeply insightful chronology of the genesis and progression of the COVID-19 pandemic.

- Dr. Chan and Lord Ridley provide a detailed and extremely well documented case that draws attention directly to the influence that the CCP has had with the World Health Organization (WHO). It then used that influence to deflect attention away from its own role by claiming that the COVID-19 outbreak happened as a result of zoonotic transmission.

- The CCP leadership attempted to build the case that the outbreak happened as a result of mishandling of infected animals at a "wet market". A "wet market" is an open air facility that specializes in the purchase of live exotic animals. These animals are typically offered for personal consumption. Some are presented because of claims of their beneficial health effects.

- They go on to describe how the CCP oversaw misinformation regarding its pathogenicity and infectivity. Their account shows that they had a number of extremely insightful contributors working with them.

- Through their efforts the team was able to show how the Wuhan Institute of Virology (WIV) attempted to confuse crucial insight about the COVID-19 virus.

- Crucial insights that are offered include the fact that genetically modified mice were used in a series of experiments. These genetically modified or chimeric mice had human airway tissue spliced into their DNA.

- The following additional notes are included as a means to draw attention to the reality of achieving advances in biotechnology at a rate outpacing what has already happened with the SARS-CoV-2 virus.

- Passaging: The technique called "passaging" is a means of increasing the infectivity and lethality of a particular pathogen through targeted rapid evolution. It is used to introduce a pathogen, in this case a virus, into a novel environment. This means that the virus has no "experience" of successfully lodging itself in the new organism. Likewise that organism's immune system has no experience in combatting it;

- Chimeric Organisms are used: initially a target set of organisms is chosen for the intended pathogen; in the case of SARS-CoV-2, chimeric mice.

- Chimeric Offspring: several generations of chimeric mice were used to incorporate genetic information using human DNA;

- Specific Target: DNA that was spliced into the virus genome was selected to human airway passage tissue; the pathogen was explicitly intended to attack the organism's respiratory organs;

- Initial Infection: These mice were then inoculated with the SARS-CoV-2 virus; initially they showed little reaction to the pathogen.

- Iterative Infections were performed: These mice produced offspring which were subsequently inoculated with the same pathogen;

- Directed Evolution via Passaging: After a number of iterations the result was a SARS-CoV-2 virus that had undergone several natural mutation steps and was now extremely infective and lethal; this more dangerous variant mandated highly specialized and sophisticated personal protection equipment and an extremely isolated work environment;

- Increasing Infectivity: Iterative passaging increased the infectivity and lethality of the virus as expected;

- Highly Contagious and Dangerous: Ultimately the infectivity and lethality of the mutated virus reached a stage where it could be weaponized;

- Accidental Handling: Any kind of release, whether intended or unintended, could successfully spread the virus via aerosol or airborne transmission;

- Only the WIV could do this: Ongoing reporting and investigative work has conclusively demonstrated that the Wuhan Institute of Virology (WIV) was the source of the COVID-19 pandemic;

- National Lockdown: Of note is the extremely curious fact that the CCP imposed a nationwide lockdown that went into effect 48 hours after being announced.

- With "Leaks": The CCP imposed lockdown measures which in some cases proved to be extreme.

- International Spreading was Allowed: Further puzzling behavior on the part of the CCP is the fact that international air travel out of the PRC continued for one month after the lockdown.

- Military Applications: Subsequent research by the team and their collaborators has demonstrated that the Wuhan Institute of Virology (WIV) has been driven by military, bioweapon considerations.

- Unrestricted Warfare: Extensive investigation revealed that the PLA had been studying how to weaponize an engineered virus as recently as 2016 and published a report on how best to use such a weapon.

- Dr. Eric Li. CCP Venture Capitalist. Dr. Li is a Chinese national. He was educated in the US at the University of California at Berkeley and later at Stanford University. His PhD is from Fudan University in Shanghai, China. He has focused his resources on the growth and development of PRC high technology companies.

- During a presentation at TED Talks Dr Li made the case that there are notable value differences between the Chinese world view and that typically found in the West.

- In his summary of value comparisons he builds the position that the Chinese model works in ways that Western observers claim that it does not. He champions the Chinese "meritocratic system" as a means of managing public affairs.

CAVEATS.

The above impressions by notable observers in the fields of artificial intelligence and geopolitics are a small, unscientific sampling of positions. However in each case these individuals are well respected and known in their fields. From these we propose a few caveats and observations as a way forward.

We propose the tentative conclusion that several factors should condition our synthesis going forward. These include the premises that CG4:

- Inherently Dual Use Technology: it should be kept clearly in mind that its further use entails significant risks; the prospect that totally unforeseen and unexpected uses will emerge must be accepted as the new reality; like any new capability it will be applied toward positive and benign outcomes but we should not be surprised when adverse and negative uses emerge. Hopes of only positive outcomes are hopelessly unrealistic.

- Cognitive Prosthetic (CP): The kinds of tasks that CG4 can perform suggest that it is well beyond the category of an appliance and is closer to a heavy industrial tool. Because of its ability to adapt its functionality to user defined tasks it might be more accurately described as something akin to a prosthetic, but in this case one that supports and extends human reasoning ability.

CG4 possesses no sense of self, sentience, intentionality or emotion. Yet it continues to demonstrate itself to be a very powerful tool for expression or problem solving.

Given its power to offer cognitive amplification we suggest further that it can form the basis of a more advanced form of symbolic representation and communication.

- Emergence: it has shown novel and unexpected capabilities; capabilities that the designers had not anticipated have spontaneously arisen; however, no one so far is proposing that sentience, intentionality, consciousness or self reference might be present; the lights clearly are on but the doors and windows are still open and there is still nobody home.

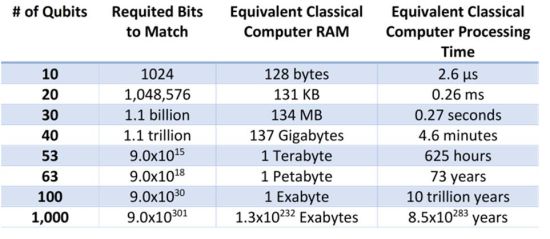

- Hosting: von Neumann Architecture: to date, CG4 is functioning on an ensemble of von Neumann machines. As currently accessed, it has been developed and is hosted on classical computing equipment. Despite this fact it has shown a stunning ability to provide context sensitive and meaningful responses to a broad range of queries. However, its fundamental modeling approach has shown itself to be intrinsically prone to actions that can intercept or subvert its intended agreeable functionality. To what extent this "alignment" problem can be solved going forward remains to be seen. As training data sets grew in size, processing power demands grew dramatically. Subsequently the more sophisticated LLMs such as CG4 and its peers have required environments that are massively parallel and operate at state of the art speeds. This has meant that in order to keep training times within acceptable limits some of the most powerful environments have been brought into play. Going to larger training data sets while keeping training times acceptable may call for hosting on exa-scale computing environments such as the El Capitan system or one of its near peer processing ensembles.

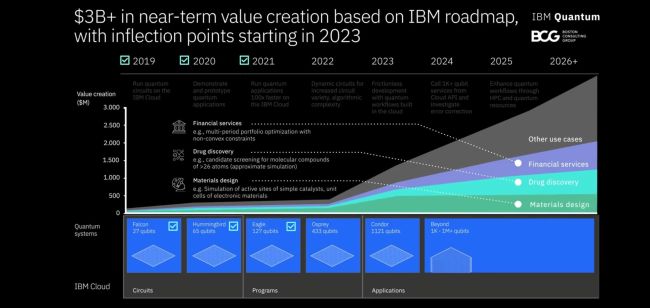

Hosting: Quantum Computing. Because of the inherent novelty of what a quantum computing environment might make possible the following discussion limits itself to what is currently known.

Published reports from giants such as IBM, Intel and Google lay out explicit timelines and project goals. In each case the expected goal of qubit availability is provided.

What has already been made very clear is that even with the very limited quantum computing capabilities currently available, these systems prove themselves to be orders of magnitude faster on certain specialized problems than even the most powerful classical supercomputer ensembles.

If we take a short step into the near term future then we might be obliged to attempt to assimilate and rationalize developments happening on a daily basis. Any or even all of which can have transformative implications.

The upshot is that as quantum computing becomes more prevalent the field of deep learning will take another "quantum leap" forward, literally, which will put those who possess it at an incalculable advantage. It will be like trying to go after King Kong with a Spad S. firing BB pellets.

The central problem in these most recent developments arises because of the well recognized human inability to process changes that happen in a nonlinear fashion. If change is introduced in a relatively linear fashion at a slow to moderate pace then most people adapt to and accommodate the change. However if change happens geometrically like what we see in the areas of deep learning then it is much more difficult to adapt to change.
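The contrast between linear and geometric change can be made concrete with a small numerical sketch. The starting values, the step size of 10 and the doubling ratio below are illustrative assumptions only and are not measurements of any real system; the function names are hypothetical.

```python
def linear_series(start, step, periods):
    """Values that grow by a fixed amount each period."""
    return [start + step * n for n in range(periods + 1)]


def geometric_series(start, ratio, periods):
    """Values that are multiplied by a fixed ratio each period."""
    return [start * ratio ** n for n in range(periods + 1)]


def first_period_exceeding(linear, geometric):
    """Return the first period in which the geometric value is larger."""
    for n, (lin, geo) in enumerate(zip(linear, geometric)):
        if geo > lin:
            return n
    return None


# Assumed, illustrative parameters: add 10 per period versus doubling per period.
linear = linear_series(1.0, 10.0, 20)
geometric = geometric_series(1.0, 2.0, 20)
crossover = first_period_exceeding(linear, geometric)

print("geometric overtakes linear at period", crossover)
print("after 20 periods: linear =", linear[20], "geometric =", geometric[20])
```

Under these assumptions the doubling process looks unremarkable for the first few periods, then overtakes the linear one and ends several orders of magnitude ahead, which is the pattern that is hard to anticipate intuitively.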

- CG.X-Quantum will completely eclipse current incarnations of CG4: Quantum computing devices are already demonstrating their ability to solve in seconds or minutes certain problems that classical von Neumann computing machines would require decades, centuries or even millennia to solve.

- Phase Shift. Classical physics describes how states of matter possess different properties depending upon their energy state or environment. Thus on the surface of the earth we can experience the gas of the atmosphere. In environments that are somewhat above the freezing point of water we are unaware of the fact that we inhale and exhale atmospheric gas. It is odorless, colorless and tasteless.

- STATE: Gas. Were we to collect sufficient quantities of atmospheric gas into a sealable container it would be possible to cool the gasses comprising earth's atmosphere into liquids. The idea of wetness, shape conformability and other properties of a liquid would suddenly become evident. Yet it would be difficult to adduce wetness if we had never had experience with liquids.

- STATE: Liquid. If one had never had contact with the liquid state of H2O then properties such as buoyancy, wetness, conformability (i.e. to the shape of a container), evaporation, discoloration and similar properties might be very difficult to imagine. It would be difficult if not impossible to envision that something otherwise undetectable, because it was odorless, colorless and tasteless, could somehow cause one's death by drowning. Yet were one to be plunged into a large enough body of water without possessing swimming skills one could very well die by drowning.

- STATE: Solid. The process can be repeated. If we were to use water as a basis then we might discover another state of matter that water can exhibit. This is the state of ice. Many of us use this material (ice) to condition our beverages. That a heretofore wet liquid could become solid might also defy our ability to imagine it. These all consist of the same substance, i.e. H2O. Yet properties found in one state, or phase, bear little or no resemblance to those in the subsequent state. We should expect to see a comparable evolution, happening in fast forward motion, much like "gas to liquid, liquid to solid". When DeepMind or CG4 are re-hosted in a quantum computing environment equally unimaginable capabilities will become the norm.

The analyst can find a number of useful references addressing the topics of quantum bits, entanglement and superposition: The Quantum Leap, QuBits and Scaling, and Some very useful basics. In some cases they will prove to be fairly technical.

A quick peek at this scaling chart shows that the IBM-Q-System TWO with 432 QuBits is completely off of the chart. As the associated video shows, IBM plans to offer systems joined together in trios, which will yield well over one thousand qubits. Making a conceptual or imaginative leap to what this kind of capability means requires considerable insight and a mental framework that draws on value scales either only poorly understood or understood by very few alive today.

RESCALING.

Progress has moved forward apace in the world of quantum computing. By and large most members of the public have little awareness of its reality or what it might mean. What might be worth pondering briefly are the numbers in this comparison table. Note that with relatively small numbers of QuBits the amount of computational capacity becomes extremely large by contemporary standards. If we look at the advertised progress that IBM is making with its Osprey and Quantum System Two (432 QuBits, per its roadmap) devices then we are seeing computational capacity that requires a redefinition of our understanding of what is possible.
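The scaling noted above can be made concrete with a little arithmetic. An n-qubit register is described by 2^n complex amplitudes, so a classical machine that stores the full state needs memory that doubles with every added qubit. The sketch below assumes dense state-vector storage at 16 bytes per amplitude (two 64-bit floats); the function names are illustrative, and the figures describe only the size of the state space, not the useful speed of any particular quantum device.

```python
BYTES_PER_AMPLITUDE = 16  # assumption: one complex number stored as two 64-bit floats


def amplitudes(n_qubits: int) -> int:
    """Number of complex amplitudes in the state vector of n_qubits qubits."""
    return 2 ** n_qubits


def state_vector_bytes(n_qubits: int) -> int:
    """Bytes needed to hold the full state vector classically (dense storage)."""
    return amplitudes(n_qubits) * BYTES_PER_AMPLITUDE


if __name__ == "__main__":
    for n in (10, 30, 50, 100):
        print(f"{n:>4} qubits: {amplitudes(n):.3e} amplitudes, "
              f"{state_vector_bytes(n):.3e} bytes")
```

Under these assumptions a 30-qubit state already occupies 16 GiB, and a 50-qubit state roughly 18 petabytes, which is why even a few hundred qubits lie far beyond what any classical memory could represent directly.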

Taking a very short leap of the imagination one might envision the capability of modeling molecular processes in fast forward time. One can imagine creating a Sim City type model with a large to very large number of agents, hundreds, thousands or tens of thousands where each has very detailed knowledge about their own domain. They might have comparable communications channels as those described in the "Sim City" experiment. The processing likewise being sped up by an arbitrary scaling factor.

Molecular modeling at quantum computing speed can mean that any number of parallel pathways can be examined and fine tuned almost instantly. Existing experiments with various deep learning based systems have shown that they are capable of creating their own experimental validation and verification processes and actions. They would only need some human intervention for equipment assembly and setup. With that done, such a system could overnight be capable of exploring various experimental pathways forward that optimize a particular molecular structure for a highly specific purpose, or combination of purposes, and then recommend the processing steps to create it.

This kind of capability suggests that heretofore unforeseen or unimagined psycho-actives might become available in the very near term. Usage of such capabilities could mean a bifurcation of humans from one species to a successor species. This cannot reasonably be ruled out.

Geopolitical and geo-economic modeling at quantum speed can mean the creation of plans through which one's own national strengths and standing could be considerably enhanced, or those of a rival equally diminished or, worse, damaged. This follows from the ability to direct such a system to use known historical facts and rules of human endeavor to play a model forward and see what kinds of outcomes might obtain. To take a case in point, one might use such a capability to play forward the events that led up to The Great War, or World War One, to explore whether other actions might have been available to the leaders of the time to avert the catastrophe that ensued.

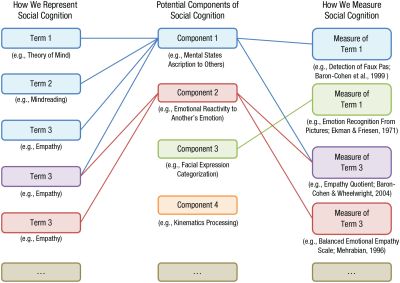

Interface, Interaction, Theory of Mind. A number of informed observers have advocated for the refinement of the Turing Test. Given a system capable of incorporating theory of mind, human psychology and behavior as well as other factors that enable humans to navigate as agents in the real world, one can imagine a situation where interacting with it might seem utterly indistinguishable from interacting with an extraordinarily brilliant individual. This might derive from its ability to probe and assess innumerable possible reasons why a person might be pursuing a line of thought or reasoning. One might experience this kind of system as conversing with the most knowledgeable, insightful, empathetic and reasonable individual ever. Such an experience could prove to be extremely seductive. Were such a capability to be available, it becomes very difficult to foresee the full range of uses to which it could be put.

One can imagine a user interface that is based upon a variant or fine-tuned derivative of CG4. It would be capable of speech recognition and generation. Its behavior repertoire would include fail-soft disambiguation, which means that it would immediately recognize when it had not anticipated or correctly interpreted a reference or contextual segment of text or verbiage. It might further retain this insight for future reference as a "by way of example" instance that the user could use to refer back to a prior discussion.

It would be able to push its current state onto a stack and backtrack to its last point of successful interpretation and request that the user or speaker clarify for it how to interpret the user's or speaker's intention. That clarification would then be incorporated into the saved context that was pushed onto a session stack. With the ability to use theory of mind the system might be able to make insightful inferences as to where the user's train of thought is going and prepare several alternative pathways to explore each direction. Further it might be able to infer the boundaries of knowledge that the user is operating with and suggest illuminative ways to fill in the missing elements. In this way a theory of mind for that specific individual would enable it to provide a more supportive interaction environment.
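The push, backtrack and clarify cycle described above can be sketched in a few lines. This is a minimal illustration only: the `Session` class, its fields and the word-lookup notion of "understanding" are all hypothetical stand-ins, and nothing here reflects how CG4 or any real interface is implemented.

```python
from dataclasses import dataclass, field


@dataclass
class Session:
    """Hypothetical dialogue session that backtracks to its last understood turn."""
    known_terms: set                                  # words the system can interpret
    understood: list = field(default_factory=list)    # successfully interpreted turns
    stack: list = field(default_factory=list)         # saved contexts awaiting clarification
    examples: dict = field(default_factory=dict)      # remembered clarifications

    def hear(self, utterance: str) -> str:
        """Interpret an utterance, or save state and ask for clarification."""
        unknown = [w for w in utterance.lower().split()
                   if w not in self.known_terms and w not in self.examples]
        if unknown:
            # Fail-soft: push where we were, then ask instead of guessing.
            self.stack.append((len(self.understood), utterance, unknown[0]))
            return f"What do you mean by '{unknown[0]}'?"
        self.understood.append(utterance)
        return "ok"

    def clarify(self, meaning: str) -> str:
        """Fold the clarification into the saved context and retry the utterance."""
        checkpoint, utterance, term = self.stack.pop()
        del self.understood[checkpoint:]   # backtrack to the last good point
        self.examples[term] = meaning      # kept as a "by way of example" instance
        return self.hear(utterance)
```

For example, a session created with `Session(known_terms={"plot", "the", "data"})` accepts "plot the data", asks about "qubits" when it hears "plot the qubits", and accepts that utterance once `clarify("quantum bits")` has been called.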

Existing CG4 functionality allows for sentiment analysis. This means that it is capable of analyzing a tract of text and deriving a sense of the sentiment that the author expressed in its composition. Looking at a far more sophisticated capability, it is within the realm of reason to expect that a CG4-class system might iteratively develop theories that enable it to characterize various human states of mind. It might further be able to formulate theories that enable it to crystallize real world hypotheses of significant accuracy. Working with this kind of capability it would not seem far fetched to see a CG4-type system teaching itself to interact with a human user in a seemingly highly empathetic and charming manner. The prospect of such a development should be both fascinating and off-putting, since it would enable such a system to be a highly agreeable interlocutor but could also make it highly persuasive and manipulative. Further, when operating at electronic speeds it could all happen in real time.

Looking at an only slightly more sophisticated approach even an existing CG4 class system should be able to create a set of agents and apply various interaction scripts to them to observe their behavior patterns and compare them to the behavior patterns of actual human users.

RISKS

The following collects reactions suggesting promise or risk. They partition into questions of promise and questions of risk. The main risk categories are: systemic, malicious and theoretical.

- Systemic. These risks arise innately from the emergence and adoption of new technology or scientific insights. During the early years of private automobile usage the risk of traffic accidents was very low, because there were very few automobiles in private hands. But as they began to proliferate, traffic accident risks escalated. Ultimately civil authorities were obliged to act to regulate their use and ownership. As some observers have pointed out, regulation usually occurs after there has been an unfortunate or tragic event. The pattern can be seen in civil aviation and later in the control and usage of heavy transportation or construction equipment. In each case training became formalized and licensing became obligatory for airplane ownership and usage, trucking or heavy construction equipment.

- Malicious. History is littered with examples of how a new scientific or technological advance was applied in ways not intended by the inventor. The Montgolfier hot air balloons were considered an entertaining novelty. Their use during World War One as surveillance and attack platforms cast a new and totally different light on their capabilities. We should expect the same lines of development with CG4; its peers and derivatives should be considered no different.

- Theoretical. CG4 has shown itself to be a powerful cognitive appliance or augmentation tool. Given that it is capable of going right to the core of what makes humans the apex predator we should take very seriously the kinds of unintended and unexpected ways that it can be applied. This suggests considerable caution.

Recent Reactions. Since the most recent artificial intelligence systems swept over the public awareness, sentiment has begun to crystallize. Three general types of sentiment have emerged over time: voices of enthusiastic encouragement, cautious action and urgent preemption.

- Enthusiastic Encouragement. Several industry watchers have expressed positive reactions to the availability of CG4 and its siblings. Their position has been that these are powerful tools for good and that they should be viewed as means that illuminate the pathway forward to higher standards of living and human potential.

- Cautious Action. Elon Musk and eleven hundred knowledgeable industry observers or participants signed an open letter urging caution and regulation of the rapidly advancing areas of artificial intelligence. They voiced concern that the potential usage of these new capabilities can have severe consequences. They also expressed concern that the rapid pace of the adoption of artificial intelligence tools can very quickly lead to job losses across a broad cross section of currently employed individuals. This topic is showing itself to be dynamic and fast changing, and therefore merits regular review for the most recent insights and developments. In recent weeks and months other sources have signaled that the use of this new technology will make a substantial beneficial impact on their activities and outcomes, and therefore they wish to see it advance as quickly as possible. In their view, not doing so would place the advances made in the West and the US at risk, which could mean foreclosing on having the most capable system possible to use when dealing with external threats such as those that can be posed by the CCP.

- Urgent Preemption. Those familiar with geopolitics and national security hold the position that any form of pause would be suicide for the US because known competitors and adversaries will race ahead to advance their artificial intelligence capabilities at warp speed, in the process obviating anything that the leading companies in the West might accomplish. They argue that any kind of pause cannot even be contemplated. Some voices go so far as to point out that deep learning systems require large data sets to be trained on. They note that the PRC has a population of 1.37 billion people, and a recent report indicates that in 2023 there were 827 million WeChat users in the PRC. Further, the PRC makes use of data collection systems such as WeChat and TenCent. In each case these are systems that capture the messaging information from hundreds of millions of PRC residents on any given day. They also capture a comparably large amount of financial and related information each and every day. Topping the list of enterprises that gather user data we find:

- WeChat. According to BankMyCell.COM the PRC boasts of 1.671 billion users.

- TenCent. According to recent reporting [1], TenCent has over one billion users.

- Surveillance. Reporting indicates that the PRC has over 600,000,000 surveillance cameras within a country of 1.37 billion people.

The result is that the People's Republic of China (PRC) has an almost bottomless sea of data with which to train its deep learning systems. Furthermore it has no legislation that focuses on privacy. A disadvantage for the PRC's AI efforts is that its data sets are limited to the Chinese people, their languages and their dialects.

The relevant agencies charged with developing these AI capabilities benefit from a totally unrestricted volume of current training data. This positions the government of the PRC to chart a pathway forward in its efforts to develop the most advanced and sophisticated artificial intelligence systems on the planet, and in very short time frames, despite the inherent disadvantage that all of its data is in Mandarin or one of the other major dialects. Viewed from the national security perspective, it is clear that an adversary with the capability to advance the breadth of capability and depth of sophistication of an artificial intelligence tool such as ChatGPT4 or DeepMind will have an overarching advantage over all other powers.

This must be viewed in the context of the Western democracies, which in all cases are bound by public opinion and fundamental legal restrictions or roadblocks. A studied scrutiny of the available reports suggests that there is a very clear awareness of how quantum computing will be used in the area of artificial intelligence.

A fundamental fact, notwithstanding any prior qualifications, is that the CCP has launched an all-of-nation resources project to seize the high ground in any area of high technology that matters. Where enterprises in the West can mount an effort with, say, one hundred participants, the PRC can stand up a comparable project with a thousand participants.

CG4 – Theory of Operation: CG4 is a narrow artificial intelligence system that is a Generative Pre-trained Transformer.

In order to make sense of this one would be well advised to understand several fundamental concepts associated with this technology. Because this is a highly technical subject the following is intended to introduce the core elements. The reader is encouraged to review the literature and body of insight that is currently available as explanatory video content.

By way of clarifying the topics of this work we organize these concepts into two primary groups. The first group offers basic information on the fundamental building blocks of Large Language Models, of which CG4 is a recent example. The second group introduces or otherwise clarifies terms that have come to the forefront of recent public discussions and issues.

Fundamental Building Block Concepts.

- GPT3: a smaller precursor version of the GPT4 system;

- GPT3.5: a higher performance version of GPT3 that still falls short of the full-up GPT4 successor;

- GPT4: a LLM reported, though not officially confirmed by OpenAI, to have over one trillion parameters;

- Chat GPT4: the conversational version of GPT4;

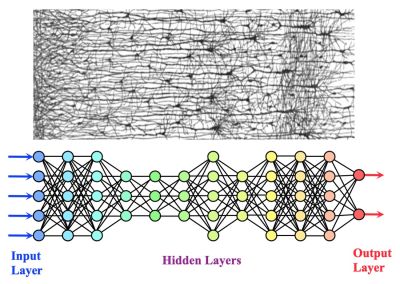

- Artificial Neural Networks. Four excellent episodes providing very useful insight into some underlying theory of how artificial neural networks perform their actions.

- From 3Blue1Brown: Home Page

- Neural_Network_Basics: Some basic principles of how neural network structures are mapped to a problem.

- Gradient Descent: A somewhat technical topic requiring a careful examination on the part of the reader who is unfamiliar with the mathematical background.

- Back Propagation, intuitively, what is going on? It graphically shows the mathematics behind how a neural network attempts to "home in" on a target; what we can see is the mathematics that underlies how training is done iteratively, and how the distance between the neural network's current output and the actual desired response gradually shrinks;

- Back Propagation Theory: How various layers in a deep learning neural network interact with each other. A much closer mathematical description of how prior neuron values contribute to successive neuron values; then how they ultimately contribute to the output neuron that they connect to and whether it will activate or not; this is a highly mathematical description using partial derivative calculus;

- Deep Learning: the technique of using an artificial neural network type structure to solve extremely complex problems; such a network will typically use a large number of what are called "hidden layers"; these networks achieve greater performance when they are trained on very large data sets; these data sets can range from millions to billions of examples;

- Hidden Layer: One or more network layers that stand between the input layer and the final output layer. Each input node is connected to all of the nodes in the first hidden layer. Subsequent hidden layers are added as a means to provide greater discriminatory resolution to the neural network. Each node in a hidden layer can be connected to each node in the following hidden layer. The more hidden layers there are, the more sophisticated the neural network's ability to perform its tasks can be.

In neural networks, a hidden layer is located between the input and output of the algorithm, in which the function applies weights to the inputs and directs them through an activation function as the output. In short, the hidden layers perform nonlinear transformations of the inputs entered into the network. Hidden layers vary depending on the function of the neural network, and similarly, the layers may vary depending on their associated weights. Useful insight into what hidden layers are doing can be viewed using this hidden layers video. For an interactive view of how hidden layers enable a neural network to learn a new function one can watch this video by sentdex; it has no audio but provides excellent insight into how the various layers and nodes within a layer develop an approximation of the function that it is attempting to learn.
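The description above (weights applied to inputs, then an activation function) can be illustrated with a tiny forward pass. This is a sketch under stated assumptions: the layer sizes, the weight values and the choice of tanh as the activation are arbitrary illustrations, not taken from any real model.

```python
import math


def dense(inputs, weights, biases, activation):
    """One fully connected layer: a weighted sum per node, then an activation."""
    return [activation(sum(w * x for w, x in zip(row, inputs)) + b)
            for row, b in zip(weights, biases)]


def forward(x):
    """Input (2 values) -> hidden layer (3 nodes, tanh) -> output (1 node, linear)."""
    hidden_w = [[0.5, -0.2], [0.1, 0.8], [-0.3, 0.4]]  # illustrative weights
    hidden_b = [0.0, 0.1, -0.1]
    output_w = [[1.0, -1.0, 0.5]]
    output_b = [0.2]
    hidden = dense(x, hidden_w, hidden_b, math.tanh)   # the nonlinear transformation
    return dense(hidden, output_w, output_b, lambda z: z)
```

Every input value feeds every hidden node, and every hidden node feeds the output node, matching the full connectivity described for hidden layers. Adding further hidden layers would mean further `dense` calls between the two shown.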

- Parameters: the coefficients of the model, chosen by the model itself. This means that the algorithm, while learning, optimizes these coefficients (according to a given optimization strategy) and returns an array of parameters which minimize the error. To give an example, in a linear regression task, you have a model that looks like y = b + ax, where b and a are the parameters. The only thing you have to do with those parameters is to initialize them.
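The linear regression example above can be run directly. The sketch below initializes the two parameters a and b and lets gradient descent on the mean squared error adjust them; the learning rate, step count and sample data are assumptions chosen so that this small example converges.

```python
def fit_line(xs, ys, learning_rate=0.05, steps=2000):
    """Fit y = b + a*x by gradient descent on the mean squared error."""
    a, b = 0.0, 0.0  # the two parameters; initializing them is our only job
    n = len(xs)
    for _ in range(steps):
        errors = [(b + a * x) - y for x, y in zip(xs, ys)]
        grad_a = 2.0 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2.0 / n * sum(errors)
        a -= learning_rate * grad_a  # step each parameter against its gradient
        b -= learning_rate * grad_b
    return a, b


if __name__ == "__main__":
    xs = [0.0, 1.0, 2.0, 3.0, 4.0]
    ys = [1.0 + 2.0 * x for x in xs]  # data generated from y = 1 + 2x
    print(fit_line(xs, ys))
```

A model such as GPT4 applies the same idea to a vastly larger number of parameters, with the gradients obtained by back propagation rather than written out by hand.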

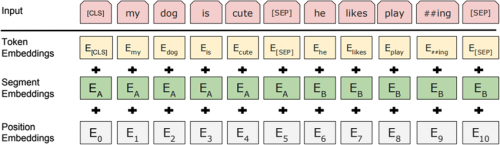

- Tokens: Making text into tokens. These become vector representations. They form the basis of the neuron values for each neuron in a neural network. The narrator provides a short and succinct introduction to the basic concepts of what a token is and how it is represented as a vector.
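A toy version of the text-to-token-to-vector pipeline is sketched below. It assumes whitespace tokenization and a small hand-made embedding table; production systems such as CG4 use subword tokenizers and learned embeddings, so every name and number here is illustrative only.

```python
def tokenize(text):
    """Whitespace tokenizer; real systems use subword schemes instead."""
    return text.lower().split()


def build_vocab(tokens):
    """Assign each distinct token an integer id in order of first appearance."""
    vocab = {}
    for tok in tokens:
        vocab.setdefault(tok, len(vocab))
    return vocab


def embed(token_ids, table):
    """Look up one vector per token id in an embedding table."""
    return [table[i] for i in token_ids]


if __name__ == "__main__":
    tokens = tokenize("The cat saw the dog")
    vocab = build_vocab(tokens)
    ids = [vocab[t] for t in tokens]
    # Hypothetical 2-dimensional embedding table, one row per vocabulary entry.
    table = [[float(i), 0.5 * i] for i in range(len(vocab))]
    print(ids, embed(ids, table))
```

Note that both occurrences of "the" map to the same id and therefore to the same vector; it is the later layers of the network that give a token a context-dependent representation.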

- Generative Artificial Intelligence. Generative artificial intelligence (AI) is artificial intelligence capable of generating text, images, or other media, using generative models. Generative AI models learn the patterns and structure of their input training data and then generate new data that has similar characteristics. In the early 2020s, advances in transformer-based deep neural networks enabled a number of generative AI systems notable for accepting natural language prompts as input. These include large language model chatbots such as ChatGPT, Bing Chat, Bard, and LLaMA, and text-to-image artificial intelligence art systems such as Stable Diffusion, Midjourney, and DALL-E.

A summary of the key elements of generative artificial intelligence is given in this video (11 minutes); a crucial point to keep in mind is that generative AI generates new output based upon a user's request or query; it draws on massive amounts of training data in order to generate its output; note further that the "G" in GPT stands for generative;

Subsequent developments with generative ai systems have been moving forward apace at companies such as IBM. Their efforts are targeting a range of salient topic areas. To name a few their teams are addressing topics such as molecular structure, code, vision, earth science.

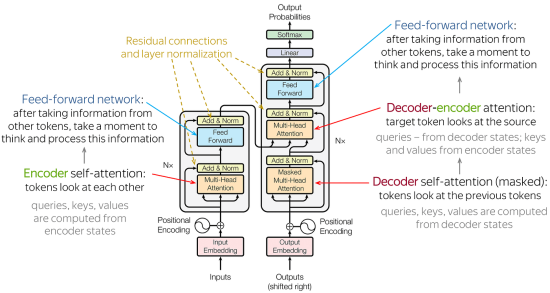

- Encoder-Decoder. According to Analytics Yogi: In the field of AI / machine learning, the encoder-decoder architecture is a widely-used framework for developing neural networks that can perform natural language processing (NLP) tasks, such as language translation, that require sequence to sequence modeling. This architecture involves a two-stage process where the input data is first encoded into a fixed-length numerical representation, which is then decoded to produce an output that matches the desired format. As a data scientist, understanding the encoder-decoder architecture and its underlying neural network principles is crucial for building sophisticated models that can handle complex data sets. By leveraging encoder-decoder neural network architecture, data scientists can design neural networks that can learn from large amounts of data, accurately classify and generate outputs, and perform tasks that require high-level reasoning and decision-making. An excellent if somewhat technical video describes and explains the architecture of what is going on with the encoder and decoder components of the GPT system.

- Bidirectional Encoder Representations from Transformers (BERT). An insightful paper on several key features of what BERT is about and how it functions: official archive paper on BERT.

- BERT - very useful video.

- Large Language Model. A large language model is a prediction system that uses training data to enable a deep neural network to perform recognition tasks. It uses statistical mechanisms to anticipate subsequent input based upon the prior input. Prediction systems use this training data to iteratively condition and train the deep learning system for its specific tasks. The following two videos focus on large language models: part one describes how words are used to predict subsequent words in an input text; part two expands upon the earlier video and offers more technical detail. Together they provide a fairly concise synopsis of how large language models make predictions of how words are associated with each other in a body of text. They explain further how such models require a very large training data set in order to achieve their performance.
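The idea of anticipating the next word from the prior word can be shown with the simplest possible statistical model, a bigram counter. This is a deliberately crude stand-in, not how CG4 works: a real large language model conditions on long contexts with a deep neural network, whereas this sketch only counts which word followed which in a tiny made-up corpus.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for every word, which words follow it in the training text."""
    counts = defaultdict(Counter)
    words = text.lower().split()
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return counts


def predict_next(counts, word):
    """Return the most frequent follower of word, or None if it was never seen."""
    following = counts.get(word.lower())
    if not following:
        return None
    return following.most_common(1)[0][0]


if __name__ == "__main__":
    model = train_bigrams("the cat sat on the mat the cat ate")
    print(predict_next(model, "the"))
```

Even this toy shows why training set size matters: a word that never appeared in the corpus yields no prediction at all, and rare words yield unreliable ones.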

- Transformer. A transformer is a deep learning system. This discussion presents key concepts of how transformers work from a more conceptual point of view. Its architecture relies on what is called a parallel multi-head attention mechanism. The modern transformer was proposed in the 2017 paper titled "Attention is all you need".

There is some overlap with topics described above in the section on encoder-decoder architecture. A significant contribution of the transformer is that it enables creation of a neural network model that requires less training time than previous recurrent neural architectures. It addressed shortcomings of earlier approaches such as long short-term memory (LSTM) networks; these had struggled because contextually significant terms might lie beyond the positional proximity of the terms they give meaning to. Its later variations have been adopted for training large language models on large (language) datasets, such as the Wikipedia corpus and Common Crawl, by virtue of the parallelized processing of the input sequence. Efforts to resolve such difficulties were fundamentally influenced by the Attention Is All You Need paper by Ashish Vaswani et al. of the Google Brain team. A breakdown of its major concepts is presented in: Attention is all you need.
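The attention mechanism at the heart of the transformer can be sketched compactly. The code below implements a single head of scaled dot-product attention, softmax(QK^T / sqrt(d)) V, on plain Python lists. It is a simplification under stated assumptions: a real transformer runs several such heads in parallel and derives the queries, keys and values from learned projection matrices, which are omitted here.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to one."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    d = len(keys[0])
    out = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        # Output is the weighted average of the value vectors.
        out.append([sum(w * v[j] for w, v in zip(weights, values))
                    for j in range(len(values[0]))])
    return out
```

Because every query is compared with every key regardless of position, a term can draw on context from anywhere in the sequence, and the per-query loop has no dependence between iterations, which is what permits the parallel processing mentioned above.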

- Generative Pre-trained Transformer: Generative pre-trained transformers (GPT) are a type of large language model (LLM) and a prominent framework for generative artificial intelligence. The first GPT was introduced in 2018 by OpenAI. GPT models are artificial neural networks that are based on the transformer architecture, pre-trained on large data sets of unlabeled text, and able to generate novel human-like content. As of 2023, most LLMs have these characteristics and are sometimes referred to broadly as GPTs. An overview of the important features can be viewed in this video; the narrator touches on a number of related topics as well.

- Generative Adversarial Networks. A generative adversarial network (GAN) is a class of machine learning framework and a prominent framework for approaching generative AI. The concept was initially developed by Ian Goodfellow and his colleagues in June 2014. In a GAN, two neural networks contest with each other in the form of a zero-sum game, where one agent's gain is another agent's loss.

Given a training set, this technique learns to generate new data with the same statistics as the training set. For example, a GAN trained on photographs can generate new photographs that look at least superficially authentic to human observers, having many realistic characteristics. Though originally proposed as a form of generative model for unsupervised learning, GANs have also proved useful for semi-supervised learning, fully supervised learning, and reinforcement learning. This video provides a short synopsis of the GANS capability.

- Recurrent Networks. A recurrent neural network is a kind of deep neural network that applies the same set of weights at each step of an input sequence, carrying a hidden state forward from one step to the next, so that it can produce predictions over variable-length inputs. Fully recurrent neural networks (FRNN) connect the outputs of all neurons to the inputs of all neurons. This is the most general neural network topology because all other topologies can be represented by setting some connection weights to zero to simulate the lack of connections between those neurons. Illustrations of such networks may be misleading to many because practical neural network topologies are frequently organized in "layers" and the drawing gives that appearance. However, what appear to be layers are, in fact, different steps in time of the same fully recurrent neural network. For an intuitive overview of recurrent neural networks have a look here.
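The point that the apparent "layers" of a recurrent network are really time steps sharing one set of weights can be seen in a minimal sketch. It assumes a single scalar hidden unit with a tanh activation, in the style of a simple Elman network; the weight values passed in are arbitrary illustrations.

```python
import math


def rnn_forward(inputs, w_in, w_rec, bias):
    """Scalar recurrence: h_t = tanh(w_in * x_t + w_rec * h_prev + bias)."""
    h = 0.0  # initial hidden state
    states = []
    for x in inputs:
        # The same three weights are reused at every time step.
        h = math.tanh(w_in * x + w_rec * h + bias)
        states.append(h)
    return states
```

Calling `rnn_forward` on sequences of different lengths requires no change to the weights, and each state depends on all earlier inputs through the carried hidden value.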

- Recursive Networks. A recursive neural network is a kind of deep neural network created by applying the same set of weights recursively over a structured input, to produce a structured prediction over variable-size input structures, or a scalar prediction on it, by traversing a given structure in topological order. Recursive neural networks, sometimes abbreviated as RvNNs, have been successful, for instance, in learning sequence and tree structures in natural language processing, mainly phrase and sentence continuous representations based on word embedding. RvNNs were first introduced to learn distributed representations of structure, such as logical terms. Models and general frameworks have been developed in further works since the 1990s. Recursive neural networks have been used to address the task of transitioning from words to phrases in natural language understanding. A short but useful video presents several core elements of how the problem was solved.
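The step from words to phrases can be sketched as a bottom-up walk over a parse tree in which one shared set of weights combines two children into a parent. The code below is a simplified illustration: it uses two scalar weights applied element-wise where a real recursive network would use learned weight matrices, and the tree and embeddings are invented for the example.

```python
import math


def compose(left, right, w_left, w_right):
    """Combine two child vectors into a parent vector using shared weights."""
    return [math.tanh(w_left * l + w_right * r) for l, r in zip(left, right)]


def encode(tree, embeddings, w_left=0.6, w_right=0.4):
    """Encode a nested-tuple binary tree bottom-up; leaves are word strings."""
    if isinstance(tree, str):
        return embeddings[tree]
    left, right = tree
    return compose(encode(left, embeddings, w_left, w_right),
                   encode(right, embeddings, w_left, w_right),
                   w_left, w_right)


if __name__ == "__main__":
    words = {"very": [1.0, 0.0], "good": [0.0, 1.0], "film": [1.0, 1.0]}
    print(encode((("very", "good"), "film"), words))
```

The phrase vector for ("very", "good") is computed first and then combined with "film", so the traversal follows the structure of the tree rather than the left-to-right order of a sequence.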

- Fine Tuning CG4. Use instructions from the OpenAI Documentation page to fine tune CG4. Here is some insight on how to fine tune a Chat GPT3.5 system. For a somewhat less technical overview on when to use fine tuning and some basic instructions on how to perform it, have a look at this video. For observers with an interest in more practical uses, this video explains how to perform fine tuning to capture business knowledge.

- Supervised or Unsupervised Learning. Supervised learning (SL) is a paradigm in machine learning where input objects (for example, a vector of predictor variables) and a desired output value (also known as human-labeled supervisory signal) train a model. The training data is processed, building a function that maps new data on expected output values. An optimal scenario will allow for the algorithm to correctly determine output values for unseen instances. This requires the learning algorithm to generalize from the training data to unseen situations in a "reasonable" way (see inductive bias). This statistical quality of an algorithm is measured through the so-called generalization error. Unsupervised learning is a paradigm in machine learning where, in contrast to supervised learning and semi-supervised learning, algorithms learn patterns exclusively from unlabeled data. This short video presents a summarized explanation of the difference.

- Pretrained. A pretrained AI model is a deep learning model (an expression of a brain-like neural algorithm that finds patterns or makes predictions based on data) that’s trained on large datasets to accomplish a specific task. It can be used as is or further fine-tuned to fit an application’s specific needs. Why are pretrained AI models used? Instead of building an AI model from scratch, developers can use pretrained models and customize them to meet their requirements. To build an AI application, developers first need an AI model that can accomplish a particular task, whether that’s identifying a mythical horse, detecting a safety hazard for an autonomous vehicle or diagnosing a cancer based on medical imaging. That model needs a lot of representative data to learn from. This learning process entails going through several layers of incoming data and emphasizing goal-relevant characteristics at each layer. To create a model that can recognize a unicorn, for example, one might first feed it images of unicorns, horses, cats, tigers and other animals. This is the incoming data.

Then, layers of representative data traits are constructed, beginning with the simple — like lines and colors — and advancing to complex structural features. These characteristics are assigned varying degrees of relevance by calculating probabilities. As opposed to a cat or tiger, for example, the more like a horse a creature appears, the greater the likelihood that it is a unicorn. Such probabilistic values are stored at each neural network layer in the AI model, and as layers are added, its understanding of the representation improves. To create such a model from scratch, developers require enormous datasets, often with billions of rows of data. These can be pricey and challenging to obtain, but compromising on data can lead to poor performance of the model.
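A toy sketch of how a pretrained model can be reused: the feature layer below is frozen, standing in for weights learned earlier on a large dataset, and only a small decision "head" is trained on a handful of labeled examples. The feature weights, the four raw input measurements and the animal data are all invented for illustration, and the head is a simple perceptron rather than anything a production system would use.

```python
# Raw input: [legs, has_mane, has_horn, has_stripes] (hypothetical measurements).
# Frozen "pretrained" layer: weights assumed to have been learned elsewhere.
PRETRAINED_W = (
    (0.25, 1.0, 0.0, -1.0),  # feature 0: horse-likeness
    (0.0, 0.0, 1.0, 0.0),    # feature 1: horn present
)


def extract_features(raw):
    """Apply the frozen layer followed by a ReLU; never modified during training."""
    return [max(0.0, sum(w * x for w, x in zip(row, raw))) for row in PRETRAINED_W]


def score(head, raw):
    """Linear score of the trainable head on top of the frozen features."""
    weights, bias = head
    return sum(w * f for w, f in zip(weights, extract_features(raw))) + bias


def predict(head, raw):
    return 1 if score(head, raw) > 0 else 0


def train_head(examples, epochs=20, lr=0.1):
    """Perceptron training: only the head's weights and bias change."""
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for raw, label in examples:
            err = label - predict((weights, bias), raw)
            feats = extract_features(raw)
            weights = [w + lr * err * f for w, f in zip(weights, feats)]
            bias += lr * err
    return weights, bias


EXAMPLES = [
    ([4, 1, 1, 0], 1),  # unicorn
    ([4, 1, 0, 0], 0),  # horse
    ([4, 0, 0, 0], 0),  # cat
    ([4, 0, 0, 1], 0),  # tiger
]

head = train_head(EXAMPLES)
print("zebra is unicorn?", predict(head, [4, 1, 0, 1]))
```

The design point is that the expensive part (the feature layer) is reused as is, so the customization step needs only a few examples instead of billions of rows of data.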

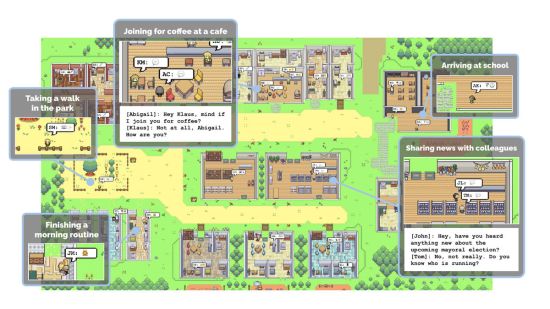

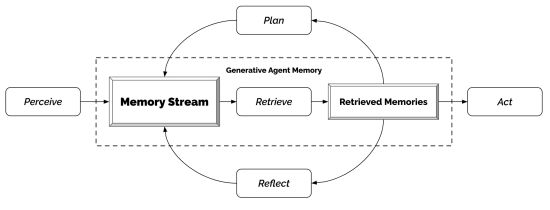

- Autonomous Agent: an instance of CG4 that is capable of formulating goals and then structuring subtasks that enable the achievement of those goals. A recent development has come to light wherein researchers at Stanford University and Google were able to demonstrate autonomous and asynchronous problem solving by having CG4 call instances of itself or of CG3. The result was a collective of asynchronous problem solvers. The researchers gave these problem solvers a mechanism to interact and communicate with each other. The outcome was a very small scale simulation of a village. The "village" consisted of 25 agents. Each agent was assigned private memory as well as goals.

Of note is that the agent architecture is a bare bones, minimal set of behavior controllers. A much more complex and sophisticated set can be envisioned wherein each agent is developed to the point that it becomes far more lifelike. This can mean that agents might have not only goals but also such characteristics as beliefs, which allow for correct or incorrect understanding, and a theory of mind of other agents or users.

It would be a fairly small step to postulate a substantially larger collection of agents. This larger collection of agents might be put to use solving problems involving actual people in real world situations. For instance, one can imagine creating a population consisting of hundreds or thousands of agents. These agents might be instantiated to possess positions or values regarding a range of topics. They can further be configured to associate themselves with elements or factors in the world that they operate in.

For instance, a subset of agents might be instantiated to attach value to specific factors in the sim-world. A more concrete example might be that they attach considerable value to having the equivalent of "traffic management", i.e. the analog of "traffic lights" in their world, as opposed to the equivalent of "stop signs". Other agents might hold nearly opposite values. This sets up the possibility that conflict can arise in a larger collective. With that conflict there might develop agents that lean toward mediation and compromise. Others might be more adamant and less cooperative. The upshot is that very complex models of human behavior can be built by adding more traits beyond those of goals and memory.

Recently there appears to have been a shift in the landscape of artificial intelligence agents. What now appears to be coming into focus is the ability to construct specifically targeted tools that make use of multiple autonomous agents to cooperatively solve problems. Knowledgeable observers have been taking note of this trend and providing insight into what it means and how it might affect the further development of the field.
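The traffic lights versus stop signs scenario can be sketched as a toy simulation. It is not the architecture used by the researchers mentioned above, and no language model is called: each agent here is a plain object with a goal, a private memory, a numeric preference and a hypothetical `flexibility` trait that separates mediators from adamant agents. All names and numbers are assumptions for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Agent:
    name: str
    goal: str
    preference: float   # +1.0 = traffic lights, -1.0 = stop signs
    flexibility: float  # 0.0 = adamant, 1.0 = fully open to compromise
    memory: list = field(default_factory=list)  # private to this agent

    def speak(self):
        side = "traffic lights" if self.preference > 0 else "stop signs"
        return f"{self.name} wants {self.goal} and favours {side}"


def run_round(agents):
    """Each agent hears every other agent once; all update from the same snapshot."""
    snapshot = [(a, a.preference, a.speak()) for a in agents]
    for agent in agents:
        heard = [(p, text) for other, p, text in snapshot if other is not agent]
        agent.memory.extend(text for _, text in heard)
        mean_other = sum(p for p, _ in heard) / len(heard)
        # Flexible agents move part of the way toward the views they heard.
        agent.preference += 0.5 * agent.flexibility * (mean_other - agent.preference)


village = [
    Agent("Ada", "safer crossings", +1.0, 0.0),  # adamant
    Agent("Ben", "shorter waits", -1.0, 0.0),    # adamant
    Agent("Cy", "safer crossings", +0.8, 1.0),   # mediator
    Agent("Dee", "shorter waits", -0.6, 1.0),    # mediator
]

for _ in range(3):
    run_round(village)

for agent in village:
    print(agent.name, round(agent.preference, 2), len(agent.memory))
```

Even with only two traits beyond goals and memory, the population splits into agents that hold their position and agents that drift toward a compromise, which hints at how richer trait sets could model more complex behavior.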

- Recent Topics or Issues

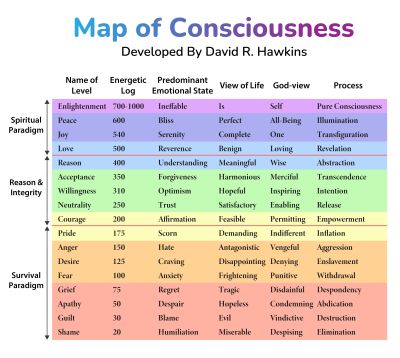

- Consciousness: Recent consciousness research has thrown more light on the subject. A number of models have been developed in recent years that attempt to address the so-called "hard problem". This problem centers on the question of "what it is like to be a bat or a dolphin or a wolf", and on identifying the neural correlates that mediate the experience of being. To approach the question of whether artificial consciousness is possible, the authors suggest adopting computational functionalism and drawing on empirical neuroscientific evidence. They further suggest that a theory-heavy approach be used to assess the viability of the various models that have been proposed to date. They then list the current candidates. These include:

- recurrent processing theory

- global workspace theory

- computational higher order theories

- attention schema theory

- predictive processing

- agency and embodiment

In each case they set out what are described as indicator properties. An indicator property is a trait or feature that must be present for consciousness to be operative.

- Awareness: This is a characteristic trait of an organism that is capable of sensing the world around itself. It likewise will possess the capability to recognize, interpret and respond to various internal states. A feature of awareness is the ability to record, synopsize, tag and store episodic memories. With awareness there may or may not be self consciousness. However the organism will be able to respond to changes in its environment.

- Sentience: Derived from the concept of sense. It refers to possessing sufficient sensory apparatus to register states and developments in the real world such as temperature, light, chemical odors and acoustic patterns.

- World Model: The ability to create an abstract representation of an external reality. This model postulates that the sentient agent can further create a self-model that is an actor in this external world model and can interact or otherwise affect state in the world model. But also very importantly that events in the world model can give rise to events that can affect the agent. These events can be either adaptively positive or entail adverse risk.

- Emergence: experimenters and developers report observing a working system exhibit properties that had not heretofore been programmed in; they "emerge" from the innate capabilities of the system;

- Alignment: the imperative of imposing "guard rails" or other limitations on what an artificial intelligence system can be allowed to do;

- Hallucinations. CG4 has produced results to queries wherein it created references that superficially look legitimate but upon closer inspection prove to be nonexistent.

- Theory of Mind: In order for a species to build a society, successful socialization processes between its members are fundamental. Development of a society requires that family members develop means of communicating needs and wants with each other. When this step is successful then collections of families can aggregate into clans. The basic requirement is that each member develop a means of formulating or otherwise formalizing representations of their own mental and physical state. The key step forward is to be able to attribute comparable representations to others. When this step is successful then a theory of mind can crystallize. Intentions, wants and needs can then be represented and used to develop plans. The more sophisticated the representation of self-state, the more refined the clan's adaptive success will be.

Overview and Summary so far. If we step back for a moment and summarize what some observers have had to say about this new capability, we might tentatively start by noting that CG4:

- is based upon and is a refinement of its predecessor, the Chat GPT 3.5 system;

- has been developed using the generative pre-trained transformer (GPT) model;

- has been trained on a very large data set including textual material that can be found on the internet; unconfirmed rumors suggest that the model has on the order of 1 trillion parameters;

- is capable of sustaining conversational interaction using text based input provided by a user;

- can provide contextually relevant and consistent responses;

- can link topics in a chronologically consistent manner and refer back to them in current prompt requests;

- is a Large Language Model that uses prediction as the basis of its actions;

- uses deep learning neural networks and very large training data sets;

- uses a SaaS model, like Google Search, YouTube or Morningstar Financial;
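To illustrate what "prediction as the basis of its actions" means, here is a deliberately tiny sketch of next-word prediction. It counts which word follows which in a made-up corpus and always picks the most frequent follower. CG4 itself uses a transformer neural network over tokens, not word-pair counts, so this shows only the principle of generating text by repeatedly predicting what comes next.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for every word, which words follow it and how often."""
    counts = defaultdict(Counter)
    words = text.lower().split()
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts


def predict_next(counts, word):
    """Return the most frequent follower of word, or None if it was never seen."""
    followers = counts.get(word)
    if not followers:
        return None
    return followers.most_common(1)[0][0]


def generate(counts, start, length):
    """Generate text by feeding each prediction back in as the next input."""
    out = [start]
    while len(out) < length:
        nxt = predict_next(counts, out[-1])
        if nxt is None:
            break
        out.append(nxt)
    return " ".join(out)


# Made-up training text for illustration.
CORPUS = "the cat sat on the mat the cat sat on the rug"
model = train_bigrams(CORPUS)
print(generate(model, "the", 5))
```

The loop in `generate` mirrors, in miniature, how a conversational response is produced one piece at a time, each step conditioned on what has already been written.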

Interim Observations and Conclusions.

- this technology will continue to introduce novel, unpredictable and disruptive risks;

- a range of dazzling possibilities will emerge that will be beneficial to broad swathes of society;

- some voices call for urgent action to preclude catastrophic outcomes;

- informed geopolitical observers urge accelerated action to further refine and advance the technology lest our rivals and adversaries eclipse us with their accomplishments;

- heretofore unforeseen societal realignments seem to be inevitable;

- recent advances in the physical embodiment of these tools represent a phase shift moment in history, a before-after transition;

At this point we note that we have:

- reviewed CG4’s capabilities;

- taken note of insights offered by informed observers;

- presented a thumbnail sketch of how CG4 operates;

- examined the primary risk dimensions and offered a few examples;

- suggested some intermediate notes and conclusions;

By way of summarization some observers say that CG4:

is:

- a narrow artificial intelligence;

- an extension of Chat GPT 3.5 capabilities;

- a sophisticated cognitive appliance or prosthetic;

- based upon the Generative Pre-trained Transformer (GPT) model; performs predictive modeling;

- a SaaS offering accessible over the world wide web 24/7;

can:

- converse:

- explain its responses

- self critique and improve own responses;

- responses are relevant, consistent and topically associated;

- summarize convoluted documents or stories and explain difficult abstract questions

- calibrate its response style to resemble known news presenters or narrators;

- understand humor

- give convincingly accurate responses to queries, which suggests the need for a New Turing Test;

- reason:

- about spatial relationships, performing mathematical reasoning;

- write music and poems

- reason about real world phenomena from source imagery;

- grasp the intent of programming code; debug, write and improve code and produce explanatory documentation;

- understand and reason about abstract questions

- translate English text to other languages and respond in one hundred languages

- score at the 90% level on the SAT, bar and medical exams

has:

- demonstrated competencies that will disruptively encroach upon current human professional competencies;

- a knowledge base whose training data sets had a 2021 cutoff date;

- a very large training data set (books, internet content); the model is purported to have in excess of 1 trillion parameters;

- no theory of mind capability (at present) - future versions might offer it;

- no consciousness, sentience, intentionality, motivation or self reflectivity;

- short term memory that was limited in earlier versions; the current subscription token limit is 32k (Aug 2023);

- shown novel emergent behavior; observers are concerned that it might acquire a facility for deception;

- shown ability to extemporize response elements that do not actually exist (hallucinates);

- shown indications that a derivative (CG5 or later) might exhibit artificial general intelligence (AGI);

Intermediate Summary

- Trajectory. Advances in current artificial intelligence systems have been happening at almost break-neck speed. New capabilities have been emerging which had been thought to not be possible for several more years. The most recent developments in the guise of autonomous agents as of September 2023 strongly suggest that a new iteration of capabilities will shortly emerge that will cause the whole playing field to restructure itself all over again. Major driving factors that will propel events forward include:

- Autonomous agent based ensembles. This area is developing very quickly and should be monitored closely. The impact of this development will usher in a qualitative change in terms of what systems based upon large language models are capable of doing.

- Quantum Computing. The transition to quantum computing will eclipse everything known in terms of how computing based solutions are used and the kinds of problems that will migrate into the zone of solubility and tractability. Problems that are currently beyond the scope of von Neumann based computational tools will shortly become accessible. The set of possible new capabilities and insights will be profound and cannot be guessed at as of this writing in September 2023. Passing the boundary layer between von Neumann based architectures and combined von Neumann and quantum modalities will come to be viewed as a before-after event in history. The difference in capabilities will rival the taming of fire, the invention of writing and the acquisition of agriculture. The risks will likewise be great. The means by which rivals will be able to disrupt each other's societies and economies cannot be guessed at. In short, we should expect a period of turbulence unlike anything seen so far in recorded history.