Difference between revisions of "User:Darwin2049/ChatGPT4 Operations"

Darwin2049 (talk | contribs) |


<!-- 20231009 -->

'''''Theory of Operations'''''

<BR />

<BR />

<BR />

<BR />

<!-- 20230907: BITE THE BULLET AND JUST BULLDOZE ON WITH IT; deal with the real stumble stone which is how this thing -->

<!-- really works... what it is and what it does; use judgement calls to assess enough, not too little, not too much -->

<!-- technical jargon to explain what CG4 is and how it works... especially given that one is almost obliged to have a -->

<!-- PHD in computer science, and a specialty in deep learning, neural networks to follow the salient topics and associated -->

<!-- discussion; therefore... moving right along... let us go down to the river and work... -->

<!-- pull out all of the theory of operations and resolve a coherent structure on how to explain each of the core features -->

<!-- that make this thing what it is and say how it does it all... -->

<!-- given that that first pass on trying to explain it was almost but not quite there... -->

'''''<Span Style="COLOR:BLUE; BACKGROUND:SILVER">CG4 – Theory of Operation: </SPAN>'''''CG4 is a narrow artificial intelligence system based on a Generative Pre-trained Transformer (GPT). <BR />

Making sense of CG4 requires an understanding of several fundamental concepts associated with this technology. Because the subject is highly technical, the following is intended only to introduce the core elements. The reader is encouraged to review the literature and the growing body of explanatory video content that is currently available.<BR />

<!-- 20230907: o.k... maintain momentum... moved terms down together and can use them as a basis for describing what and how -->

<!-- but need to keep limited, focused to avoid opening huge "closet" of dreadfully gory technical details; challenge: just enough! -->

<!-- now... move already present links into new term-concepts structure below... this should get things back on track to -->

<!-- move it all over to version05 - put focus on theoretical issues topics; -->

<!-- -->

To clarify the topics of this work, we organize these concepts into two primary groups. The first group offers basic information on the fundamental building blocks of Large Language Models (LLMs), of which CG4 is a recent example. The second group introduces or clarifies terms that have come to the forefront of recent public discussion.<BR />

'''''<Span Style="COLOR:BLUE; BACKGROUND:SILVER">Fundamental Building Block Concepts.</SPAN>'''''

*'''''<SPAN STYLE="COLOR:BLUE">GPT3:</SPAN>''''' a smaller precursor of the GPT4 system;

*'''''<SPAN STYLE="COLOR:BLUE">GPT3.5: </SPAN>'''''a higher-performance version of GPT3 that still falls short of its GPT4 successor;

*'''''<SPAN STYLE="COLOR:BLUE">GPT4: </SPAN>'''''an LLM whose parameter count OpenAI has not disclosed; it is widely reported to exceed one trillion parameters;

*'''''<SPAN STYLE="COLOR:BLUE">Chat GPT4: </SPAN>'''''the conversational version of GPT4;

*'''''<SPAN STYLE="COLOR:BLUE">Artificial Neural Networks. </SPAN>'''''Four excellent episodes from 3Blue1Brown provide very useful insight into the theory of how artificial neural networks carry out their computations.

**'''''<SPAN STYLE="COLOR:BLUE">From 3Blue1Brown: [https://www.youtube.com/@3blue1brown Home Page]</SPAN>'''''<BR />

**'''''<SPAN STYLE="COLOR:BLUE">[https://www.youtube.com/watch?v=aircAruvnKk&ab_channel=3Blue1Brown Neural_Network_Basics]</SPAN>''''' Some basic principles of how neural network structures are mapped to a problem.<BR />

**'''''<SPAN STYLE="COLOR:BLUE">[https://www.youtube.com/watch?v=IHZwWFHWa-w&ab_channel=3Blue1Brown Gradient Descent]</SPAN>''''' How a network repeatedly adjusts its parameters in the direction that most reduces its error. A somewhat technical topic that calls for careful study by readers unfamiliar with the mathematical background.<BR />

**'''''<SPAN STYLE="COLOR:BLUE">[https://www.youtube.com/watch?v=Ilg3gGewQ5U&ab_channel=3Blue1Brown Back Propagation],</SPAN>''''' intuitively, what is going on?<BR /> The video graphically shows the mathematics behind how a neural network attempts to "home in" on a target: training is done iteratively, and with each iteration the difference between the network's output and the desired response shrinks until the two gradually converge;

**'''''<SPAN STYLE="COLOR:BLUE">[https://www.youtube.com/watch?v=tIeHLnjs5U8&ab_channel=3Blue1Brown Back Propagation Theory]</SPAN>''''' How the various layers in a deep learning neural network interact with each other.<BR />A much closer mathematical description of how the values of neurons in earlier layers contribute to the values of neurons in later layers, and ultimately to the output neuron they connect to and whether it will activate; this is a highly mathematical treatment built on partial derivatives and the chain rule;
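
The backpropagation and gradient descent ideas in these videos can be sketched in a few lines of Python. This is a minimal illustration, assuming a hypothetical network with one input, one tanh hidden unit and one linear output, with hypothetical starting weights: it computes the gradients with the chain rule, checks them against a finite-difference estimate, and then takes gradient descent steps with an assumed learning rate of 0.1.

```python
import math

# Hypothetical network: one input x -> one tanh hidden unit -> one linear output.
def forward(w1, b1, w2, b2, x):
    z = w1 * x + b1          # hidden pre-activation
    h = math.tanh(z)         # hidden activation
    y = w2 * h + b2          # network output
    return z, h, y

def loss(params, x, target):
    _, _, y = forward(*params, x)
    return 0.5 * (y - target) ** 2          # squared error

def backprop(params, x, target):
    """Gradients of the loss with respect to (w1, b1, w2, b2) via the chain rule."""
    w1, b1, w2, b2 = params
    _, h, y = forward(w1, b1, w2, b2, x)
    dy = y - target                         # dL/dy
    dw2, db2 = dy * h, dy                   # output-layer gradients
    dh = dy * w2                            # error sent back to the hidden unit
    dz = dh * (1.0 - h ** 2)                # tanh'(z) = 1 - tanh(z)^2
    dw1, db1 = dz * x, dz                   # hidden-layer gradients
    return [dw1, db1, dw2, db2]

def numerical_grad(params, x, target, eps=1e-6):
    """Finite-difference check: nudge each parameter up and down."""
    grads = []
    for i in range(len(params)):
        up, down = list(params), list(params)
        up[i] += eps
        down[i] -= eps
        grads.append((loss(up, x, target) - loss(down, x, target)) / (2 * eps))
    return grads

params = [0.5, -0.1, 1.5, 0.2]              # hypothetical starting values
x, target = 0.8, 1.0
analytic = backprop(params, x, target)
numeric = numerical_grad(params, x, target)

# Gradient descent: repeatedly step each parameter against its gradient.
start_loss = loss(params, x, target)
for _ in range(50):
    params = [p - 0.1 * g for p, g in zip(params, backprop(params, x, target))]
end_loss = loss(params, x, target)
print(start_loss, end_loss)
```

The finite-difference check is a common way to confirm that hand-derived backpropagation formulas are correct before relying on them.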

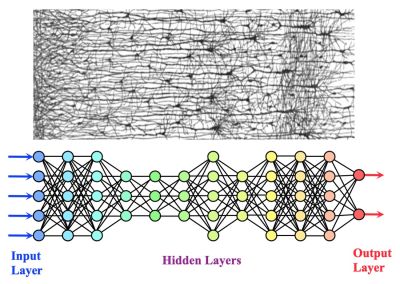

*'''''<SPAN STYLE="COLOR:BLUE">Deep Learning:</SPAN>''''' the technique of using artificial neural networks with many layers to solve extremely complex problems; [https://www.youtube.com/watch?v=aircAruvnKk&ab_channel=3Blue1Brown a neural network] used for deep learning typically has a large number of what are called "hidden layers"; such networks achieve greater performance when they are trained on very large data sets, which can range from millions to billions of examples;

[[File:Hiddenlayer00.jpeg|400px|left|Hidden Layers]]

*'''''<SPAN STYLE="COLOR:BLUE">Hidden Layer:</SPAN>''''' One or more network layers that stand between the input layer and the final output layer. In a fully connected network, each node of the input layer is connected to all of the nodes in the first hidden layer, and each node in a hidden layer can be connected to each node in the following hidden layer. Subsequent hidden layers are added to give the neural network greater discriminatory resolution: in general, the more hidden layers a network has, the more sophisticated the functions it can learn to perform.<BR />In neural networks, [https://deepai.org/machine-learning-glossary-and-terms/hidden-layer-machine-learning#:~:text=In%20neural%20networks%2C%20a%20hidden,inputs%20entered%20into%20the%20network. a hidden layer] is located between the input and output of the algorithm; it applies weights to its inputs and passes the result through an activation function to produce its output. In short, the hidden layers perform nonlinear transformations of the inputs entered into the network. Hidden layers vary depending on the function of the neural network, and similarly, the layers may vary depending on their associated weights. Useful insight into what hidden layers are doing can be gained from [https://www.youtube.com/watch?v=0QczhVg5HaI&ab_channel=EmergentGarden this hidden layers video]. For an interactive view of '''''<SPAN STYLE="COLOR:BLUE">how hidden layers enable a neural network to learn</SPAN>''''' a new function, watch [https://www.youtube.com/watch?v=joA6fEAbAQc&ab_channel=sentdex this video by sentdex]; it has no audio but provides excellent insight into how the layers, and the nodes within each layer, develop an approximation of the function the network is attempting to learn.<BR />
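
A hidden layer is what lets a network represent functions that no single linear layer can. The sketch below uses a hypothetical hand-weighted network rather than a trained one: it computes XOR (output 1 when exactly one input is 1), which is not linearly separable, by passing two inputs through a hidden layer of two ReLU nodes. The layer sizes, weights and helper names are illustrative assumptions.

```python
def relu(v):
    return max(0.0, v)

def dense(inputs, weights, biases, activation=None):
    """Fully connected layer: every input feeds every node in the layer."""
    outputs = []
    for row, bias in zip(weights, biases):
        total = sum(w * x for w, x in zip(row, inputs)) + bias
        outputs.append(activation(total) if activation else total)
    return outputs

# Hypothetical hand-picked weights: 2 inputs -> 2 hidden ReLU nodes -> 1 output.
HIDDEN_W = [[1.0, 1.0], [1.0, 1.0]]
HIDDEN_B = [0.0, -1.0]
OUTPUT_W = [[1.0, -2.0]]
OUTPUT_B = [0.0]

def xor_net(x1, x2):
    hidden = dense([x1, x2], HIDDEN_W, HIDDEN_B, activation=relu)   # nonlinear step
    return dense(hidden, OUTPUT_W, OUTPUT_B)[0]

for x1 in (0, 1):
    for x2 in (0, 1):
        print(x1, x2, xor_net(x1, x2))
```

Removing the ReLU from the hidden layer collapses the two layers into one linear function, which can no longer produce this pattern; the nonlinearity is what the hidden layer contributes.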

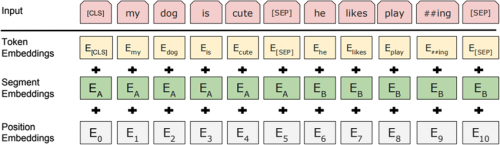

[[FILE:Tokens011.png|500px|right|tokens are vectors of words or word fragment data]]

*'''''<SPAN STYLE="COLOR:BLUE">Parameters: </SPAN>'''''these [https://towardsdatascience.com/neural-networks-parameters-hyperparameters-and-optimization-strategies-3f0842fac0a5#:~:text=Parameters%3A%20these%20are%20the%20coefficients,parameters%20which%20minimize%20the%20error. are the coefficients of the model], and they are learned by the model itself: while learning, the algorithm optimizes these coefficients (according to a given optimization strategy) and returns the set of parameters that minimizes the error. For example, in a linear regression task the model looks like y = b + ax, where a and b are the parameters. The only thing you have to do with those parameters is initialize them; training determines their final values.
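
The linear regression example can be made concrete. The following sketch assumes noise-free toy data generated from y = 1 + 2x and a plain gradient descent optimizer with an illustrative learning rate; it initializes a and b to zero and lets training find their values.

```python
# Hypothetical, noise-free training data generated from y = 1 + 2x.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0 + 2.0 * x for x in xs]

def fit_line(xs, ys, lr=0.02, steps=5000):
    """Learn the parameters a and b of y = b + a*x by gradient descent on mean squared error."""
    a, b = 0.0, 0.0                   # we only choose the initial values
    n = len(xs)
    for _ in range(steps):
        errors = [(b + a * x) - y for x, y in zip(xs, ys)]
        grad_a = 2.0 / n * sum(e * x for e, x in zip(errors, xs))   # dMSE/da
        grad_b = 2.0 / n * sum(errors)                              # dMSE/db
        a -= lr * grad_a
        b -= lr * grad_b
    return a, b

a, b = fit_line(xs, ys)
print(f"a = {a:.3f}, b = {b:.3f}")   # a = 2.000, b = 1.000
```

Only the initial values and the optimization settings (learning rate, number of steps) were chosen by hand; the final values of a and b come from the data.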

*'''''<SPAN STYLE="COLOR:BLUE">Tokens: </SPAN>'''''[https://www.youtube.com/watch?v=wVN12smEvqg&ab_channel=HuggingFace Making text into tokens]. Text is split into tokens (words or word fragments), and each token is converted into a vector representation. These vectors are the numerical input values that the neurons of the network operate on. The narrator provides a short and succinct introduction to what a token is and how it is represented as a vector.
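
Tokenization can be sketched with a hypothetical toy vocabulary and a greedy longest-match rule. Production GPT tokenizers use byte-pair encoding with far larger vocabularies, and their embedding vectors are learned during training rather than drawn at random as here; the vocabulary, vector size and function names below are illustrative assumptions.

```python
import random

# Hypothetical toy vocabulary. Real GPT tokenizers use byte-pair encoding
# with vocabularies of tens of thousands of entries.
VOCAB = ["token", "iz", "ation", "s", "un", "believ", "able", " ", "[UNK]"]
TOKEN_TO_ID = {tok: i for i, tok in enumerate(VOCAB)}

def tokenize(text):
    """Split text into vocabulary pieces, always taking the longest match."""
    pieces, i = [], 0
    while i < len(text):
        for j in range(len(text), i, -1):        # try the longest candidate first
            if text[i:j] in TOKEN_TO_ID:
                pieces.append(text[i:j])
                i = j
                break
        else:                                    # nothing matched: unknown character
            pieces.append("[UNK]")
            i += 1
    return pieces

# One vector per token id. Random here; learned during training in a real model.
DIM = 4
rng = random.Random(0)
EMBEDDINGS = [[rng.uniform(-1.0, 1.0) for _ in range(DIM)] for _ in VOCAB]

tokens = tokenize("tokenization unbelievable")
ids = [TOKEN_TO_ID[t] for t in tokens]
vectors = [EMBEDDINGS[i] for i in ids]
print(tokens)   # ['token', 'iz', 'ation', ' ', 'un', 'believ', 'able']
print(ids)      # [0, 1, 2, 7, 4, 5, 6]
```

The list of vectors, one per token, is what the first layer of the network actually receives.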

*'''''<SPAN STYLE="COLOR:BLUE">Generative Artificial Intelligence</SPAN>''''' [https://www.wikiwand.com/en/Generative_artificial_intelligence Generative artificial intelligence (AI)] is artificial intelligence capable of generating text, images, or other media using generative models. Generative AI models learn the patterns and structure of their input training data and then generate new data that has similar characteristics. One example is [https://www.wikiwand.com/en/Th%C3%A9%C3%A2tre_d'Op%C3%A9ra_Spatial Théâtre d'Opéra Spatial], an image in the style of a detailed oil painting of figures in a futuristic opera scene, generated by Midjourney. In the early 2020s, advances in transformer-based deep neural networks enabled a number of generative AI systems notable for accepting natural language prompts as input. These include large language model chatbots such as ChatGPT, Bing Chat, Bard, and LLaMA, and text-to-image artificial intelligence art systems such as Stable Diffusion, Midjourney, and DALL-E.<BR />

[[File:Dream00.jpg|450px|left|Dream from MidJourney]]

A summary of the key elements of generative artificial intelligence is given in [https://www.youtube.com/watch?v=1U83yhGY_pI&ab_channel=EyeonTech this video] (11 minutes). A crucial point to keep in mind is that generative AI produces new output in response to a user's request or query, drawing on patterns learned from massive amounts of training data. Note further that the "G" in GPT stands for "generative".<BR /> Development of generative AI systems has been moving forward apace at [https://www.youtube.com/watch?v=hfIUstzHs9A&ab_channel=IBMTechnology companies such as IBM], whose teams are targeting a range of salient areas, including molecular structure, code, vision and earth science.
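
The idea that a generative model learns the patterns of its training data and then produces new data with similar characteristics can be shown at a very small scale. This sketch is a character-level bigram model, far simpler than a transformer and used here only as an assumed stand-in: it counts which character follows which in a hypothetical training text and then samples new text from those counts.

```python
import random
from collections import defaultdict

def train_bigrams(text):
    """Count which character follows which in the training text."""
    counts = defaultdict(lambda: defaultdict(int))
    for current, nxt in zip(text, text[1:]):
        counts[current][nxt] += 1
    return counts

def generate(counts, start, length, seed=0):
    """Sample new text one character at a time from the learned counts."""
    rng = random.Random(seed)
    out = [start]
    for _ in range(length - 1):
        followers = counts.get(out[-1])
        if not followers:                  # dead end: character never followed by anything
            break
        chars = list(followers)
        weights = [followers[c] for c in chars]
        out.append(rng.choices(chars, weights=weights)[0])
    return "".join(out)

training_text = "the cat sat on the mat. the rat ate the hat."
model = train_bigrams(training_text)
sample = generate(model, start="t", length=40)
print(sample)
```

The output is new text that was never in the training data, yet every adjacent pair of characters in it was seen during training; large generative models apply the same principle with vastly richer patterns.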

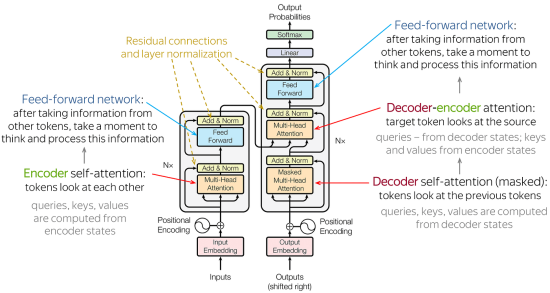

*'''''<SPAN STYLE="COLOR:BLUE">Encoder-Decoder.</SPAN>''''' [https://vitalflux.com/encoder-decoder-architecture-neural-network/ According to Analytics Yogi], in the field of AI and machine learning the encoder-decoder architecture is a widely used framework for developing neural networks that perform natural language processing (NLP) tasks, such as language translation, that require sequence-to-sequence modeling. This architecture involves a two-stage process: the input data is first encoded into a numerical representation, which is then decoded to produce an output in the desired format. In classic recurrent encoder-decoders this representation is a single fixed-length vector; a transformer encoder instead produces one vector per input token. Understanding the encoder-decoder architecture and its underlying neural network principles is important for building sophisticated models that can handle complex data sets, learn from large amounts of data, and classify and generate outputs. An [https://www.youtube.com/watch?v=4Bdc55j80l8&ab_channel=TheA.I.Hacker-MichaelPhi excellent if somewhat technical video] explains the encoder and decoder components of the transformer architecture on which GPT is built; note that GPT models use only the decoder part of that architecture.

*'''''<SPAN STYLE="COLOR:BLUE">Bidirectional Encoder Representations from Transformers (BERT)</SPAN>''''' BERT is a transformer-based language model introduced by Google in 2018. An insightful paper examines several key features of how BERT functions when it is fine-tuned for text classification: [https://arxiv.org/abs/1905.05583 arXiv paper on fine-tuning BERT].

*'''''<SPAN STYLE="COLOR:BLUE">BERT - [https://www.youtube.com/watch?v=_BFp4kjSB-I&ab_channel=SebastianRaschka very useful video] </SPAN>''''' BERT uses only the encoder part of the transformer. It is pre-trained on large amounts of text by hiding (masking) some of the words in each input and learning to predict them from the words on both sides, which is what makes it "bidirectional";
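
BERT's masked-word pre-training can be sketched as a data-preparation step. This follows the recipe described in the original BERT paper, where a position selected for prediction is replaced by [MASK] 80% of the time, by a random token 10% of the time and left unchanged 10% of the time; the per-token selection probability, word-level tokens and function name here are simplifying assumptions.

```python
import random

MASK = "[MASK]"

def mask_tokens(tokens, vocab, select_prob=0.15, seed=0):
    """Build one masked-language-model training example, BERT style.

    Each position is selected for prediction with probability select_prob.
    A selected token becomes [MASK] 80% of the time, a random vocabulary
    token 10% of the time, and stays unchanged 10% of the time.
    labels holds the original token where a prediction is required, else None.
    """
    rng = random.Random(seed)
    inputs, labels = [], []
    for tok in tokens:
        if rng.random() < select_prob:
            labels.append(tok)                 # the model must recover this token
            r = rng.random()
            if r < 0.8:
                inputs.append(MASK)
            elif r < 0.9:
                inputs.append(rng.choice(vocab))
            else:
                inputs.append(tok)
        else:
            labels.append(None)                # no prediction at this position
            inputs.append(tok)
    return inputs, labels

sentence = "the model reads the context on both sides of a hidden word".split()
inputs, labels = mask_tokens(sentence, vocab=sentence, select_prob=0.3)
print(inputs)
print(labels)
```

During pre-training the network sees `inputs` and is scored only on how well it predicts the non-None entries of `labels`, using the unmasked words to the left and right.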

*'''''<SPAN STYLE="COLOR:BLUE">Large Language Model.</SPAN>''''' A large language model is a prediction system that uses training data to condition a deep neural network. It uses statistical mechanisms to predict the next word (more precisely, the next token) based upon the preceding input, and the training data is used to iteratively condition and train the deep learning system for its specific tasks. The following two videos focus on large language models: '''''<SPAN STYLE="COLOR:BLUE">[https://www.youtube.com/watch?v=lnA9DMvHtfI&ab_channel=Graphicsin5Minutes Part one]</SPAN>''''' describes how words are used to predict subsequent words in an input text; '''''<SPAN STYLE="COLOR:BLUE">[https://www.youtube.com/watch?v=YDiSFS-yHwk&ab_channel=Graphicsin5Minutes Part two]</SPAN>''''' expands upon the earlier video with more technical detail. Together they provide a concise synopsis of how large language models predict how words are associated with each other in a body of text, and they explain why a very large training data set is required to achieve this performance.
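
Next-word prediction can be illustrated with simple counts. The sketch below is a bigram word model, an assumed and drastically simplified stand-in for an LLM: it estimates from a hypothetical corpus the probability of each word that follows a given word. That probability distribution over the next token is the same kind of output an LLM produces, although an LLM computes it with a deep network that conditions on far more context.

```python
from collections import Counter, defaultdict

def train(corpus):
    """Count, for each word, how often every other word follows it."""
    following = defaultdict(Counter)
    words = corpus.split()
    for prev, nxt in zip(words, words[1:]):
        following[prev][nxt] += 1
    return following

def next_word_distribution(model, word):
    """Convert the follow-counts for word into next-word probabilities."""
    counts = model.get(word, Counter())
    total = sum(counts.values())
    return {w: c / total for w, c in counts.items()} if total else {}

corpus = "the cat sat on the mat and the cat slept on the sofa"
model = train(corpus)
dist = next_word_distribution(model, "the")
print(dist)                       # {'cat': 0.5, 'mat': 0.25, 'sofa': 0.25}
print(max(dist, key=dist.get))    # cat
```

The counts only become reliable with lots of text, which is a small-scale version of why LLMs need very large training sets.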

[[FILE:Decoder00.png|550px|left|Decoder]]

*'''''<SPAN STYLE="COLOR:BLUE">Transformer.</SPAN>''''' A transformer is a deep learning architecture. [https://www.youtube.com/watch?v=4Bdc55j80l8&ab_channel=TheA.I.Hacker-MichaelPhi This discussion presents key concepts of how transformers work from a more conceptual point of view.] Its architecture relies on what is called a parallel multi-head attention mechanism. The modern transformer was proposed in the 2017 paper titled "Attention Is All You Need".<BR /> There is some overlap with the topics described above in the entry on encoder-decoder architecture. A significant contribution of the transformer is that it enables neural network models that require less training time than previous recurrent neural architectures such as long short-term memory (LSTM) networks. Those earlier models had difficulty when a contextually significant term lay far from the terms whose meaning it affected. Because the transformer processes an input sequence in parallel, its later variations have been adopted for training large language models on large (language) datasets, such as the Wikipedia corpus and Common Crawl. Subsequent work in this area was fundamentally influenced by the '''''Attention Is All You Need''''' paper by Ashish Vaswani et al. of the Google Brain team. A breakdown of its major concepts is presented in: '''''<SPAN STYLE="COLOR:BLUE"> [https://www.youtube.com/watch?v=sznZ78HquPc&ab_channel=Lucidate: Attention is all you need. ]</SPAN>'''''<BR />
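
The attention mechanism at the heart of the transformer can be written compactly as softmax(QK^T / sqrt(d)) V. The sketch below implements scaled dot-product attention in plain Python together with a simplified multi-head variant. As an assumption for brevity, each head works on a slice of the input directly, whereas a real transformer first applies learned projection matrices to form each head's queries, keys and values.

```python
import math

def softmax(xs):
    m = max(xs)                                  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in xs]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d)) V, row by row."""
    d = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)                # how much this query attends to each key
        outputs.append([sum(w * v[j] for w, v in zip(weights, values))
                        for j in range(len(values[0]))])
        all_weights.append(weights)
    return outputs, all_weights

def multi_head(x, num_heads):
    """Run attention independently on num_heads slices of each vector and
    concatenate the results (simplified: no learned projections)."""
    size = len(x[0]) // num_heads
    heads = []
    for h in range(num_heads):
        part = [row[h * size:(h + 1) * size] for row in x]
        out, _ = attention(part, part, part)     # self-attention within this head
        heads.append(out)
    return [sum((heads[h][i] for h in range(num_heads)), []) for i in range(len(x))]

x = [[1.0, 0.0, 0.5, 0.5], [0.0, 1.0, 0.5, -0.5], [1.0, 1.0, 0.0, 0.0]]
out, weights = attention(x, x, x)
combined = multi_head(x, num_heads=2)
print([round(w, 3) for w in weights[0]])
```

Each output vector is a weighted average of all value vectors, computed for every position at once, which is why transformers avoid the step-by-step processing of recurrent networks.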

*'''''<SPAN STYLE="COLOR:BLUE">Generative Pre-trained Transformer:</SPAN>''''' [https://www.wikiwand.com/en/Generative_pre-trained_transformer Generative pre-trained transformers (GPT)] are a type of large language model (LLM) and a prominent framework for generative artificial intelligence. The first GPT was introduced in 2018 by OpenAI. GPT models are artificial neural networks that are based on the transformer architecture, pre-trained on large data sets of unlabeled text, and able to generate novel human-like content. As of 2023, most LLMs have these characteristics and are sometimes referred to broadly as GPTs. An overview of the important features can be viewed in [https://www.youtube.com/watch?v=9ebPNEHRwXU&ab_channel=ConnorShorten this video]; the narrator touches on a number of related topics as well.
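
GPT models use the decoder side of the transformer, in which a causal mask lets each position attend only to itself and to earlier positions; this is what allows the model to be trained to predict the next token without seeing it. A minimal sketch, assuming the input vectors serve directly as queries, keys and values (real models apply learned projections first):

```python
import math

def causal_self_attention(x):
    """Self-attention with a causal mask: position i only sees positions 0..i.

    As a simplification, the input vectors are used directly as queries,
    keys and values (no learned projection matrices).
    """
    d = len(x[0])
    outputs = []
    for i, q in enumerate(x):
        visible = x[: i + 1]                               # hide all later positions
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in visible]
        m = max(scores)
        exps = [math.exp(s - m) for s in scores]
        total = sum(exps)
        weights = [e / total for e in exps]
        outputs.append([sum(w * v[j] for w, v in zip(weights, visible)) for j in range(d)])
    return outputs

x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
out = causal_self_attention(x)
print(out[0])   # the first position can only attend to itself: [1.0, 0.0]
```

Changing the last input vector leaves the outputs for the first two positions unchanged, which is exactly the property that keeps the model from peeking at future tokens.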

*'''''<SPAN STYLE="COLOR:BLUE">Generative Adversarial Networks.</SPAN>''''' A [https://www.wikiwand.com/en/Generative_adversarial_network generative adversarial network (GAN)] is a class of machine learning frameworks and a prominent approach to generative AI. The concept was initially developed by Ian Goodfellow and his colleagues in June 2014. In a GAN, two neural networks, a generator and a discriminator, contest with each other in the form of a zero-sum game, where one agent's gain is the other agent's loss.<BR /> Given a training set, this technique learns to generate new data with the same statistics as the training set. For example, a GAN trained on photographs can generate new photographs that look at least superficially authentic to human observers, having many realistic characteristics. Though originally proposed as a form of generative model for unsupervised learning, GANs have also proved useful for semi-supervised learning, fully supervised learning, and reinforcement learning. This video provides a short synopsis of [https://www.youtube.com/watch?v=_qB4B6ttXk8&ab_channel=AICoffeeBreakwithLetitia GAN capabilities.]
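
The zero-sum game can be written as the value function from the original GAN formulation, V(D, G) = E[log D(x)] + E[log(1 - D(G(z)))], which the discriminator D tries to maximize and the generator G tries to minimize. The sketch below simply evaluates that quantity for hypothetical discriminator outputs; it does not train any networks.

```python
import math

def gan_value(d_real, d_fake):
    """V(D, G) = mean of log D(x) over real samples
               + mean of log(1 - D(G(z))) over generated samples.

    d_real: discriminator's probability-of-real outputs on real samples.
    d_fake: its outputs on samples produced by the generator.
    """
    real_term = sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term

def discriminator_loss(d_real, d_fake):
    return -gan_value(d_real, d_fake)   # D maximizes V, i.e. minimizes -V

def generator_loss(d_real, d_fake):
    return gan_value(d_real, d_fake)    # G minimizes V: D's gain is G's loss

d_real = [0.9, 0.8]                        # hypothetical discriminator outputs
print(generator_loss(d_real, [0.1, 0.2]))  # D spots the fakes: higher generator loss
print(generator_loss(d_real, [0.9, 0.8]))  # D is fooled: lower generator loss
print(gan_value([0.5], [0.5]))             # D can only guess: -log 4
```

In practice the generator is often trained with the alternative "non-saturating" loss, -log D(G(z)), which gives stronger gradients early in training; the plain zero-sum form is shown here because it matches the description above.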

*'''''<SPAN STYLE="COLOR:BLUE">Recurrent Networks. </SPAN>''''' A [https://www.wikiwand.com/en/Recurrent_neural_network#Fully_recurrent recurrent neural network] (RNN) is a kind of neural network designed for sequential data: it processes a sequence one step at a time and feeds its internal state from the previous step back in alongside the current input, so the same set of weights is applied at every time step. '''''Fully recurrent neural networks (FRNN) connect the outputs of all neurons to the inputs of all neurons. '''''This is the most general neural network topology, because all other topologies can be represented by setting some connection weights to zero to simulate the lack of connections between those neurons. Diagrams of recurrent networks can be misleading, because practical neural network topologies are frequently organized in "layers" and such drawings give that appearance; what appear to be layers are, in fact, different steps in time of the same fully recurrent neural network. For an intuitive overview of recurrent neural networks, [https://www.youtube.com/watch?v=LHXXI4-IEns&ab_channel=TheA.I.Hacker-MichaelPhi have a look here].
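
The core recurrence can be sketched with scalars. This is a minimal Elman-style RNN with hypothetical weights: the same three numbers are applied at every time step, and the hidden state carries a fading memory of an input seen earlier in the sequence.

```python
import math

def rnn(sequence, w_x, w_h, b, h0=0.0):
    """Scalar Elman-style RNN: h_t = tanh(w_x * x_t + w_h * h_(t-1) + b).

    The same three weights are reused at every time step.
    """
    h = h0
    states = []
    for x in sequence:
        h = math.tanh(w_x * x + w_h * h + b)   # new state mixes input and previous state
        states.append(h)
    return states

# Hypothetical weights; a single 1.0 input followed by zeros.
states = rnn([1.0, 0.0, 0.0, 0.0], w_x=1.0, w_h=0.9, b=0.0)
print([round(s, 3) for s in states])
```

The state shrinks at each step after the input disappears; this fading memory is the weakness with distant context that LSTMs, and later transformers, were designed to address.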

*'''''<SPAN STYLE="COLOR:BLUE">Recursive Networks.</SPAN>''''' [https://www.wikiwand.com/en/Recursive_neural_network A recursive neural network is a kind of deep neural network] created by applying the same set of weights recursively over a structured input, to produce a structured prediction over variable-size input structures, or a scalar prediction on it, by traversing a given structure in topological order. Recursive neural networks, sometimes abbreviated as RvNNs, have been successful, for instance, in learning sequence and tree structures in natural language processing, mainly continuous representations of phrases and sentences based on word embeddings. RvNNs were first introduced to learn distributed representations of structure, such as logical terms, and models and general frameworks have been developed in further work since the 1990s. Recursive neural networks have also been used to address the task of moving from individual words to phrases in natural language understanding; a [https://www.youtube.com/watch?v=AqEF2HIMjYA&ab_channel=HugoLarochelle short but useful video] presents several core elements of how that problem was solved.
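
Composition over a tree structure can be sketched as follows, assuming hypothetical two-dimensional word vectors and composition weights. A single weight matrix and bias are applied at every internal node of a binary parse tree, turning two child vectors into one parent vector of the same size, so a phrase of any length ends up as one fixed-size vector.

```python
import math

def compose(left, right, weights, bias):
    """Parent vector p = tanh(W [left; right] + b), same size as each child."""
    child = left + right                              # concatenate the two children
    return [math.tanh(sum(w * c for w, c in zip(row, child)) + b_i)
            for row, b_i in zip(weights, bias)]

def encode(tree, embeddings, weights, bias):
    """tree is a word (str) or a (left, right) pair, e.g. from a binary parse."""
    if isinstance(tree, str):
        return embeddings[tree]
    left, right = tree
    return compose(encode(left, embeddings, weights, bias),
                   encode(right, embeddings, weights, bias),
                   weights, bias)                      # same W and b at every node

# Hypothetical 2-dimensional word vectors and composition parameters.
EMB = {"very": [0.1, 0.5], "good": [0.7, 0.2], "movie": [0.3, -0.4]}
W = [[0.5, -0.2, 0.3, 0.1], [0.0, 0.4, -0.1, 0.6]]
B = [0.0, 0.1]

phrase = encode((("very", "good"), "movie"), EMB, W, B)
print(phrase)   # one 2-dimensional vector for the whole phrase
```

In a trained model, W, B and the word vectors would be learned so that the phrase vector is useful for a task such as sentiment classification.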

*'''''<SPAN STYLE="COLOR:BLUE">Fine Tuning CG4.</SPAN>''''' The [https://platform.openai.com/docs/guides/fine-tuning OpenAI Documentation page] gives instructions for fine-tuning OpenAI models; which models (including GPT-4) can be fine-tuned depends on the access OpenAI offers at the time. Here is some insight on how to [https://www.youtube.com/watch?v=boHXgQ5eQic&ab_channel=JamesBriggs fine-tune a ChatGPT 3.5] system. For a less technical overview of when to use fine-tuning, with some basic instructions on how to perform it, have a look at [https://www.youtube.com/watch?v=z_x7j5qv6qo&ab_channel=MichaelBorman this video]. For readers interested in more practical uses, this video explains how to [https://www.youtube.com/watch?v=c_nCjlSB1Zk&ab_channel=AIJason perform fine-tuning to capture business knowledge.]
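
Fine-tuning data for OpenAI's chat models is prepared as a JSONL file: one JSON object per line, each holding a short conversation in a "messages" list. The sketch below only builds that file's contents with the standard library; the example conversations and system prompt are hypothetical, and the current OpenAI documentation should be checked for the exact format and upload steps for a given model.

```python
import json

SYSTEM_PROMPT = "You are a support assistant for a hypothetical online store."

# Hypothetical question/answer pairs standing in for real business knowledge.
EXAMPLES = [
    ("What is your refund window?", "Refunds are accepted within 30 days of purchase."),
    ("Do you ship overseas?", "Yes, we ship to most countries."),
]

def to_jsonl(pairs, system_prompt):
    """Return JSONL text: one chat-formatted training example per line."""
    lines = []
    for question, answer in pairs:
        record = {
            "messages": [
                {"role": "system", "content": system_prompt},
                {"role": "user", "content": question},
                {"role": "assistant", "content": answer},
            ]
        }
        lines.append(json.dumps(record))
    return "\n".join(lines) + "\n"

data = to_jsonl(EXAMPLES, SYSTEM_PROMPT)
print(data)
```

Saved to a file with a .jsonl extension, this text is the kind of training file the fine-tuning guide asks for; the upload and job-creation steps are left to that documentation rather than reproduced here.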

*'''''<SPAN STYLE="COLOR:BLUE">Supervised or Unsupervised Learning.</SPAN>''''' [https://www.wikiwand.com/en/Supervised_learning Supervised learning (SL)] is a paradigm in machine learning where input objects (for example, a vector of predictor variables) and a desired output value (also known as a human-labeled supervisory signal) are used to train a model. The training data is processed to build a function that maps new data to expected output values. An optimal scenario allows the algorithm to correctly determine output values for unseen instances. This requires the learning algorithm to generalize from the training data to unseen situations in a "reasonable" way (see inductive bias). This statistical quality of an algorithm is measured through the so-called generalization error. Unsupervised learning, by contrast, is a paradigm in machine learning where, unlike supervised and semi-supervised learning, '''''<SPAN STYLE="COLOR:BLUE">algorithms learn patterns exclusively from unlabeled data.</SPAN>''''' [https://www.youtube.com/watch?v=W01tIRP_Rqs&ab_channel=IBMTechnology This short video] presents a summarized explanation of the difference.
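
The difference can be seen side by side on a hypothetical one-dimensional data set. In the supervised sketch a nearest-centroid classifier is given the labels; in the unsupervised sketch k-means (with k = 2 and a simple deterministic initialization, both assumptions) has to discover the two groups on its own.

```python
def nearest_centroid_fit(points, labels):
    """Supervised: the labels tell us which group each training point is in."""
    groups = {}
    for p, label in zip(points, labels):
        groups.setdefault(label, []).append(p)
    return {label: sum(ps) / len(ps) for label, ps in groups.items()}

def nearest_centroid_predict(centroids, p):
    """Assign p the label of the closest class centre."""
    return min(centroids, key=lambda label: abs(p - centroids[label]))

def kmeans_1d(points, k=2, iterations=10):
    """Unsupervised: no labels are given; k-means finds k groups by itself."""
    ordered = sorted(points)
    centers = ordered[:: max(1, len(points) // k)][:k]    # simple deterministic start
    for _ in range(iterations):
        clusters = [[] for _ in centers]
        for p in points:
            nearest = min(range(len(centers)), key=lambda i: abs(p - centers[i]))
            clusters[nearest].append(p)
        centers = [sum(c) / len(c) if c else centers[i] for i, c in enumerate(clusters)]
    return centers

points = [1.0, 1.2, 0.8, 5.0, 5.2, 4.8]
labels = ["small", "small", "small", "large", "large", "large"]

centroids = nearest_centroid_fit(points, labels)
print(nearest_centroid_predict(centroids, 1.1))   # small
print(sorted(kmeans_1d(points)))                  # two centres near 1.0 and 5.0
```

Both approaches end up with similar group centres here, but only the supervised one knows that the groups are called "small" and "large"; the unsupervised one finds structure without names.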

*'''''<SPAN STYLE="COLOR:BLUE">Pretrained.</SPAN>''''' A pretrained AI model [https://blogs.nvidia.com/blog/2022/12/08/what-is-a-pretrained-ai-model/ is a deep learning model] (an expression of a brain-like neural algorithm that finds patterns or makes predictions based on data) that’s trained on large datasets to accomplish a specific task. It can be used as is or further fine-tuned to fit an application’s specific needs.<BR /><BR />Why are pretrained AI models used? Instead of building an AI model from scratch, developers can use pretrained models and customize them to meet their requirements. To build an AI application, developers first need an AI model that can accomplish a particular task, whether that’s identifying a mythical horse, detecting a safety hazard for an autonomous vehicle or diagnosing a cancer based on medical imaging. That model needs a lot of representative data to learn from. This learning process entails going through several layers of incoming data and emphasizing goal-relevant characteristics at each layer. To create a model that can recognize a unicorn, for example, one might first feed it images of unicorns, horses, cats, tigers and other animals. This is the incoming data.<BR /><BR />Then, layers of representative data traits are constructed, beginning with the simple (like lines and colors) and advancing to complex structural features. These characteristics are assigned varying degrees of relevance by calculating probabilities. As opposed to a cat or tiger, for example, the more like a horse a creature appears, the greater the likelihood that it is a unicorn. Such probabilistic values are stored at each neural network layer in the AI model, and as layers are added, its understanding of the representation improves. To create such a model from scratch, developers require enormous datasets, often with billions of rows of data. These can be pricey and challenging to obtain, but compromising on data can lead to poor performance of the model.
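
Reusing a pretrained model typically means keeping its learned layers fixed and training only a small new part for the application. The sketch below assumes a hypothetical frozen "pretrained" layer with fixed weights and fine-tunes only a logistic-regression head on a tiny hypothetical task; real pretrained models have millions or billions of parameters and are loaded from a library or model hub.

```python
import math

# Stand-in for a pretrained layer: hypothetical weights learned "elsewhere"
# and kept frozen below.
PRETRAINED_W = [[1.0, -1.0], [0.5, 0.5]]

def features(x):
    """Frozen pretrained layer: 2-d input -> 2 ReLU features. Never updated."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in PRETRAINED_W]

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict(w, b, x):
    f = features(x)
    return sigmoid(sum(wi * fi for wi, fi in zip(w, f)) + b)

def train_head(data, lr=0.5, epochs=200):
    """Fine-tune only a new logistic-regression head on top of frozen features."""
    w, b = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for x, y in data:
            err = predict(w, b, x) - y           # log-loss gradient w.r.t. the logit
            f = features(x)
            w = [wi - lr * err * fi for wi, fi in zip(w, f)]
            b -= lr * err
    return w, b

# Tiny hypothetical task: label 1 when the first coordinate is the larger one.
data = [([2.0, 0.0], 1), ([1.0, 0.2], 1), ([0.0, 2.0], 0), ([0.2, 1.0], 0)]
w, b = train_head(data)
print([round(predict(w, b, x), 3) for x, _ in data])
```

Only three numbers (two head weights and a bias) are trained, which is why fine-tuning a pretrained model needs far less data and compute than building one from scratch.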

Revision as of 17:38, 15 October 2023

Theory of Operations

Core Elements

Types

CG4 – Theory of Operation: CG4 is a narrow artificial intelligence system that is a Generative Pre-trained Transformer.

In order to make sense of this one would be well advised to understand several fundamental concepts associated with this technology. Because this is a highly technical subject the following is intended to introduce the core elements. The reader is encouraged to review the literature and body of insight that is currently available as explanatory video content.

By way of clarifying the topics of this work we organize these concepts into two primary groups. The first group offer basic information

on the fundamental building blocks of Large Language Models of which CG4 is a recent example. The second group introduces or otherwise clarifies

terms that have come to the forefront of recent public discussions and issues.

Fundamental Building Block Concepts.

- GPT3: a smaller precursor version of the GPT4 system;

- GPT3.5: a higher performance version of GPT3 but still falls short of the full-up GTP4 successor;

- GPT4: a LLM with over one trillion parameters;

- Chat GPT4: the conversational version of GPT4;

- Artificial Neural Networks. Four excellent episodes providing very useful insight into some underlying theory of how artificial neural networks perform their actions.

- From 3Blue1Brown: Home Page

- Neural_Network_Basics Some basic principles in how neural networks structures are mapped to a problem.

- Gradient DescentA somewhat technical topic requiring a careful examination on the part of the reader who is unfamiliar with the mathematical background.

- Back Propagation, intuitively, what is going on?

It graphically shows the mathematics behind how a neural network attempts to "home in" on a target; what we can see is the mathematics that underlies how training is iteratively done and the distance difference between the neural network's learning state gradually begins to converge with the actual desired response; - Back Propagation TheoryHow various layers in a deep learning neural network interact with each other.

A much closer mathematical description of how prior and successive neuron values contribute to successive neuron values; then how they ultimately contribute to the output neuron that they connect to and whether it will activate or not; this is a highly mathematical description using advanced partial derivative calculus;

- From 3Blue1Brown: Home Page

- Deep Learning: the technique of using representing an artificial neural network type structure to solve extremely complex problems; a neural network it typically will work using a large number of what are called "hidden layers"; they achieve greater performance when they are trained on very large data sets; these data sets can range from millions to billions of examples;

- Hidden Layer: One or more network layers that stands between an input layer and the final output layer. Each input layer is connected to all of the nodes in the first hidden layer. Subsequent hidden layers are added as a means to provide greater discriminatory resolution to the neural network. Each node in a hidden layer can be connected to each node in the following hidden layer. The more hidden layers the more sophisticated can be the ability of the neural network to perform its tasks.

In neural networks, a hidden layer is located between the input and output of the algorithm, in which the function applies weights to the inputs and directs them through an activation function as the output. In short, the hidden layers perform nonlinear transformations of the inputs entered into the network. Hidden layers vary depending on the function of the neural network, and similarly, the layers may vary depending on their associated weights. Useful insight into what hidden layers are doing can be viewed using this hidden layers video For an interactive view of how hidden layers enable a neural network to learn a new function one can monitor this video by sentdex; it has no audio but provides excellent insight into how the various layers and nodes within a layer develops an approximation of the function that it is attempting to learn.

- Parameters: are the coefficients of the model, and they are chosen by the model itself. It means that the algorithm, while learning, optimizes these coefficients (according to a given optimization strategy) and returns an array of parameters which minimize the error. To give an example, in a linear regression task, you have your model that will look like y=b + ax, where b and a will be your parameter. The only thing you have to do with those parameters is to initialize them.

- Tokens: Making text into tokens. These become vector representations. They form the basis of the neuron values for each neuron in a neural network. The narrator provides a short and succinct introduction to the basic concepts of what a token is and how it is represented as a vector.

- Generative Artificial Intelligence Generative artificial intelligence (AI) is artificial intelligence capable of generating text, images, or other media, using generative models. Generative AI models learn the patterns and structure of their input training data and then generate new data that has similar characteristics. A detailed oil painting of figures in a futuristic opera scene Théâtre d'Opéra Spatial, an image generated by Midjourney. In the early 2020s, advances in transformer-based deep neural networks enabled a number of generative AI systems notable for accepting natural language prompts as input. These include large language model chatbots such as ChatGPT, Bing Chat, Bard, and LLaMA, and text-to-image artificial intelligence art systems such as Stable Diffusion, Midjourney, and DALL-E.

A summary of the key elements of what generative artificial intelligence are explained in this video (11 minutes); note that a key and crucial point to keep in mind is that generative ai generates new output based upon a user's request or query; it uses massive amounts of training data to draw from in order to generate its output; note further that the "G" in GPT is derived from generative artificial intelligence;

Subsequent developments with generative ai systems have been moving forward apace at companies such as IBM. Their efforts are targeting a range of salient topic areas. To name a few their teams are addressing topics such as molecular structure, code, vision, earth science.

- Encoder-Decoder. According to Analytics YogiIn the field of AI / machine learning, the encoder-decoder architecture is a widely-used framework for developing neural networks that can perform natural language processing (NLP) tasks such as language translation, etc which requires sequence to sequence modeling. This architecture involves a two-stage process where the input data is first encoded into a fixed-length numerical representation, which is then decoded to produce an output that matches the desired format. As a data scientist, understanding the encoder-decoder architecture and its underlying neural network principles is crucial for building sophisticated models that can handle complex data sets. By leveraging encoder-decoder neural network architecture, data scientists can design neural networks that can learn from large amounts of data, accurately classify and generate outputs, and perform tasks that require high-level reasoning and decision-making. An excellent if somewhat technical video describes and explains the architecture of what is going on with the encoder and decoder components of the GPT system.

- Bidirectional Encoder Representations from Transformers (BERT) An insightful paper on several key features of what BERT is about and how it functions. official archive paper on BERT.

- BERT - very useful video a prediction system that uses training data that uses statistical mechanisms to anticipate a subsequent input based upon the most recent input;

- Large Language Model. Is a prediction system that uses training data to enable a deep neural network to perform recognition tasks. It uses statistical mechanisms to anticipate subsequent input based upon the prior input. Prediction systems use this training data to iteratively condition and train the deep learning system for its specific tasks. The following two videos provide focus on large language models; this is part one of large language models. it describes how words are used to predict subsequent words in an input text; here is part two and it is an expansion upon the earlier video but offers more technical detail. Together they provide a fairly concise synopsis of how large language models make predictions of how words are associated with each other in a body of text. They explain further how they require a very large training data set so as to achieve their performance.

- Transformer. A transformer is a deep learning architecture. This discussion presents key concepts of how transformers work from a more conceptual point of view. The architecture relies on what is called a parallel multi-head attention mechanism. The modern transformer was proposed in the 2017 paper titled "Attention Is All You Need".

There is some overlap with topics described above in the section on encoder-decoder architecture. A significant contribution of the transformer is that it enables neural network models that require less training time than earlier recurrent neural architectures. It also addressed a weakness of those earlier models, including long short-term memory (LSTM) networks: contextually significant terms might lie far from the semantically related terms they qualify, and such long-range relationships were hard for sequential models to retain. Later variations of the transformer have been adopted for training large language models on large (language) datasets, such as the Wikipedia corpus and Common Crawl, because the input sequence can be processed in parallel. Subsequent work on these difficulties was fundamentally influenced by the Attention Is All You Need paper by Ashish Vaswani et al. of the Google Brain team. A breakdown of its major concepts is presented in: Attention is all you need.
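The attention mechanism at the heart of the transformer can be sketched in a few lines. The code below implements scaled dot-product attention, softmax(Q K^T / sqrt(d_k)) V as defined in "Attention Is All You Need", plus a simplified multi-head wrapper that runs several heads on separately projected inputs and concatenates the results. For brevity it omits the learned output projection, masking and batching of the real architecture, and the projection matrices in the usage example are arbitrary hand-picked values. Each query row is computed independently of the others, which is what lets real implementations process a whole sequence in parallel instead of step by step as a recurrent network must.

```python
import math


def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(queries, keys, values):
    """softmax(Q K^T / sqrt(d_k)) V, with Q, K and V given as lists of row vectors."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:  # each query is independent of the others, so this loop parallelizes
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # Output = attention-weighted average of the value vectors.
        out = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights


def project(x, w):
    """Multiply each row vector in x by matrix w (a list of rows)."""
    return [[sum(xi * w[i][j] for i, xi in enumerate(row)) for j in range(len(w[0]))] for row in x]


def multi_head_attention(x, heads):
    """Run each head on its own projections of x and concatenate the outputs.

    Each head is a (W_q, W_k, W_v) triple. The learned output projection used
    in the real transformer is omitted to keep the sketch short.
    """
    concat = [[] for _ in x]
    for w_q, w_k, w_v in heads:
        out, _ = scaled_dot_product_attention(project(x, w_q), project(x, w_k), project(x, w_v))
        for row, piece in zip(concat, out):
            row.extend(piece)
    return concat


x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # three token vectors of dimension 2
identity = [[1.0, 0.0], [0.0, 1.0]]
swap = [[0.0, 1.0], [1.0, 0.0]]
heads = [(identity, identity, identity), (swap, identity, identity)]  # arbitrary projections
print(multi_head_attention(x, heads))  # 3 rows, each 2 heads x 2 dims = 4 numbers
```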

- Generative Pre-trained Transformer. Generative pre-trained transformers (GPT) are a type of large language model (LLM) and a prominent framework for generative artificial intelligence. The first GPT was introduced in 2018 by OpenAI. GPT models are artificial neural networks that are based on the transformer architecture, pre-trained on large data sets of unlabeled text, and able to generate novel human-like content. As of 2023, most LLMs have these characteristics and are sometimes referred to broadly as GPTs. An overview of the important features can be viewed in a video whose narrator touches on a number of related topics as well.
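Two ideas make a GPT "generative" in practice. First, its attention is causal: each position may look only at earlier positions, so the model learns to predict the next token without seeing it. Second, text is produced autoregressively: the model predicts one token, appends it to the input, and repeats. The sketch below shows both under simplifying assumptions; the lookup table behind `next_token` is a hypothetical stand-in for the trained network, which in a real GPT would consider the whole preceding context rather than only the last token.

```python
import math


def causal_attention_weights(scores):
    """Turn a square matrix of raw attention scores into weights where
    position i may attend only to positions 0..i (never to later tokens)."""
    n = len(scores)
    weights = []
    for i in range(n):
        visible = scores[i][: i + 1]  # scores for positions j <= i
        m = max(visible)  # subtract the max for numerical stability
        exps = [math.exp(s - m) for s in visible]
        total = sum(exps)
        # Softmax over the visible positions; masked (future) positions get weight 0.
        weights.append([e / total for e in exps] + [0.0] * (n - i - 1))
    return weights


def generate(next_token, prompt, max_new_tokens):
    """Autoregressive decoding: predict one token, append it, and repeat."""
    tokens = list(prompt)
    for _ in range(max_new_tokens):
        tokens.append(next_token(tokens))
    return tokens


# Hypothetical stand-in for a trained GPT: looks only at the last token.
TABLE = {"the": "cat", "cat": "sat", "sat": "down", "down": "."}


def next_token(tokens):
    return TABLE.get(tokens[-1], ".")


print(causal_attention_weights([[1.0, 2.0, 3.0], [4.0, 5.0, 6.0], [7.0, 8.0, 9.0]]))
print(generate(next_token, ["the"], 4))  # ['the', 'cat', 'sat', 'down', '.']
```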

- Generative Adversarial Networks. A generative adversarial network (GAN) is a class of machine learning frameworks and a prominent framework for approaching generative AI. The concept was initially developed by Ian Goodfellow and his colleagues in June 2014. In a GAN, two neural networks, a generator and a discriminator, contest with each other in the form of a zero-sum game, where one agent's gain is another agent's loss.

Given a training set, this technique learns to generate new data with the same statistics as the training set. For example, a GAN trained on photographs can generate new photographs that look at least superficially authentic to human observers, having many realistic characteristics. Though originally proposed as a form of generative model for unsupervised learning, GANs have also proved useful for semi-supervised learning, fully supervised learning, and reinforcement learning. This video provides a short synopsis of what GANs can do.
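The zero-sum game described above is usually written, following Goodfellow et al. (2014), as a single value function V that the discriminator D tries to maximize and the generator G tries to minimize. Here p_data is the distribution of the training set, p_z is the noise distribution fed to the generator, and p_g is the distribution of the generator's output:

```latex
\min_{G} \max_{D} V(D, G)
  = \mathbb{E}_{x \sim p_{\text{data}}(x)}\bigl[\log D(x)\bigr]
  + \mathbb{E}_{z \sim p_{z}(z)}\bigl[\log\bigl(1 - D(G(z))\bigr)\bigr]

D^{*}_{G}(x) = \frac{p_{\text{data}}(x)}{p_{\text{data}}(x) + p_{g}(x)}
```

For a fixed generator, the best possible discriminator is D*_G. When the generator has learned to match the training statistics (p_g = p_data), D*_G(x) = 1/2 everywhere: the discriminator can do no better than guessing, which is the sense in which the generated data has "the same statistics as the training set".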

- Recurrent Networks. A recurrent neural network (RNN) is a kind of deep neural network that processes a sequence one element at a time, applying the same set of weights at every time step and feeding its internal (hidden) state from one step back in as input to the next, so that earlier inputs can influence later outputs. Fully recurrent neural networks (FRNN) connect the outputs of all neurons to the inputs of all neurons. This is the most general neural network topology, because all other topologies can be represented by setting some connection weights to zero to simulate the lack of connections between those neurons. The illustration to the right may be misleading to many because practical neural network topologies are frequently organized in "layers" and the drawing gives that appearance. However, what appear to be layers are, in fact, different steps in time of the same fully recurrent neural network. For an intuitive overview of recurrent neural networks, have a look here.
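The weight sharing across time steps can be seen in a minimal recurrent network with a single-number hidden state (real RNNs use vectors and weight matrices; the weight values here are arbitrary). Unrolling the loop over the input sequence gives exactly the "different steps in time of the same network" picture described above.

```python
import math


def simple_rnn(inputs, w_x, w_h, b, h0=0.0):
    """A minimal recurrent network with a one-number hidden state.

    The SAME weights (w_x, w_h, b) are reused at every time step, and the
    hidden state h carries information forward from earlier inputs.
    """
    h = h0
    states = []
    for x in inputs:
        h = math.tanh(w_x * x + w_h * h + b)  # new state from current input + previous state
        states.append(h)
    return states


# A single "pulse" followed by zeros: the state decays but does not vanish at once,
# showing that the network remembers the earlier input for a while.
print(simple_rnn([1.0, 0.0, 0.0], w_x=1.0, w_h=0.5, b=0.0))
```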

- Recursive Networks. A recursive neural network is a kind of deep neural network created by applying the same set of weights recursively over a structured input, to produce a structured prediction over variable-size input structures, or a scalar prediction on it, by traversing a given structure in topological order. Recursive neural networks, sometimes abbreviated as RvNNs, have been successful, for instance, in learning sequence and tree structures in natural language processing, mainly phrase and sentence continuous representations based on word embedding. RvNNs were first introduced to learn distributed representations of structure, such as logical terms. Models and general frameworks have been developed in further works since the 1990s. Recursive neural networks were also used to tackle the problem of building representations of phrases from the representations of their words in natural language understanding. A short but useful video presents several core elements of how the problem was solved.
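The recursive idea, one shared composition function applied at every node of a tree, can be sketched as follows, loosely in the style of the word-to-phrase models mentioned above. Assumptions: the two-dimensional word vectors, the weight matrix `W` and the bias `B` are hand-picked, hypothetical values (a real RvNN learns them), and the tree is written as nested pairs rather than produced by a parser. Children are always encoded before their parent, which is the topological order the definition refers to.

```python
import math


def compose(left, right, w, b):
    """Combine two child vectors into a parent vector of the same size:
    parent = tanh(W [left; right] + b). The same W and b are used at every node."""
    child = left + right  # list concatenation: length 2 * d
    return [math.tanh(sum(wij * cj for wij, cj in zip(row, child)) + bi) for row, bi in zip(w, b)]


def encode_tree(tree, embed, w, b):
    """A tree is either a word (leaf) or a (left, right) pair; children are encoded first."""
    if isinstance(tree, str):
        return embed[tree]
    left, right = tree
    return compose(encode_tree(left, embed, w, b), encode_tree(right, embed, w, b), w, b)


# Hypothetical 2-d word vectors and shared composition weights (a real RvNN learns these).
embed = {"very": [0.1, 0.3], "good": [0.8, -0.2], "movie": [0.5, 0.5]}
W = [[0.5, -0.3, 0.2, 0.4],
     [0.1, 0.6, -0.5, 0.3]]
B = [0.0, 0.0]

phrase = encode_tree((("very", "good"), "movie"), embed, W, B)  # ((very good) movie)
print(phrase)  # one 2-d vector for the whole phrase
```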

- Fine Tuning CG4. Use the instructions on the OpenAI documentation page to fine-tune CG4. Here is some insight on how to fine-tune a ChatGPT 3.5 system. For a somewhat less technical overview of when to use fine-tuning, with some basic instructions on how to perform it, have a look at this video. For observers interested in more practical uses, this video explains how to perform fine-tuning to capture business knowledge.
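Most of the practical work in fine-tuning is preparing training examples. The sketch below writes examples in the JSON Lines chat format (one {"messages": [...]} object per line, with system, user and assistant roles) that OpenAI's fine-tuning guide described for GPT-3.5 Turbo at the time of writing; check the current documentation before relying on it, since the format and the set of models that can be fine-tuned may change. The questions, answers and system prompt are hypothetical placeholders, and the upload and job-creation steps are left to the official instructions.

```python
import json

# Hypothetical placeholder content: replace with examples from your own domain.
SYSTEM_PROMPT = "You are a helpful assistant for Example Corp."
examples = [
    ("What are your support hours?", "Our support desk is open 9:00 to 17:00, Monday to Friday."),
    ("How do I reset my password?", "Use the 'Forgot password' link on the sign-in page."),
]


def to_chat_record(question, answer):
    """One training example in the chat 'messages' format."""
    return {
        "messages": [
            {"role": "system", "content": SYSTEM_PROMPT},
            {"role": "user", "content": question},
            {"role": "assistant", "content": answer},  # the reply the model should learn
        ]
    }


def to_jsonl(pairs):
    """JSON Lines: one JSON object per line, with no enclosing list."""
    return "".join(json.dumps(to_chat_record(q, a)) + "\n" for q, a in pairs)


jsonl_text = to_jsonl(examples)
print(jsonl_text, end="")
# To produce the training file:
# with open("training_data.jsonl", "w", encoding="utf-8") as f:
#     f.write(jsonl_text)
```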

- Supervised or Unsupervised Learning. Supervised learning (SL) is a paradigm in machine learning where input objects (for example, a vector of predictor variables) and a desired output value (also known as a human-labeled supervisory signal) train a model. The training data is processed, building a function that maps new data to expected output values. An optimal scenario will allow the algorithm to correctly determine output values for unseen instances. This requires the learning algorithm to generalize from the training data to unseen situations in a "reasonable" way (see inductive bias). This statistical quality of an algorithm is measured through the so-called generalization error. Unsupervised learning is a paradigm in machine learning where, in contrast to supervised learning and semi-supervised learning, algorithms learn patterns exclusively from unlabeled data. This short video presents a summarized explanation of the difference.
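The contrast can be seen in two tiny examples. In the first, every input x comes with a labeled output y (the supervisory signal), and least-squares regression learns a function that predicts y for unseen x. In the second, the points carry no labels at all, and k-means clustering (with k = 2, an arbitrary choice here) discovers the two groups on its own. Both are simplified illustrations chosen for brevity, not the only or most common algorithms in each paradigm.

```python
def fit_line(xs, ys):
    """Supervised: learn y = a*x + b from labeled (x, y) pairs by least squares."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    a = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum((x - mx) ** 2 for x in xs)
    return a, my - a * mx


def two_means(points, iters=10):
    """Unsupervised: split unlabeled 1-d points into two clusters (k-means, k = 2)."""
    c1, c2 = min(points), max(points)  # start the two centroids at the extremes
    for _ in range(iters):
        g1 = [p for p in points if abs(p - c1) <= abs(p - c2)]
        g2 = [p for p in points if abs(p - c1) > abs(p - c2)]
        if not g1 or not g2:  # all points identical: nothing to split
            break
        c1, c2 = sum(g1) / len(g1), sum(g2) / len(g2)  # move each centroid to its group's mean
    return sorted([c1, c2])


a, b = fit_line([1.0, 2.0, 3.0, 4.0], [3.0, 5.0, 7.0, 9.0])  # labels follow y = 2x + 1
print(a * 10 + b)  # prediction for an unseen input x = 10: 21.0
print(two_means([1.0, 1.2, 0.8, 5.0, 5.2, 4.8]))  # two centroids, near 1.0 and 5.0
```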

- Pretrained. A pretrained AI model is a deep learning model (an expression of a brain-like neural algorithm that finds patterns or makes predictions based on data) that is trained on large datasets to accomplish a specific task. It can be used as is or further fine-tuned to fit an application's specific needs. Why are pretrained AI models used? Instead of building an AI model from scratch, developers can use pretrained models and customize them to meet their requirements. To build an AI application, developers first need an AI model that can accomplish a particular task, whether that is identifying a mythical horse, detecting a safety hazard for an autonomous vehicle or diagnosing a cancer based on medical imaging. That model needs a lot of representative data to learn from. This learning process entails passing the incoming data through several layers and emphasizing goal-relevant characteristics at each layer. To create a model that can recognize a unicorn, for example, one might first feed it images of unicorns, horses, cats, tigers and other animals. This is the incoming data.

Then, layers of representative data traits are constructed, beginning with the simple, like lines and colors, and advancing to complex structural features. These characteristics are assigned varying degrees of relevance by calculating probabilities. For example, the more a creature resembles a horse rather than a cat or tiger, the greater the likelihood that it is a unicorn. The learned values (weights) that encode these relevances are stored at each neural network layer in the AI model, and as layers are added, the model's representation of the data can improve. To create such a model from scratch, developers require enormous datasets, often with billions of rows of data. These can be expensive and challenging to obtain, but compromising on data can lead to poor model performance.
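The reuse idea behind pretrained models can be sketched as: keep the pretrained part frozen and train only a small new "head" for the task at hand. In the sketch below, `pretrained_features` is a hypothetical stand-in for a frozen pretrained network (it simply reads two made-up attribute scores instead of computing features from pixels), and the head is a logistic-regression classifier trained by gradient descent to separate unicorns from other animals. Because the heavy lifting is assumed to have been done during pretraining, the head needs only a handful of labeled examples.

```python
import math


def pretrained_features(image):
    """Hypothetical stand-in for a frozen pretrained network.

    A real one would compute learned features from pixels; here each 'image'
    is already a dict of two made-up attribute scores in [0, 1].
    """
    return [image["horse_like"], image["has_horn"]]


def predict(w, b, image):
    """Probability that the image shows a unicorn, according to the head."""
    f = pretrained_features(image)
    z = sum(wi * fi for wi, fi in zip(w, f)) + b
    return 1.0 / (1.0 + math.exp(-z))


def train_head(examples, lr=0.5, epochs=200):
    """Train only a small logistic-regression head; the features stay frozen."""
    w, b = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for image, label in examples:
            err = predict(w, b, image) - label  # gradient of the log-loss w.r.t. the logit
            f = pretrained_features(image)
            w = [wi - lr * err * fi for wi, fi in zip(w, f)]
            b -= lr * err
    return w, b


training = [
    ({"horse_like": 0.9, "has_horn": 0.9}, 1),   # unicorn
    ({"horse_like": 0.9, "has_horn": 0.05}, 0),  # horse
    ({"horse_like": 0.1, "has_horn": 0.0}, 0),   # tiger
    ({"horse_like": 0.2, "has_horn": 0.0}, 0),   # cat
    ({"horse_like": 0.8, "has_horn": 0.95}, 1),  # another unicorn
]
w, b = train_head(training)
print(round(predict(w, b, {"horse_like": 0.85, "has_horn": 0.9}), 3))  # expected well above 0.5
```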